Mirror of https://github.com/LibreQoE/LibreQoS.git (synced 2025-02-25 18:55:32 -06:00)

Merge branch 'develop' into unifig

.github/workflows/lint_python.yml

@@ -24,7 +24,7 @@ jobs:
- run: pytest . || true
- run: pytest --doctest-modules . || true
- run: shopt -s globstar && pyupgrade --py37-plus **/*.py || true
- run: safety check
- run: safety check --ignore=62044
#- uses: pyupio/safety@2.3.4
# with:
# api-key: ${{secrets.SAFETY_API_KEY}}

@@ -8,7 +8,11 @@ Learn more at [LibreQoS.io](https://libreqos.io/)!

## Sponsors

Special thanks to Equinix for providing server resources to support the development of LibreQoS.
LibreQoS' development is made possible by our sponsors, the NLnet Foundation and Equinix.

LibreQoS has been funded through the NGI0 Entrust Fund, a fund established by NLnet with financial support from the European Commission’s Next Generation Internet programme, under the aegis of DG Communications Networks, Content and Technology under grant agreement No 101069594. Learn more at https://nlnet.nl/project/LibreQoS/

Equinix supports LibreQoS through its Open Source program – providing access to hardware resources on its Equinix Metal infrastructure. Equinix's support has been crucial for LibreQoS to scale past 10Gbps for higher-bandwidth networks. Learn more about Equinix Metal here.
Learn more about [Equinix Metal here](https://deploy.equinix.com/metal/).

## Support LibreQoS

@@ -9,7 +9,7 @@

|

||||

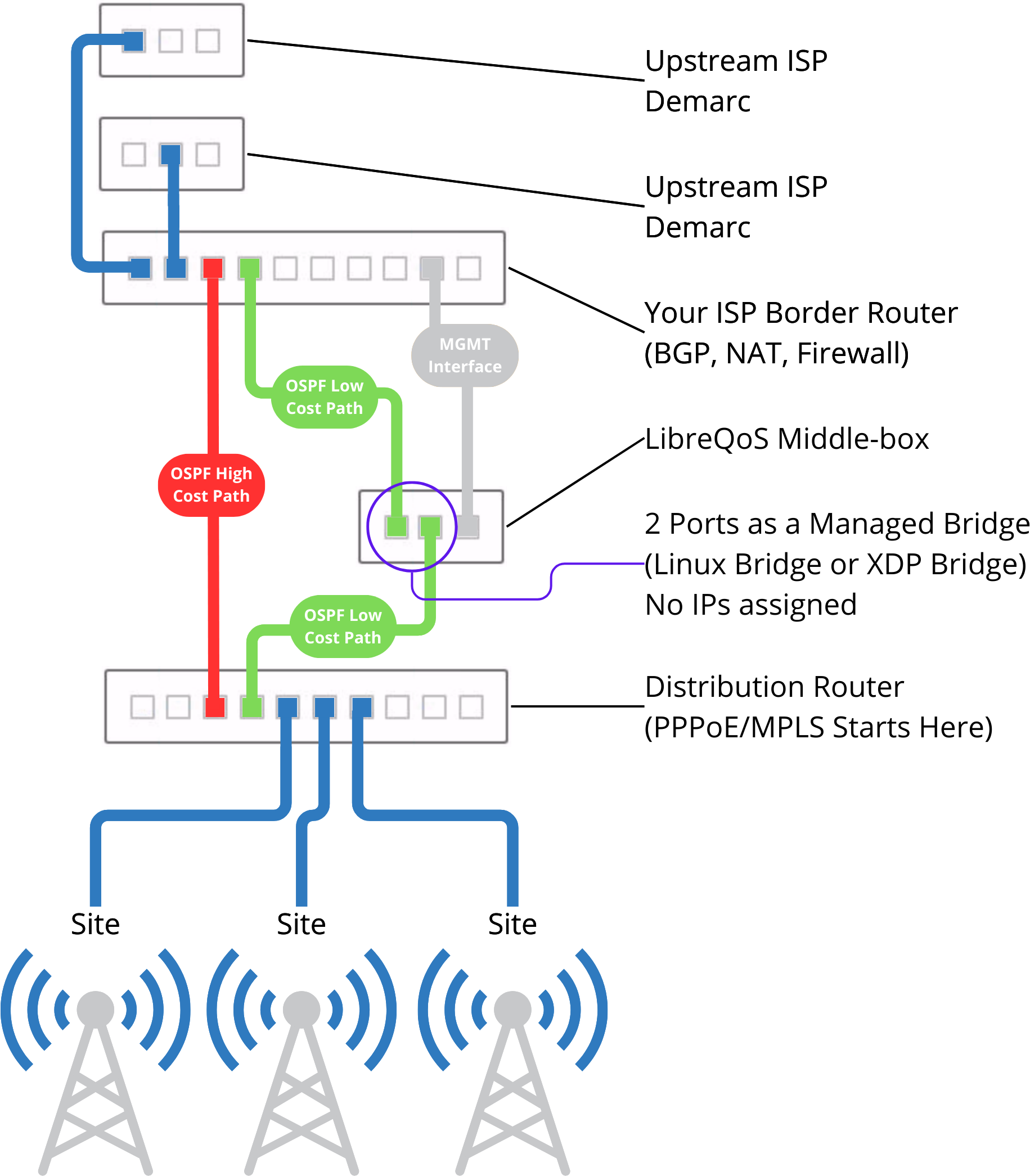

- OSPF primary link (low cost) through the server running LibreQoS

|

||||

- OSPF backup link (high cost, maybe 200 for example)

|

||||

|

||||

|

||||

|

||||

|

||||

### Network Interface Card

|

||||

|

||||

|

||||

docs/design.png

Binary file not shown (75 KiB before, 347 KiB after).

@@ -20,7 +20,7 @@ DEBIAN_DIR=$DPKG_DIR/DEBIAN
LQOS_DIR=$DPKG_DIR/opt/libreqos/src
ETC_DIR=$DPKG_DIR/etc
MOTD_DIR=$DPKG_DIR/etc/update-motd.d
LQOS_FILES="graphInfluxDB.py influxDBdashboardTemplate.json integrationCommon.py integrationRestHttp.py integrationSplynx.py integrationUISP.py ispConfig.example.py LibreQoS.py lqos.example lqTools.py mikrotikFindIPv6.py network.example.json pythonCheck.py README.md scheduler.py ShapedDevices.example.csv"
LQOS_FILES="graphInfluxDB.py influxDBdashboardTemplate.json integrationCommon.py integrationRestHttp.py integrationSplynx.py integrationUISP.py integrationSonar.py ispConfig.example.py LibreQoS.py lqos.example lqTools.py mikrotikFindIPv6.py network.example.json pythonCheck.py README.md scheduler.py ShapedDevices.example.csv"
LQOS_BIN_FILES="lqos_scheduler.service.example lqosd.service.example lqos_node_manager.service.example"
RUSTPROGS="lqosd lqtop xdp_iphash_to_cpu_cmdline xdp_pping lqos_node_manager lqusers lqos_setup lqos_map_perf"

src/integrationPowercode.py

@@ -0,0 +1,125 @@
from pythonCheck import checkPythonVersion
checkPythonVersion()
import requests
import warnings
from ispConfig import excludeSites, findIPv6usingMikrotik, bandwidthOverheadFactor, exceptionCPEs, powercode_api_key, powercode_api_url
from integrationCommon import isIpv4Permitted
import base64
from requests.auth import HTTPBasicAuth
if findIPv6usingMikrotik == True:
    from mikrotikFindIPv6 import pullMikrotikIPv6
from integrationCommon import NetworkGraph, NetworkNode, NodeType
from urllib3.exceptions import InsecureRequestWarning

def getCustomerInfo():
    headers= {'Content-Type': 'application/x-www-form-urlencoded'}
    url = powercode_api_url + ":444/api/preseem/index.php"
    data = {}
    data['apiKey'] = powercode_api_key
    data['action'] = 'list_customers'

    r = requests.post(url, data=data, headers=headers, verify=False, timeout=10)
    return r.json()

def getListServices():
    headers= {'Content-Type': 'application/x-www-form-urlencoded'}
    url = powercode_api_url + ":444/api/preseem/index.php"
    data = {}
    data['apiKey'] = powercode_api_key
    data['action'] = 'list_services'

    r = requests.post(url, data=data, headers=headers, verify=False, timeout=10)
    servicesDict = {}
    for service in r.json():
        if service['rate_down'] and service['rate_up']:
            servicesDict[service['id']] = {}
            servicesDict[service['id']]['downloadMbps'] = int(round(int(service['rate_down']) / 1000))
            servicesDict[service['id']]['uploadMbps'] = int(round(int(service['rate_up']) / 1000))
    return servicesDict
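getListServices converts each Powercode service's `rate_down`/`rate_up` to whole Mbps with `int(round(int(rate) / 1000))`, which treats the rates as kbps. A minimal standalone sketch of that conversion, assuming (as the division by 1000 implies) that the API returns numeric strings in kbps; `kbps_to_mbps` and `build_services` are hypothetical names. Note that Python 3's `round()` rounds halves to the nearest even integer, so 2500 kbps becomes 2 Mbps while 3500 kbps becomes 4.

```python
def kbps_to_mbps(rate: str) -> int:
    """Convert a kbps rate string to whole Mbps, mirroring
    int(round(int(rate) / 1000)) in getListServices."""
    # round() in Python 3 uses round-half-to-even
    return int(round(int(rate) / 1000))


def build_services(raw_services: list) -> dict:
    """Map service id -> {'downloadMbps', 'uploadMbps'}, skipping
    services whose download or upload rate is empty."""
    services = {}
    for service in raw_services:
        if service["rate_down"] and service["rate_up"]:
            services[service["id"]] = {
                "downloadMbps": kbps_to_mbps(service["rate_down"]),
                "uploadMbps": kbps_to_mbps(service["rate_up"]),
            }
    return services


sample = [
    {"id": "7", "rate_down": "25000", "rate_up": "2500"},
    {"id": "8", "rate_down": "", "rate_up": "1000"},
]
print(build_services(sample))
# {'7': {'downloadMbps': 25, 'uploadMbps': 2}}
```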


def createShaper():
    net = NetworkGraph()
    requests.packages.urllib3.disable_warnings(category=InsecureRequestWarning)
    print("Fetching data from Powercode")

    customerInfo = getCustomerInfo()

    customerIDs = []
    for customer in customerInfo:
        if customer['id'] != '1':
            if customer['id'] != '':
                if customer['status'] == 'Active':
                    customerIDint = int(customer['id'])
                    if customerIDint != 0:
                        if customerIDint != None:
                            if customerIDint not in customerIDs:
                                customerIDs.append(customerIDint)

    allServices = getListServices()

    acceptableEquipment = ['Customer Owned Equipment', 'Router', 'Customer Owned Equipment', 'Managed Routers', 'CPE']

    devicesByCustomerID = {}
    for customer in customerInfo:
        if customer['status'] == 'Active':
            chosenName = ''
            if customer['name'] != '':
                chosenName = customer['name']
            elif customer['company_name'] != '':
                chosenName = customer['company_name']
            else:
                chosenName = customer['id']
            for equipment in customer['equipment']:
                if equipment['type'] in acceptableEquipment:
                    if equipment['service_id'] in allServices:
                        device = {}
                        device['id'] = "c_" + customer['id'] + "_s_" + "_d_" + equipment['id']
                        device['name'] = equipment['name']
                        device['ipv4'] = equipment['ip_address']
                        device['mac'] = equipment['mac_address']
                        if customer['id'] not in devicesByCustomerID:
                            devicesByCustomerID[customer['id']] = {}
                            devicesByCustomerID[customer['id']]['name'] = chosenName
                            devicesByCustomerID[customer['id']]['downloadMbps'] = allServices[equipment['service_id']]['downloadMbps']
                            devicesByCustomerID[customer['id']]['uploadMbps'] = allServices[equipment['service_id']]['uploadMbps']
                        if 'devices' not in devicesByCustomerID[customer['id']]:
                            devicesByCustomerID[customer['id']]['devices'] = []
                        devicesByCustomerID[customer['id']]['devices'].append(device)

    for customerID in devicesByCustomerID:
        customer = NetworkNode(
            type=NodeType.client,
            id=customerID,
            displayName=devicesByCustomerID[customerID]['name'],
            address='',
            customerName=devicesByCustomerID[customerID]['name'],
            download=devicesByCustomerID[customerID]['downloadMbps'],
            upload=devicesByCustomerID[customerID]['uploadMbps'],
        )
        net.addRawNode(customer)
        for device in devicesByCustomerID[customerID]['devices']:
            newDevice = NetworkNode(
                id=device['id'],
                displayName=device["name"],
                type=NodeType.device,
                parentId=customerID,
                mac=device["mac"],
                ipv4=[device['ipv4']],
                ipv6=[]
            )
            net.addRawNode(newDevice)
    print("Imported " + str(len(devicesByCustomerID)) + " customers")
    net.prepareTree()
    net.plotNetworkGraph(False)
    if net.doesNetworkJsonExist():
        print("network.json already exists. Leaving in-place.")
    else:
        net.createNetworkJson()
    net.createShapedDevices()
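createShaper groups each active customer's acceptable equipment under that customer, taking the display name from `name`, then `company_name`, then the customer id, and the plan speeds from the service the equipment is attached to. The sketch below restates that grouping with `dict.setdefault`; the field names and the device id format are copied from the code above, while `group_devices`, `choose_name` and the sample data are hypothetical. One small difference: the `or` chain also falls through on `None`, not just on empty strings.

```python
ACCEPTABLE = {"Customer Owned Equipment", "Router", "Managed Routers", "CPE"}


def choose_name(customer: dict) -> str:
    # Same fallback order as createShaper: name, company_name, then id
    return customer["name"] or customer["company_name"] or customer["id"]


def group_devices(customers: list, services: dict) -> dict:
    """Group acceptable equipment of active customers by customer id."""
    grouped = {}
    for customer in customers:
        if customer["status"] != "Active":
            continue
        for equipment in customer["equipment"]:
            if equipment["type"] not in ACCEPTABLE:
                continue
            plan = services.get(equipment["service_id"])
            if plan is None:
                continue  # service had no usable rate
            # The first matching device fixes the customer's name and plan
            entry = grouped.setdefault(customer["id"], {
                "name": choose_name(customer),
                "downloadMbps": plan["downloadMbps"],
                "uploadMbps": plan["uploadMbps"],
                "devices": [],
            })
            entry["devices"].append({
                # Same id format as createShaper above
                "id": "c_" + customer["id"] + "_s_" + "_d_" + equipment["id"],
                "name": equipment["name"],
                "ipv4": equipment["ip_address"],
                "mac": equipment["mac_address"],
            })
    return grouped
```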


def importFromPowercode():
    #createNetworkJSON()
    createShaper()

if __name__ == '__main__':
    importFromPowercode()

src/integrationSonar.py

@@ -0,0 +1,326 @@
from pythonCheck import checkPythonVersion
checkPythonVersion()
import requests
import subprocess
from ispConfig import sonar_api_url,sonar_api_key,sonar_airmax_ap_model_ids,sonar_active_status_ids,sonar_ltu_ap_model_ids,snmp_community
all_models = sonar_airmax_ap_model_ids + sonar_ltu_ap_model_ids
from integrationCommon import NetworkGraph, NetworkNode, NodeType
from multiprocessing.pool import ThreadPool

### Requirements
# snmpwalk needs to be installed. "sudo apt-get install snmp"

### Assumptions
# Network sites are in Sonar and the APs are assigned to those sites.
# The MAC address is a required and primary field for all of the radios/routers.
# There is 1 IP assigned to each AP.
# For customers, IPs are assigned to equipment and not directly to the customer. I can add that later if people actually do that.
# Service plans can be expressed as an integer in Mbps. So 20Mbps and not 20.3Mbps or something like that.
# Every customer should have a data service. I'm currently not adding them if they don't.

### Notes
# I need to deal with other types of APs in the future. I'm currently testing with Prism AC Gen2s, Rocket M5s, and LTU Rockets.
# There should probably be a log file somewhere and output status to the Web UI.
# If snmp fails to get the name of the AP then it's just called "Not found via snmp". We won't see that happen unless it is able to get the connected cpe and not the name.


def sonarRequest(query,variables={}):

    r = requests.post(sonar_api_url, json={'query': query, 'variables': variables}, headers={'Authorization': 'Bearer ' + sonar_api_key}, timeout=10)
    r_json = r.json()

    # Sonar responses look like this: {"data": {"accounts": {"entities": [{"id": '1'},{"id": 2}]}}}
    # I just want to return the list so I need to find what field we're querying.
    field = list(r_json['data'].keys())[0]
    sonar_list = r_json['data'][field]['entities']
    return sonar_list
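sonarRequest returns the `entities` list under whichever top-level field the GraphQL query selected. The queries below request only `"page": 1` with `"records_per_page": 5`; if Sonar applies that limit as written, larger installs would need to walk pages. A sketch of both steps under stated assumptions: the `errors` check follows the general GraphQL convention rather than documented Sonar behavior, treating a short page as the last page is an assumption, and `fetch_all`, `request_page` and the default page size of 100 are hypothetical.

```python
def extract_entities(r_json: dict) -> list:
    """Return the 'entities' list of the first field under 'data'."""
    # GraphQL servers conventionally report failures in an "errors" list
    if r_json.get("errors"):
        raise RuntimeError(f"GraphQL error: {r_json['errors']}")
    field = next(iter(r_json["data"]))  # e.g. "accounts"
    return r_json["data"][field]["entities"]


def fetch_all(request_page, base_variables: dict, records_per_page: int = 100) -> list:
    """Collect entities across pages until a page comes back short.

    request_page(variables) is a hypothetical callable that posts the query
    with `variables` and returns the parsed JSON response.
    """
    entities, page = [], 1
    while True:
        # Copy the other query variables and set the paginator for this page
        variables = dict(base_variables,
                         pages={"records_per_page": records_per_page, "page": page})
        batch = extract_entities(request_page(variables))
        entities.extend(batch)
        if len(batch) < records_per_page:
            return entities
        page += 1
```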


def getActiveStatuses():
    if not sonar_active_status_ids:
        query = """query getActiveStatuses {
            account_statuses (activates_account: true) {
                entities {
                    id
                    activates_account
                }
            }
        }"""

        statuses_from_sonar = sonarRequest(query)
        status_ids = []
        for status in statuses_from_sonar:
            status_ids.append(status['id'])
        return status_ids
    else:
        return sonar_active_status_ids

# Sometimes the IP will be under the field data for an item and sometimes it will be assigned to the inventory item itself.
def findIPs(inventory_item):
    ips = []
    for ip in inventory_item['inventory_model_field_data']['entities'][0]['ip_assignments']['entities']:
        ips.append(ip['subnet'])
    for ip in inventory_item['ip_assignments']['entities']:
        ips.append(ip['subnet'])
    return ips
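findIPs reads IPs from two places: the first `inventory_model_field_data` entity and the item's own `ip_assignments`. Because it indexes `entities[0]` directly, an item with no field data raises `IndexError`, and a GraphQL `null` raises `TypeError`. A defensive variant, as a sketch that assumes a missing, empty or null list should simply contribute no IPs; `find_ips` and `_entities` are hypothetical names.

```python
def _entities(obj, key: str) -> list:
    """Return obj[key]['entities'], treating missing keys or nulls as empty."""
    return ((obj or {}).get(key) or {}).get("entities") or []


def find_ips(inventory_item: dict) -> list:
    ips = []
    field_entities = _entities(inventory_item, "inventory_model_field_data")
    if field_entities:
        # IPs attached to the first field-data entry
        ips.extend(ip["subnet"] for ip in _entities(field_entities[0], "ip_assignments"))
    # IPs assigned to the inventory item itself
    ips.extend(ip["subnet"] for ip in _entities(inventory_item, "ip_assignments"))
    return ips
```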


def getSitesAndAps():
    query = """query getSitesAndAps($pages: Paginator, $rr_ap_models: ReverseRelationFilter, $ap_models: Search){
    network_sites (paginator: $pages,reverse_relation_filters: [$rr_ap_models]) {
        entities {
            name
            id
            inventory_items (search: [$ap_models]) {
                entities {
                    id
                    inventory_model_id
                    inventory_model_field_data {
                        entities {
                            ip_assignments {
                                entities {
                                    subnet
                                }
                            }
                        }
                    }
                    ip_assignments {
                        entities {
                            subnet
                        }
                    }
                }
            }
        }
    }
}"""

    search_aps = []
    for ap_id in all_models:
        search_aps.append({
            "attribute": "inventory_model_id",
            "operator": "EQ",
            "search_value": ap_id
        })

    variables = {"pages":
        {
            "records_per_page": 5,
            "page": 1
        },
        "rr_ap_models": {
            "relation": "inventory_items",
            "search": [{
                "integer_fields": search_aps
            }]
        },
        "ap_models": {
            "integer_fields": search_aps
        }
    }

    sites_and_aps = sonarRequest(query,variables)
    # This should only return sites that have equipment on them whose model is in sonar_airmax_ap_model_ids or sonar_ltu_ap_model_ids in ispConfig.py
    sites = []
    aps = []
    for site in sites_and_aps:
        for item in site['inventory_items']['entities']:
            ips = findIPs(item)
            if ips:
                aps.append({'parent': f"site_{site['id']}",'id': f"ap_{item['id']}", 'model': item['inventory_model_id'], 'ip': ips[0]}) # Using the first IP in the list here because each AP should only have 1 IP assigned.

        if aps: #We don't care about sites that have equipment but no IP addresses.
            sites.append({'id': f"site_{site['id']}", 'name': site['name']})
    return sites, aps
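getSitesAndAps turns each site into a `site_<id>` node and each AP item that has an IP into an `ap_<id>` node, and its comment says sites without AP IPs should be skipped. As listed, though, `aps` is one list shared by every site in the loop, so once any site has contributed an AP, later sites pass the `if aps:` check even with no AP IPs of their own. A sketch that makes the decision per site; `split_sites_and_aps` is a hypothetical name, and `find_ips` is passed in (standing in for `findIPs`) so the example runs without Sonar.

```python
def split_sites_and_aps(sites_and_aps: list, find_ips) -> tuple:
    """Return (sites, aps), keeping only sites with at least one AP that has an IP."""
    sites, aps = [], []
    for site in sites_and_aps:
        site_aps = []
        for item in site["inventory_items"]["entities"]:
            ips = find_ips(item)
            if ips:
                site_aps.append({
                    "parent": f"site_{site['id']}",
                    "id": f"ap_{item['id']}",
                    "model": item["inventory_model_id"],
                    "ip": ips[0],  # each AP is assumed to have a single IP
                })
        if site_aps:  # decided per site, not on the running total
            sites.append({"id": f"site_{site['id']}", "name": site["name"]})
            aps.extend(site_aps)
    return sites, aps
```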


def getAccounts(sonar_active_status_ids):
    query = """query getAccounts ($pages: Paginator, $account_search: Search, $data: ReverseRelationFilter,$primary: ReverseRelationFilter) {
    accounts (paginator: $pages,search: [$account_search]) {
        entities {
            account_status_id
            id
            name
            account_services (reverse_relation_filters: [$data]) {
                entities {
                    service {
                        data_service_detail {
                            download_speed_kilobits_per_second
                            upload_speed_kilobits_per_second
                        }
                    }
                }
            }
            addresses {
                entities {
                    line1
                    line2
                    city
                    subdivision
                    inventory_items {
                        entities {
                            id
                            inventory_model {
                                name
                            }
                            inventory_model_field_data (reverse_relation_filters: [$primary]) {
                                entities {
                                    value
                                    ip_assignments {
                                        entities {
                                            subnet
                                        }
                                    }
                                }
                            }
                            ip_assignments {
                                entities {
                                    subnet
                                }
                            }
                        }
                    }
                }
            }
        }
    }
}"""

    active_status_ids = []
    for status_id in sonar_active_status_ids:
        active_status_ids.append({
            "attribute": "account_status_id",
            "operator": "EQ",
            "search_value": status_id
        })

    variables = {"pages":
        {
            "records_per_page": 5,
            "page": 1
        },
        "account_search": {
            "integer_fields": active_status_ids
        },
        "data": {
            "relation": "service",
            "search": [{
                "string_fields": [{
                    "attribute": "type",
                    "match": True,
                    "search_value": "DATA"
                }]
            }]
        },
        "primary": {
            "relation": "inventory_model_field",
            "search": [{
                "boolean_fields": [{
                    "attribute": "primary",
                    "search_value": True
                }]
            }]
        }
    }

    accounts_from_sonar = sonarRequest(query,variables)
    accounts = []
    for account in accounts_from_sonar:
        # We need to make sure the account has an address because Sonar assignments go account -> address (only 1 per account) -> equipment -> ip assignments unless the IP is assigned to the account directly.
        if account['addresses']['entities']:
            line1 = account['addresses']['entities'][0]['line1']
            line2 = account['addresses']['entities'][0]['line2']
            city = account['addresses']['entities'][0]['city']
            state = account['addresses']['entities'][0]['subdivision'][-2:]
            address = f"{line1},{f' {line2},' if line2 else ''} {city}, {state}"
            devices = []
            for item in account['addresses']['entities'][0]['inventory_items']['entities']:
                devices.append({'id': item['id'], 'name': item['inventory_model']['name'], 'ips': findIPs(item), 'mac': item['inventory_model_field_data']['entities'][0]['value']})
            if account['account_services']['entities'] and devices: # Make sure there is a data plan and devices on the account.
                download = int(account['account_services']['entities'][0]['service']['data_service_detail']['download_speed_kilobits_per_second']/1000)
                upload = int(account['account_services']['entities'][0]['service']['data_service_detail']['upload_speed_kilobits_per_second']/1000)
                if download < 2:
                    download = 2
                if upload < 2:
                    upload = 2
                accounts.append({'id': account['id'],'name': account['name'], 'address': address, 'download': download, 'upload': upload ,'devices': devices})
    return accounts
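For each account, getAccounts builds a one-line address from the first address entity and converts the plan's kbps speeds to Mbps with `int(x/1000)`, which truncates, then raises anything below 2 Mbps to 2. A sketch of those two steps as pure functions; `format_address` and `plan_mbps` are hypothetical names, and the `US_TX`-style `subdivision` value is an assumption implied by the `[-2:]` slice.

```python
def format_address(addr: dict) -> str:
    """One-line address in the same shape getAccounts builds."""
    state = addr["subdivision"][-2:]  # assumes codes like "US_TX"
    line2 = f" {addr['line2']}," if addr["line2"] else ""
    return f"{addr['line1']},{line2} {addr['city']}, {state}"


def plan_mbps(kbps: int, floor: int = 2) -> int:
    """kbps -> Mbps, truncating like int(kbps/1000), never below `floor`."""
    return max(int(kbps / 1000), floor)


print(format_address({"line1": "1 Main St", "line2": "Apt 4",
                      "city": "Austin", "subdivision": "US_TX"}))
# 1 Main St, Apt 4, Austin, TX
```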


def mapApCpeMacs(ap):
    macs = []
    macs_output = None
    if ap['model'] in sonar_airmax_ap_model_ids: #Tested with Prism Gen2AC and Rocket M5.
        macs_output = subprocess.run(['snmpwalk', '-Os', '-v', '1', '-c', snmp_community, ap['ip'], '.1.3.6.1.4.1.41112.1.4.7.1.1.1'], capture_output=True).stdout.decode('utf8')
    if ap['model'] in sonar_ltu_ap_model_ids: #Tested with LTU Rocket
        macs_output = subprocess.run(['snmpwalk', '-Os', '-v', '1', '-c', snmp_community, ap['ip'], '.1.3.6.1.4.1.41112.1.10.1.4.1.11'], capture_output=True).stdout.decode('utf8')
    if macs_output:
        name_output = subprocess.run(['snmpwalk', '-Os', '-v', '1', '-c', snmp_community, ap['ip'], '.1.3.6.1.2.1.1.5.0'], capture_output=True).stdout.decode('utf8')
        ap['name'] = name_output[name_output.find('"')+1:name_output.rfind('"')]
        for mac_line in macs_output.splitlines():
            mac = mac_line[mac_line.find(':')+1:]
            mac = mac.strip().replace(' ',':')
            macs.append(mac)
    else:
        ap['name'] = 'Not found via snmp'
    ap['cpe_macs'] = macs

    return ap
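mapApCpeMacs turns each snmpwalk output line into a MAC by taking everything after the first colon and replacing spaces with colons. That fits lines shaped like `... = Hex-STRING: 24 5A 4C 12 34 56` (an illustrative sample; exact output depends on the net-snmp version, the `-O` options and the device). Because mapMacAP then compares MAC strings exactly, normalizing case and separators on both sides may avoid missed matches if Sonar stores MACs differently. A sketch with hypothetical names; unlike the original, it skips lines with no colon instead of keeping the whole line.

```python
def normalize_mac(mac: str) -> str:
    # Uppercase, colon-separated form so comparisons ignore case and dashes
    return mac.strip().upper().replace("-", ":")


def parse_snmp_macs(output: str) -> list:
    """Extract MACs from snmpwalk lines such as
    'enterprises.41112.1.4.7.1.1.1.1 = Hex-STRING: 24 5A 4C 12 34 56 '."""
    macs = []
    for line in output.splitlines():
        if ":" not in line:
            continue  # blank or unexpected line
        value = line[line.find(":") + 1:]  # text after 'Hex-STRING:'
        macs.append(normalize_mac(value.strip().replace(" ", ":")))
    return macs
```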


def mapMacAP(mac,aps):
    for ap in aps:
        for cpe_mac in ap['cpe_macs']:
            if cpe_mac == mac:
                return ap['id']
    return None
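mapMacAP scans every AP's `cpe_macs` list for each device MAC, so each lookup costs time proportional to the total number of CPE MACs. Building a dictionary once gives the same first-match answer with a constant-time lookup per device. A sketch; `build_mac_index` is a hypothetical helper, not part of the integration.

```python
def build_mac_index(aps: list) -> dict:
    """Map each CPE MAC to the id of the first AP that reports it."""
    index = {}
    for ap in aps:
        for cpe_mac in ap.get("cpe_macs", []):
            # setdefault keeps the first AP seen, matching mapMacAP's early return
            index.setdefault(cpe_mac, ap["id"])
    return index


aps = [{"id": "ap_1", "cpe_macs": ["AA", "BB"]},
       {"id": "ap_2", "cpe_macs": ["BB", "CC"]}]
index = build_mac_index(aps)
print(index.get("BB"), index.get("ZZ"))  # ap_1 None
```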


def createShaper():
    net = NetworkGraph()

    #Get active statuses from Sonar if necessary.
    sonar_active_status_ids = getActiveStatuses()

    # Get Network Sites and Access Points from Sonar.
    sites, aps = getSitesAndAps()

    # Get Customer equipment and IPs.
    accounts = getAccounts(sonar_active_status_ids)

    # Get CPE macs on each AP.
    pool = ThreadPool(30) #30 is arbitrary at the moment.
    for i, ap in enumerate(pool.map(mapApCpeMacs, aps)):
        ap = aps[i]
    pool.close()
    pool.join()

    # Update customers with the AP to which they are connected.
    for account in accounts:
        for device in account['devices']:
            account['parent'] = mapMacAP(device['mac'],aps)
            if account['parent']:
                break

    for site in sites:
        net.addRawNode(NetworkNode(id=site['id'], displayName=site['name'], parentId="", type=NodeType.site))

    for ap in aps:
        if ap['cpe_macs']: # I don't think we care about APs with no customers.
            net.addRawNode(NetworkNode(id=ap['id'], displayName=ap['name'], parentId=ap['parent'], type=NodeType.ap))

    for account in accounts:
        if account['parent']:
            customer = NetworkNode(id=account['id'],displayName=account['name'],parentId=account['parent'],type=NodeType.client,download=account['download'], upload=account['upload'], address=account['address'])
        else:
            customer = NetworkNode(id=account['id'],displayName=account['name'],type=NodeType.client,download=account['download'], upload=account['upload'], address=account['address'])
        net.addRawNode(customer)

        for device in account['devices']:
            libre_device = NetworkNode(id=device['id'], displayName=device['name'],parentId=account['id'],type=NodeType.device, ipv4=device['ips'],ipv6=[],mac=device['mac'])
            net.addRawNode(libre_device)

    net.prepareTree()
    net.plotNetworkGraph(False)
    net.createNetworkJson()
    net.createShapedDevices()
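createShaper polls every AP over SNMP through a `ThreadPool` of 30 threads. `pool.map` blocks until every call has finished and returns the results in input order; because `mapApCpeMacs` updates each AP dict in place and returns it, the loop that reassigns `ap = aps[i]` changes nothing, and `aps` already holds the results. The same step as a hypothetical helper, with a `with` block managing the pool:

```python
from multiprocessing.pool import ThreadPool


def poll_all(aps: list, poll_one, workers: int = 30) -> list:
    """Run poll_one(ap) for every AP concurrently; results keep input order."""
    with ThreadPool(workers) as pool:
        # map() blocks until all tasks finish, so the terminate() that
        # runs when the with block exits cannot interrupt any work
        return pool.map(poll_one, aps)
```

Called as `aps = poll_all(aps, mapApCpeMacs)`, this would replace the `enumerate` loop and the explicit `close()`/`join()` pair.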


def importFromSonar():
    createShaper()

if __name__ == '__main__':
    importFromSonar()

@@ -51,7 +51,6 @@ def getRouters(headers):
for router in data:
routerID = router['id']
ipForRouter[routerID] = router['ip']

print("Router IPs found: " + str(len(ipForRouter)))
return ipForRouter

@@ -63,6 +62,30 @@ def combineAddress(json):
else:
return json["street_1"] + " " + json["city"] + " " + json["zip_code"]

def getAllServices(headers):
services = spylnxRequest("admin/customers/customer/0/internet-services?main_attributes%5Bstatus%5D=active", headers)
return services

def getAllIPs(headers):
ipv4ByCustomerID = {}
ipv6ByCustomerID = {}
allIPv4 = spylnxRequest("admin/networking/ipv4-ip?main_attributes%5Bis_used%5D=1", headers)
allIPv6 = spylnxRequest("admin/networking/ipv6-ip", headers)
for ipv4 in allIPv4:
if ipv4['customer_id'] not in ipv4ByCustomerID:
ipv4ByCustomerID[ipv4['customer_id']] = []
temp = ipv4ByCustomerID[ipv4['customer_id']]
temp.append(ipv4['ip'])
ipv4ByCustomerID[ipv4['customer_id']] = temp
for ipv6 in allIPv6:
if ipv6['is_used'] == 1:
if ipv6['customer_id'] not in ipv6ByCustomerID:
ipv6ByCustomerID[ipv6['customer_id']] = []
temp = ipv6ByCustomerID[ipv6['customer_id']]
temp.append(ipv6['ip'])
ipv6ByCustomerID[ipv6['customer_id']] = temp
return (ipv4ByCustomerID, ipv6ByCustomerID)

def createShaper():
net = NetworkGraph()
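getAllIPs groups used IPv4 and IPv6 addresses by `customer_id`, creating a list per key and appending through a temporary variable. The same grouping with `collections.defaultdict`, as a sketch: the `is_used == 1` filter for IPv6 mirrors the code above, and the IPv4 list is assumed to be pre-filtered by the `main_attributes[is_used]=1` query parameter. `group_ips` is a hypothetical name.

```python
from collections import defaultdict


def group_ips(all_ipv4: list, all_ipv6: list) -> tuple:
    """Return (ipv4_by_customer, ipv6_by_customer) as plain dicts of lists."""
    ipv4_by_customer = defaultdict(list)
    ipv6_by_customer = defaultdict(list)
    for entry in all_ipv4:  # already limited to used addresses by the API query
        ipv4_by_customer[entry["customer_id"]].append(entry["ip"])
    for entry in all_ipv6:
        if entry["is_used"] == 1:
            ipv6_by_customer[entry["customer_id"]].append(entry["ip"])
    # Convert back so missing customers raise KeyError / return None as usual
    return dict(ipv4_by_customer), dict(ipv6_by_customer)
```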


@@ -71,17 +94,28 @@ def createShaper():
tariff, downloadForTariffID, uploadForTariffID = getTariffs(headers)
customers = getCustomers(headers)
ipForRouter = getRouters(headers)

# It's not very clear how a service is meant to handle multiple
# devices on a shared tariff. Creating each service as a combined
# entity including the customer, to be on the safe side.
allServices = getAllServices(headers)
ipv4ByCustomerID, ipv6ByCustomerID = getAllIPs(headers)

allServicesDict = {}
for serviceItem in allServices:
if (serviceItem['status'] == 'active'):
if serviceItem["customer_id"] not in allServicesDict:
allServicesDict[serviceItem["customer_id"]] = []
temp = allServicesDict[serviceItem["customer_id"]]
temp.append(serviceItem)
allServicesDict[serviceItem["customer_id"]] = temp

#It's not very clear how a service is meant to handle multiple
#devices on a shared tariff. Creating each service as a combined
#entity including the customer, to be on the safe side.
for customerJson in customers:
if customerJson['status'] == 'active':
services = spylnxRequest("admin/customers/customer/" + customerJson["id"] + "/internet-services", headers)
for serviceJson in services:
if (serviceJson['status'] == 'active'):
combinedId = "c_" + str(customerJson["id"]) + "_s_" + str(serviceJson["id"])
tariff_id = serviceJson['tariff_id']
if customerJson['id'] in allServicesDict:
servicesForCustomer = allServicesDict[customerJson['id']]
for service in servicesForCustomer:
combinedId = "c_" + str(customerJson["id"]) + "_s_" + str(service["id"])
tariff_id = service['tariff_id']
customer = NetworkNode(
type=NodeType.client,
id=combinedId,

@@ -93,39 +127,42 @@ def createShaper():
)
net.addRawNode(customer)

ipv4 = ''
ipv6 = ''
routerID = serviceJson['router_id']
ipv4 = []
ipv6 = []
routerID = service['router_id']

# If not "Taking IPv4" (Router will assign IP), then use router's set IP
# Debug
taking_ipv4 = int(serviceJson['taking_ipv4'])
taking_ipv4 = int(service['taking_ipv4'])
if taking_ipv4 == 0:
try:
ipv4 = ipForRouter[routerID]
except:
warnings.warn("taking_ipv4 was 0 for client " + combinedId + " but router ID was not found in ipForRouter", stacklevel=2)
ipv4 = ''
if routerID in ipForRouter:
ipv4 = [ipForRouter[routerID]]

elif taking_ipv4 == 1:
ipv4 = serviceJson['ipv4']
ipv4 = [service['ipv4']]
if len(ipv4) == 0:
#Only do this if single service for a customer
if len(servicesForCustomer) == 1:
if customerJson['id'] in ipv4ByCustomerID:
ipv4 = ipv4ByCustomerID[customerJson['id']]

# If not "Taking IPv6" (Router will assign IP), then use router's set IP
if isinstance(serviceJson['taking_ipv6'], str):
taking_ipv6 = int(serviceJson['taking_ipv6'])
if isinstance(service['taking_ipv6'], str):
taking_ipv6 = int(service['taking_ipv6'])
else:
taking_ipv6 = serviceJson['taking_ipv6']
taking_ipv6 = service['taking_ipv6']
if taking_ipv6 == 0:
ipv6 = ''
ipv6 = []
elif taking_ipv6 == 1:
ipv6 = serviceJson['ipv6']
ipv6 = [service['ipv6']]

device = NetworkNode(
id=combinedId+"_d" + str(serviceJson["id"]),
displayName=serviceJson["id"],
id=combinedId+"_d" + str(service["id"]),
displayName=service["id"],
type=NodeType.device,
parentId=combinedId,
mac=serviceJson["mac"],
ipv4=[ipv4],
ipv6=[ipv6]
mac=service["mac"],
ipv4=ipv4,
ipv6=ipv6
)
net.addRawNode(device)
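In the updated loop, a device's IPv4 list is resolved in order. If `taking_ipv4` is 0, the router's IP is used when the router ID is known. If it is 1, the service's own `ipv4` is used. If that still leaves an empty list and the customer has exactly one service, the code falls back to every IPv4 address Splynx reports for the customer. A sketch of that decision as a pure function; `resolve_ipv4` and its parameter names are hypothetical.

```python
def resolve_ipv4(service: dict, ip_for_router: dict, ipv4_by_customer: dict,
                 customer_id, service_count: int) -> list:
    ipv4 = []
    taking_ipv4 = int(service["taking_ipv4"])
    if taking_ipv4 == 0:
        # The router assigns the address: use the router's configured IP if known
        if service["router_id"] in ip_for_router:
            ipv4 = [ip_for_router[service["router_id"]]]
    elif taking_ipv4 == 1:
        ipv4 = [service["ipv4"]]
    if not ipv4 and service_count == 1:
        # Only unambiguous when the customer has a single service
        ipv4 = ipv4_by_customer.get(customer_id, [])
    return ipv4
```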


@@ -282,7 +282,7 @@ def loadRoutingOverrides():
with open("integrationUISProutes.csv", "r") as f:
reader = csv.reader(f)
for row in reader:
if not row[0].startswith("#") and len(row) == 3:
if row and not row[0].startswith("#") and len(row) == 3:
fromSite, toSite, cost = row
overrides[fromSite.strip() + "->" + toSite.strip()] = int(cost)
#print(overrides)
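The loadRoutingOverrides change adds a `row and` guard. `csv.reader` yields an empty list for a blank line, so the old `row[0]` lookup raised `IndexError` on any blank line in the file. A sketch of the same parsing run on an in-memory string, with `io.StringIO` standing in for `integrationUISProutes.csv`; `parse_route_overrides` and the sample rows are hypothetical.

```python
import csv
import io


def parse_route_overrides(text: str) -> dict:
    """Parse 'fromSite, toSite, cost' rows, skipping blank lines and # comments."""
    overrides = {}
    for row in csv.reader(io.StringIO(text)):
        # A blank line yields [], so test `row` before indexing row[0]
        if row and not row[0].startswith("#") and len(row) == 3:
            from_site, to_site, cost = row
            overrides[from_site.strip() + "->" + to_site.strip()] = int(cost)
    return overrides


sample = "# From Site, To Site, Cost\nSite A, Site B, 100\n\nSite B, Site C, 50\n"
print(parse_route_overrides(sample))
# {'Site A->Site B': 100, 'Site B->Site C': 50}
```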


@@ -77,6 +77,12 @@ overwriteNetworkJSONalways = False
ignoreSubnets = ['192.168.0.0/16']
allowedSubnets = ['100.64.0.0/10']

# Powercode Integration
automaticImportPowercode = False
powercode_api_key = ''
# Everything before :444/api/ in your Powercode instance URL
powercode_api_url = ''

# Splynx Integration
automaticImportSplynx = False
splynx_api_key = ''

@@ -84,6 +90,18 @@ splynx_api_secret = ''
# Everything before /api/2.0/ on your Splynx instance
splynx_api_url = 'https://YOUR_URL.splynx.app'

# Sonar Integration
automaticImportSonar = False
sonar_api_key = ''
sonar_api_url = '' # ex 'https://company.sonar.software/api/graphql'
# If there are radios in these lists, we will try to get the clients using snmp. This requires snmpwalk to be installed on the server. You can use "sudo apt-get install snmp" for that. You will also need to fill in the snmp_community.
sonar_airmax_ap_model_ids = [] # ex ['29','43']
sonar_ltu_ap_model_ids = [] # ex ['4']
snmp_community = ''
# This is for all account statuses where we should be applying QoS. If you leave it blank, we'll use any account status marked with "Activates Account" in Sonar.
sonar_active_status_ids = []


# UISP integration
automaticImportUISP = False
uispAuthToken = ''

@@ -7,6 +7,18 @@ if automatic_import_uisp():
from integrationUISP import importFromUISP
if automatic_import_splynx():
from integrationSplynx import importFromSplynx
try:
from ispConfig import automaticImportPowercode
except:
automaticImportPowercode = False
if automaticImportPowercode:
from integrationPowercode import importFromPowercode
try:
from ispConfig import automaticImportSonar
except:
automaticImportSonar = False
if automaticImportSonar:
from integrationSonar import importFromSonar
from apscheduler.schedulers.background import BlockingScheduler
from apscheduler.executors.pool import ThreadPoolExecutor

@@ -23,6 +35,16 @@ def importFromCRM():
importFromSplynx()
except:
print("Failed to import from Splynx")
elif automaticImportPowercode:
try:
importFromPowercode()
except:
print("Failed to import from Powercode")
elif automaticImportSonar:
try:
importFromSonar()
except:
print("Failed to import from Sonar")

#def graphHandler():
# try:
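importFromCRM runs the first enabled integration in an `if`/`elif` chain and catches every failure with a bare `except:`, printing only which import failed. A sketch of the same first-enabled-wins dispatch that also prints the traceback. The `(name, enabled, func)` tuples are hypothetical stand-ins for the `automaticImport*` flags and import functions, and `except Exception` (rather than a bare `except:`) lets `KeyboardInterrupt` and `SystemExit` propagate.

```python
import traceback


def import_from_crm(importers: list) -> None:
    """Run the first enabled importer; importers is a list of
    (name, enabled, func) tuples in priority order."""
    for name, enabled, func in importers:
        if not enabled:
            continue
        try:
            func()
        except Exception:
            # Keep the scheduler running but record why the import failed
            print(f"Failed to import from {name}")
            traceback.print_exc()
        return  # like the elif chain, at most one integration runs
```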
