mirror of

https://github.com/LibreQoE/LibreQoS.git

synced 2025-02-25 18:55:32 -06:00

Merge pull request #564 from LibreQoE/ui_stability

UI Stability, Memory Management and More - B3 Work List

This commit is contained in:

commit

90c1b1823f

4

.github/workflows/rust.yml

vendored


@ -7,8 +7,8 @@ jobs:

|

||||

strategy:

|

||||

matrix:

|

||||

os: [ubuntu-latest]

|

||||

runs-on: ${{ matrix.os }}

|

||||

|

||||

runs-on: [ self-hosted, ubuntu-latest ]

|

||||

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- name: Install dependencies

|

||||

|

||||

@ -29,6 +29,13 @@ Please support the continued development of LibreQoS by sponsoring us via [GitHu

|

||||

|

||||

Our Zulip chat server is available at [https://chat.libreqos.io/join/fvu3cerayyaumo377xwvpev6/](https://chat.libreqos.io/join/fvu3cerayyaumo377xwvpev6/).

|

||||

|

||||

## LibreQoS Social

|

||||

- https://www.youtube.com/@LibreQoS

|

||||

- https://www.linkedin.com/company/libreqos/

|

||||

- https://www.facebook.com/libreqos

|

||||

- https://twitter.com/libreqos

|

||||

- https://fosstodon.org/@LibreQoS/

|

||||

|

||||

## Long-Term Stats (LTS)

|

||||

|

||||

Long-Term Stats (LTS) is an analytics service built for LibreQoS that revolutionizes the way you track and analyze your network.

|

||||

|

||||

@ -85,44 +85,3 @@ Then run

|

||||

```shell

|

||||

sudo netplan apply

|

||||

```

|

||||

|

||||

### Install InfluxDB (Optional but Recommended)

|

||||

|

||||

InfluxDB allows you to track long-term stats beyond what lqos_node_manager can so far.

|

||||

|

||||

To install InfluxDB 2.x., follow the steps at [https://portal.influxdata.com/downloads/](https://portal.influxdata.com/downloads/).

|

||||

|

||||

For high throughput networks (5+ Gbps) you will likely want to install InfluxDB to a separate machine or VM from that of the LibreQoS server to avoid CPU load.

|

||||

|

||||

Restart your system that is running InfluxDB

|

||||

|

||||

```shell

|

||||

sudo reboot

|

||||

```

|

||||

|

||||

Check to ensure InfluxDB is running properly. This command should show "Active: active" with green dot.

|

||||

|

||||

```shell

|

||||

sudo service influxdb status

|

||||

```

|

||||

|

||||

Check that Web UI is running:<br>

|

||||

|

||||

```shell

|

||||

http://SERVER_IP_ADDRESS:8086

|

||||

```

|

||||

|

||||

Create Bucket

|

||||

|

||||

- Data > Buckets > Create Bucket

|

||||

|

||||

Call the bucket `libreqos` (all lowercase).<br>

|

||||

Have it store as many days of data as you prefer. 7 days is standard.<br>

|

||||

Import Dashboard `Boards > Create Dashboard > Import Dashboard`

|

||||

Then upload the file [influxDBdashboardTemplate.json](https://github.com/rchac/LibreQoS/blob/main/src/influxDBdashboardTemplate.json) to InfluxDB.

|

||||

|

||||

[Generate an InfluxDB Token](https://docs.influxdata.com/influxdb/cloud/security/tokens/create-token/). It will be added to ispConfig.py in the following steps.

|

||||

|

||||

```{note}

|

||||

You may want to install a reverse proxy in front of the web interfaces for influx and lqos. Setting these up is outside the scope of this document, but some examples are [Caddy](https://caddyserver.com/), and Nginx [Proxy Manager](https://nginxproxymanager.com/)

|

||||

```

|

||||

|

||||

@ -1,6 +1,7 @@

|

||||

## System Requirements

|

||||

### VM or physical server

|

||||

* For VMs, NIC passthrough is required for optimal throughput and latency (XDP vs generic XDP). Using Virtio / bridging is much slower than NIC passthrough. Virtio / bridging should not be used for large amounts of traffic.

|

||||

### Physical server

|

||||

* LibreQoS requires a dedicated, physical x86_64 device.

|

||||

* While it is technically possible to run LibreQoS in VM, it is not officially supported, and comes at a significant 30% performance penalty (even when using NIC passthrough). For VMs, NIC passthrough is required for throughput above 1 Gbps (XDP vs generic XDP).

|

||||

|

||||

### CPU

|

||||

* 2 or more CPU cores

|

||||

@ -14,47 +15,38 @@ Single-thread CPU performance will determine the max throughput of a single HTB

|

||||

| 250 Mbps | 1250 |

|

||||

| 500 Mbps | 1500 |

|

||||

| 1 Gbps | 2000 |

|

||||

| 3 Gbps | 3000 |

|

||||

| 10 Gbps | 4000 |

|

||||

| 2.5 Gbps | 3000 |

|

||||

| 5 Gbps | 4000 |

|

||||

|

||||

Below is a table of approximate aggregate throughput capacity, assuming a CPU with a [single thread](https://www.cpubenchmark.net/singleThread.html#server-thread) performance of 2700 or greater:

|

||||

Below is a table of approximate aggregate throughput capacity, assuming a CPU with a [single thread](https://www.cpubenchmark.net/singleThread.html#server-thread) performance of 2700 / 4000:

|

||||

|

||||

| Aggregate Throughput | CPU Cores |

|

||||

| ------------------------| ------------- |

|

||||

| 500 Mbps | 2 |

|

||||

| 1 Gbps | 4 |

|

||||

| 5 Gbps | 6 |

|

||||

| 10 Gbps | 8 |

|

||||

| 20 Gbps | 16 |

|

||||

| 50 Gbps | 32 |

|

||||

| 100 Gbps * | 64 |

|

||||

(* Estimated)

|

||||

| Aggregate Throughput | CPU Cores Needed (>2700 single-thread) | CPU Cores Needed (>4000 single-thread) |
| ------------------------| -------------------------------------- | -------------------------------------- |
| 500 Mbps | 2 | 2 |
| 1 Gbps | 4 | 2 |
| 5 Gbps | 6 | 4 |
| 10 Gbps | 8 | 6 |
| 20 Gbps | 16 | 8 |
| 50 Gbps | 32 | 16 |
| 100 Gbps | 64 | 32 |

|

||||

|

||||

So for example, an ISP delivering 1Gbps service plans with 10Gbps aggregate throughput would choose a CPU with a 2500+ single-thread score and 8 cores, such as the Intel Xeon E-2388G @ 3.20GHz.
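The core-count table above can be treated as a simple lookup. The following is a minimal sketch, assuming the table rows are tiers (a load is rounded up to the next listed throughput) and that scores of 4000 or more use the second column; the function name `cores_needed` is hypothetical and not part of LibreQoS.

```python
# Rows copied from the table above:
# (aggregate throughput in Gbps, cores at >2700 single-thread, cores at >4000 single-thread)
CORE_TABLE = [
    (0.5, 2, 2),
    (1, 4, 2),
    (5, 6, 4),
    (10, 8, 6),
    (20, 16, 8),
    (50, 32, 16),
    (100, 64, 32),
]


def cores_needed(aggregate_gbps, single_thread_score):
    """Return the table's core count for the smallest tier that covers the load.

    Assumption: scores below 2700 are outside the table, and scores of
    4000 or more use the right-hand column.
    """
    if single_thread_score < 2700:
        raise ValueError("the table only covers single-thread scores of 2700 or more")
    column = 2 if single_thread_score >= 4000 else 1
    for row in CORE_TABLE:
        if aggregate_gbps <= row[0]:
            return row[column]
    raise ValueError("the table does not cover more than 100 Gbps")
```

For example, `cores_needed(10, 3000)` picks the 10 Gbps row in the first column.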

|

||||

|

||||

### Memory

|

||||

* Minimum RAM = 2 + (0.002 x Subscriber Count) GB

|

||||

* Recommended RAM:

|

||||

|

||||

| Subscribers | RAM |

|

||||

| ------------- | ------------- |

|

||||

| 100 | 4 GB |

|

||||

| 1,000 | 8 GB |

|

||||

| 5,000 | 16 GB |

|

||||

| 10,000* | 18 GB |

|

||||

| 50,000* | 24 GB |

|

||||

|

||||

(* Estimated)

|

||||

| 100 | 8 GB |

|

||||

| 1,000 | 16 GB |

|

||||

| 5,000 | 64 GB |

|

||||

| 10,000 | 128 GB |

|

||||

| 20,000 | 256 GB |
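The minimum-RAM rule of thumb quoted above (2 GB plus 0.002 GB per subscriber) is easy to evaluate directly. This is only a sketch of that arithmetic; the function name is hypothetical, and the recommended values in the table are deliberately higher than this minimum.

```python
def minimum_ram_gb(subscriber_count):
    """Minimum RAM in GB: 2 + (0.002 x subscriber count)."""
    if subscriber_count < 0:
        raise ValueError("subscriber count cannot be negative")
    return 2 + 0.002 * subscriber_count


if __name__ == "__main__":
    for subscribers in (100, 1000, 5000, 10000):
        print(f"{subscribers:>6} subscribers: {minimum_ram_gb(subscribers):.1f} GB minimum")
```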

|

||||

|

||||

### Server Recommendations

|

||||

It is most cost-effective to buy a used server with specifications matching your unique requirements, as laid out in the System Requirements section above.

|

||||

For those who do not have the time to do that, here are some off-the-shelf options to consider:

|

||||

|

||||

| Aggregate | 100Mbps Plans | 1Gbps Plans | 4Gbps Plans |

|

||||

| ------------- | ------------- | ------------- | ------------- |

|

||||

| 1 Gbps Total | A | | |

|

||||

| 10 Gbps Total | B or C | B or C | C |

|

||||

|

||||

* A | [Lanner L-1513-4C](https://www.whiteboxsolution.com/product/l-1513/) (Select L-1513-4C)

|

||||

* B | [Supermicro SuperServer 510T-ML](https://www.thinkmate.com/system/superserver-510t-ml) (Select E-2388G)

|

||||

* C | [Supermicro AS-1015A-MT](https://store.supermicro.com/us_en/as-1015a-mt.html) (Ryzen 7 7700X, 2x16GB DDR5 4800MHz ECC, 1xSupermicro 10-Gigabit XL710+ X557)

|

||||

Here are some convenient, off-the-shelf server options to consider:

|

||||

| Throughput | Model | CPU Option | RAM Option | NIC Option | Extras | Temp Range |
| --- | --- | --- | --- | --- | --- | --- |
| 2.5 Gbps | [Supermicro SYS-E102-13R-E](https://store.supermicro.com/us_en/compact-embedded-iot-i5-1350pe-sys-e102-13r-e.html) | Default | 2x8GB | Built-in | [USB-C RJ45](https://www.amazon.com/Anker-Ethernet-PowerExpand-Aluminum-Portable/dp/B08CK9X9Z8/)| 0°C ~ 40°C (32°F ~ 104°F) |
| 10 Gbps | [Supermicro AS -1115S-FWTRT](https://store.supermicro.com/us_en/1u-amd-epyc-8004-compact-server-as-1115s-fwtrt.html) | 8124P | 2x16GB | Mellanox (2 x SFP28) | | 0°C ~ 40°C (32°F ~ 104°F) |
| 25 Gbps | [Supermicro AS -1115S-FWTRT](https://store.supermicro.com/us_en/1u-amd-epyc-8004-compact-server-as-1115s-fwtrt.html) | 8534P | 4x16GB | Mellanox (2 x SFP28) | | 0°C ~ 40°C (32°F ~ 104°F) |

|

||||

|

||||

103

docs/v1.4/Quickstart/configuration.md

Normal file


@ -0,0 +1,103 @@

|

||||

# Configure LibreQoS

|

||||

|

||||

## Configure lqos.conf

|

||||

|

||||

Copy the lqosd daemon configuration file to `/etc`:

|

||||

|

||||

```shell

|

||||

cd /opt/libreqos/src

|

||||

sudo cp lqos.example /etc/lqos.conf

|

||||

```

|

||||

|

||||

Now edit the file to match your setup with

|

||||

|

||||

```shell

|

||||

sudo nano /etc/lqos.conf

|

||||

```

|

||||

|

||||

Change `enp1s0f1` and `enp1s0f2` to match your network interfaces. It doesn't matter which one is which. Note that the interfaces are paired: when you enter enp1s0f<ins>**1**</ins> as the `name` in the first line, its `redirect_to` parameter is enp1s0f<ins>**2**</ins> (replace both with your actual interface names).

|

||||

|

||||

- First Line: `name = "enp1s0f1", redirect_to = "enp1s0f2"`

|

||||

- Second Line: `name = "enp1s0f2", redirect_to = "enp1s0f1"`

|

||||

|

||||

Then, if using Bifrost/XDP set `use_xdp_bridge = true` under that same `[bridge]` section.
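The pairing rule described above (each interface redirects to a partner that redirects back) can be checked mechanically before restarting the daemon. The following is a minimal sketch that works on a plain list of `name` / `redirect_to` pairs; it does not parse `/etc/lqos.conf` itself, and the function name `check_pairing` is hypothetical.

```python
def check_pairing(mappings):
    """Return a list of problems; an empty list means every interface
    redirects to a partner that redirects straight back to it."""
    redirect = {m["name"]: m["redirect_to"] for m in mappings}
    problems = []
    for name, target in redirect.items():
        if target == name:
            problems.append(f"{name} redirects to itself")
        elif redirect.get(target) != name:
            problems.append(f"{name} -> {target} is not mirrored by {target} -> {name}")
    return problems


if __name__ == "__main__":
    # Same values as the two example lines above.
    example = [
        {"name": "enp1s0f1", "redirect_to": "enp1s0f2"},
        {"name": "enp1s0f2", "redirect_to": "enp1s0f1"},
    ]
    print(check_pairing(example) or "pairing looks consistent")
```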

|

||||

|

||||

## Configure ispConfig.py

|

||||

|

||||

Copy ispConfig.example.py to ispConfig.py and edit as needed

|

||||

|

||||

```shell

|

||||

cd /opt/libreqos/src/

|

||||

cp ispConfig.example.py ispConfig.py

|

||||

nano ispConfig.py

|

||||

```

|

||||

|

||||

- Set upstreamBandwidthCapacityDownloadMbps and upstreamBandwidthCapacityUploadMbps to match the bandwidth in Mbps of your network's upstream / WAN internet connection. The same can be done for generatedPNDownloadMbps and generatedPNUploadMbps.

|

||||

- Set interfaceA to the interface facing your core router (or bridged internal network if your network is bridged)

|

||||

- Set interfaceB to the interface facing your edge router

|

||||

- Set ```enableActualShellCommands = True``` to allow the program to actually run the commands.

|

||||

|

||||

## Network.json

|

||||

|

||||

Network.json allows ISP operators to define a Hierarchical Network Topology, or Flat Network Topology.

|

||||

|

||||

For networks with no Parent Nodes (no strictly defined Access Points or Sites) edit the network.json to use a Flat Network Topology with

|

||||

```nano network.json```

|

||||

setting the following file content:

|

||||

|

||||

```json

|

||||

{}

|

||||

```

|

||||

|

||||

If you plan to use the built-in UISP or Splynx integrations, you do not need to create a network.json file quite yet.

|

||||

|

||||

If you plan to use the built-in UISP integration, it will create this automatically on its first run (assuming network.json is not already present). You can then modify the network.json to more accurately reflect your topology.

|

||||

|

||||

If you will not be using an integration, you can manually define the network.json following the template file - network.example.json

|

||||

|

||||

```text
+-----------------------------------------------------------------------+
| Entire Network                                                        |
+-----------------------+-----------------------+-----------------------+
| Parent Node A         | Parent Node B         | Parent Node C         |
+-----------------------+-------+-------+-------+-----------------------+
| Parent Node D | Sub 3 | Sub 4 | Sub 5 | Sub 6 | Sub 7 | Parent Node F |
+-------+-------+-------+-------+-------+-------+-------+-------+-------+
| Sub 1 | Sub 2 |       |                       |       | Sub 8 | Sub 9 |
+-------+-------+-------+-----------------------+-------+-------+-------+
```
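As an illustration, the hierarchy in the diagram above could be expressed as nested nodes and written out as JSON. This is a sketch only: the key names `downloadBandwidthMbps`, `uploadBandwidthMbps`, and `children` are assumptions about the layout of network.example.json, and every bandwidth figure is made up. Compare against the template file before relying on it. Subscribers (Sub 1 to Sub 9) are not listed here because they are attached through the Parent Node field of ShapedDevices.csv.

```python
import json


def node(download_mbps, upload_mbps, children=None):
    """Build one parent node (key names are assumed, see the note above)."""
    entry = {
        "downloadBandwidthMbps": download_mbps,
        "uploadBandwidthMbps": upload_mbps,
    }
    if children:
        entry["children"] = children
    return entry


# Parent nodes from the diagram; all rates are illustrative placeholders.
NETWORK = {
    "Parent Node A": node(1000, 1000, {"Parent Node D": node(500, 500)}),
    "Parent Node B": node(1000, 1000),
    "Parent Node C": node(1000, 1000, {"Parent Node F": node(500, 500)}),
}


def parent_node_names(nodes):
    """List every parent node name, depth first."""
    names = []
    for name, entry in nodes.items():
        names.append(name)
        names.extend(parent_node_names(entry.get("children", {})))
    return names


if __name__ == "__main__":
    print(json.dumps(NETWORK, indent=2))
```

The names returned by `parent_node_names` are the values that would need to match the Parent Node column in ShapedDevices.csv.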

|

||||

|

||||

## Manual Setup

|

||||

|

||||

You can use

|

||||

|

||||

```shell

|

||||

python3 csvToNetworkJSON.py

|

||||

```

|

||||

|

||||

to convert manualNetwork.csv to a network.json file.

|

||||

manualNetwork.csv can be copied from the template file, manualNetwork.template.csv

|

||||

|

||||

Note: The parent node name must match that used for clients in ShapedDevices.csv

|

||||

|

||||

## ShapedDevices.csv

|

||||

|

||||

If you are using an integration, this file will be automatically generated. If you are not using an integration, you can manually edit the file.

|

||||

|

||||

### Manual Editing

|

||||

|

||||

- Modify the ShapedDevices.csv file using your preferred spreadsheet editor (LibreOffice Calc, Excel, etc), following the template file - ShapedDevices.example.csv

|

||||

- Circuit ID is required. Must be a string of some sort (int is fine, gets parsed as string). Must NOT include any number symbols (#). Every circuit needs a unique CircuitID - they cannot be reused. Here, circuit essentially means customer location. If a customer has multiple locations on different parts of your network, use a unique CircuitID for each of those locations.

|

||||

- At least one IPv4 address or IPv6 address is required for each entry.

|

||||

- The Access Point or Site name should be set in the Parent Node field. Parent Node can be left blank for flat networks.

|

||||

- The ShapedDevices.csv file allows you to set minimum guaranteed, and maximum allowed bandwidth per subscriber.

|

||||

- The minimum allowed plan rates for Circuits are 2Mbit. Bandwidth min and max should both be above that threshold.

|

||||

- Recommendation: set the min bandwidth to something like 25/10 and max to 1.15X advertised plan rate by using bandwidthOverheadFactor = 1.15

|

||||

- This way, when an AP hits its ceiling, users have any remaining AP capacity fairly distributed between them.

|

||||

- Ensure a reasonable minimum bandwidth for every subscriber, allowing them to utilize up to the maximum provided when AP utilization is below 100%.

|

||||

|

||||

Note regarding SLAs: For customers with SLA contracts that guarantee them a minimum bandwidth, set their plan rate as the minimum bandwidth. That way when an AP approaches its ceiling, SLA customers will always get that amount.
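The rate guidance above (a 2 Mbit floor, a modest guaranteed minimum, and a maximum of about 1.15x the advertised plan) can be sketched as a small helper. This is an illustration of the arithmetic under stated assumptions, not how LibreQoS applies `bandwidthOverheadFactor` internally; the function name, the dictionary keys, and the 25/10 defaults (taken from the recommendation above) are hypothetical.

```python
MIN_RATE_MBPS = 2  # minimum allowed plan rate for a circuit, as stated above


def effective_rates(circuit_id, plan_down_mbps, plan_up_mbps,
                    min_down_mbps=25, min_up_mbps=10, overhead_factor=1.15):
    """Return min/max rates for one circuit, or raise ValueError if invalid."""
    circuit_id = str(circuit_id)
    if not circuit_id or "#" in circuit_id:
        raise ValueError("Circuit ID is required and must not contain '#'")

    max_down = round(plan_down_mbps * overhead_factor, 2)
    max_up = round(plan_up_mbps * overhead_factor, 2)

    for label, low, high in (("download", min_down_mbps, max_down),
                             ("upload", min_up_mbps, max_up)):
        if low < MIN_RATE_MBPS or high < MIN_RATE_MBPS:
            raise ValueError(f"{label} rates must be at least {MIN_RATE_MBPS} Mbps")
        if low > high:
            raise ValueError(f"{label} minimum exceeds maximum")

    return {
        "circuit_id": circuit_id,
        "min_down_mbps": min_down_mbps,
        "min_up_mbps": min_up_mbps,
        "max_down_mbps": max_down,
        "max_up_mbps": max_up,
    }
```

For an SLA customer, the same helper could be called with `min_down_mbps` and `min_up_mbps` set to the contracted plan rate, per the note above.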

|

||||

|

||||

|

||||

|

||||

Once your configuration is complete, you're ready to run the application and start the [Daemons](./services-and-run.md)

|

||||

42

docs/v1.4/Quickstart/networkdesignassumptions.md

Normal file


@ -0,0 +1,42 @@

|

||||

# Network Design Assumptions

|

||||

|

||||

## Officially supported configuration

|

||||

|

||||

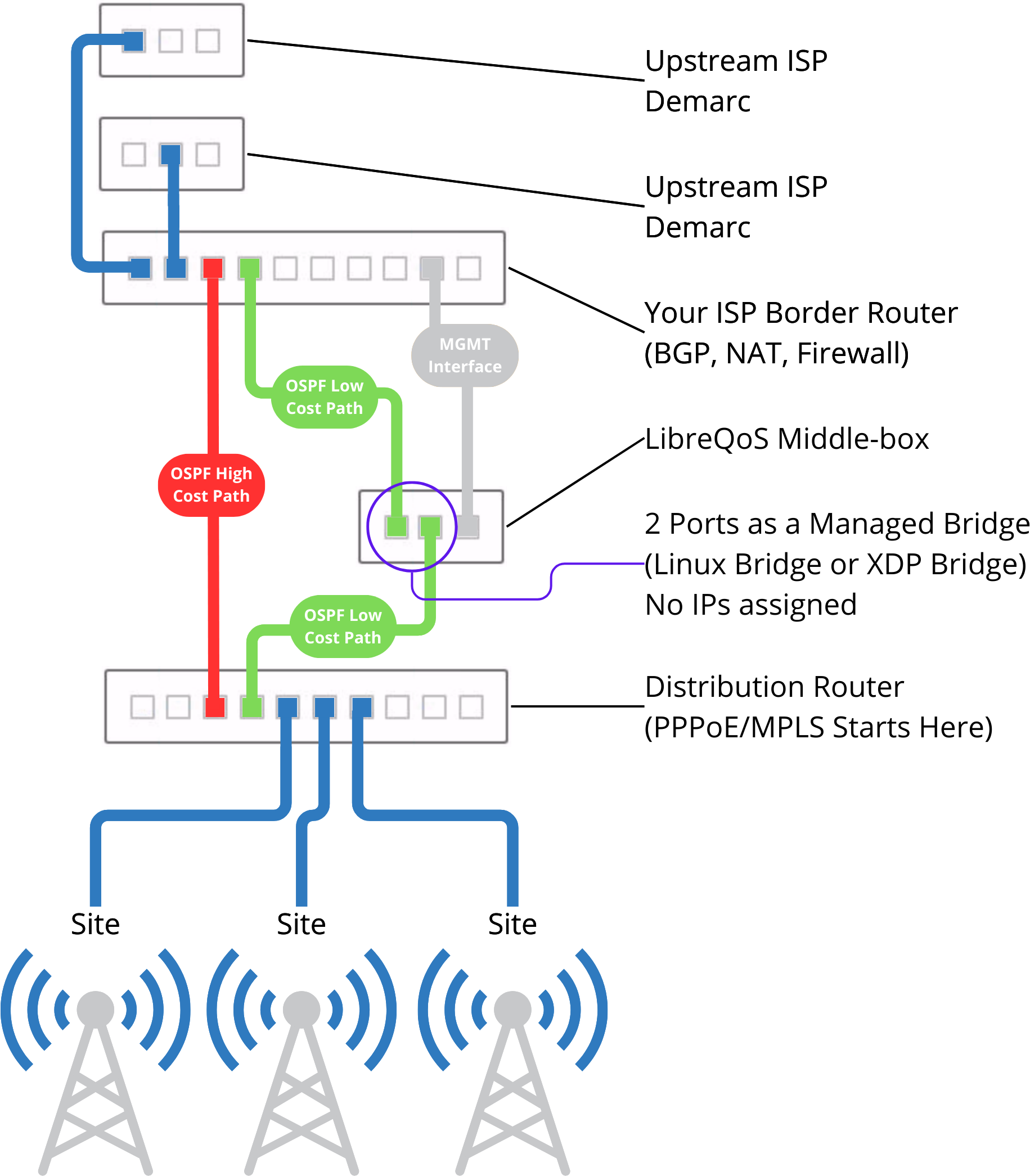

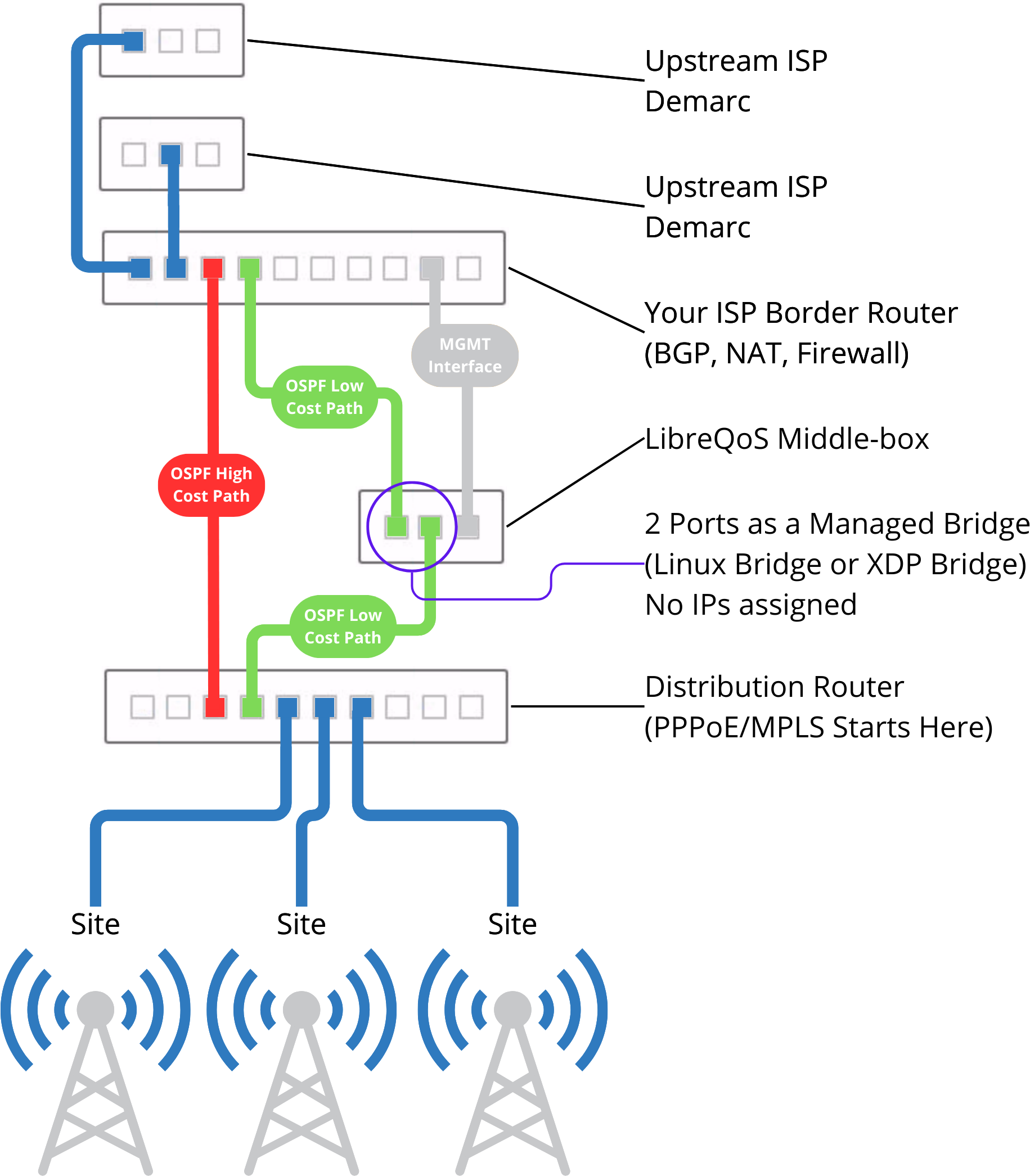

- LibreQoS placed inline in network, usually between an edge router (NAT, firewall) and core router (distribution to sites across network).

|

||||

- If you use NAT/CG-NAT, place LibreQoS inline south of where NAT is applied, as LibreQoS needs to shape internal addresses (100.64.0.0/10), not public post-NAT IPs.

|

||||

- Edge and Core routers should have 1500 MTU on links between them

|

||||

- If you use MPLS, you would terminate MPLS traffic at the core router. LibreQoS cannot decapsulate MPLS on its own.

|

||||

- OSPF primary link (low cost) through the server running LibreQoS

|

||||

- OSPF backup link (high cost, maybe 200 for example)

|

||||

|

||||

|

||||

|

||||

### Network Interface Card

|

||||

|

||||

```{note}

|

||||

You must have one of these:

|

||||

- single NIC with two interfaces,

|

||||

- two NICs with single interface,

|

||||

- 2x VLANs interface (using one or two NICs).

|

||||

```

|

||||

|

||||

LibreQoS requires NICs to have 2 or more RX/TX queues and XDP support. While many cards theoretically meet these requirements, less commonly used cards tend to have unreported driver bugs which impede XDP functionality and make them unusable for our purposes. At this time we recommend the Intel x520, Intel x710, and Nvidia (ConnectX-5 or newer) NICs. We cannot guarantee compatibility with other cards.
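One way to check the queue requirement above on a running Linux host is to count the `rx-*` and `tx-*` entries that the kernel exposes under `/sys/class/net/<interface>/queues`. The following is a minimal sketch with hypothetical function names; it only counts queues and says nothing about XDP support or driver quality.

```python
import os


def queue_counts(interface, sysfs_root="/sys/class/net"):
    """Return (rx_queues, tx_queues) for an interface by listing sysfs."""
    queue_dir = os.path.join(sysfs_root, interface, "queues")
    names = os.listdir(queue_dir)
    rx = sum(1 for name in names if name.startswith("rx-"))
    tx = sum(1 for name in names if name.startswith("tx-"))
    return rx, tx


def has_enough_queues(interface, sysfs_root="/sys/class/net"):
    """True when the interface exposes at least 2 RX and 2 TX queues."""
    rx, tx = queue_counts(interface, sysfs_root)
    return rx >= 2 and tx >= 2
```

Calling `has_enough_queues("enp1s0f1")` (substituting your own interface name) gives a quick yes/no before going further with the install.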

|

||||

|

||||

## Alternate configuration (Not officially supported)

|

||||

|

||||

This alternate configuration uses Spanning Tree Protocol (STP) to modify the data path in the event the LibreQoS device is offline for maintenance or another problem.

|

||||

|

||||

```{note}

|

||||

Most of the same considerations apply to the alternate configuration as they do to the officially supported configuration

|

||||

```

|

||||

|

||||

- LibreQoS placed inline in network, usually between an edge router (NAT, firewall) and core router (distribution to sites across network).

|

||||

- If you use NAT/CG-NAT, place LibreQoS inline south of where NAT is applied, as LibreQoS needs to shape internal addresses (100.64.0.0/10), not public post-NAT IPs.

|

||||

- Edge router and Core switch should have 1500 MTU on links between them

|

||||

- If you use MPLS, you would terminate MPLS traffic somewhere south of the core/distribution switch. LibreQoS cannot decapsulate MPLS on its own.

|

||||

- Spanning Tree primary link (low cost) through the server running LibreQoS

|

||||

- Spanning Tree backup link (high cost, maybe 80 for example)

|

||||

|

||||

Keep in mind that if you use different bandwidth links, for example, 10 Gbps through LibreQoS, and 1 Gbps between core switch and edge router, you may need to be more intentional with your STP costs.

|

||||

|

||||

|

||||

37

docs/v1.4/Quickstart/quickstart-libreqos-1.4.md

Normal file


@ -0,0 +1,37 @@

|

||||

# Install LibreQoS 1.4

|

||||

|

||||

## Updating from v1.3

|

||||

|

||||

### Remove offloadOff.service

|

||||

|

||||

```shell

|

||||

sudo systemctl disable offloadOff.service

|

||||

sudo rm /usr/local/sbin/offloadOff.sh /etc/systemd/system/offloadOff.service

|

||||

```

|

||||

|

||||

### Remove cron tasks from v1.3

|

||||

|

||||

Run ```sudo crontab -e``` and remove any entries pertaining to LibreQoS from v1.3.

|

||||

|

||||

## Simple install via .Deb package (Recommended)

|

||||

|

||||

Use the deb package from the [latest v1.4 release](https://github.com/LibreQoE/LibreQoS/releases/).

|

||||

|

||||

```shell
echo "deb http://stats.libreqos.io/ubuntu jammy main" | sudo tee -a /etc/apt/sources.list.d/libreqos.list
wget -O - -q http://stats.libreqos.io/repo.asc | sudo apt-key add -
sudo apt-get update
sudo apt-get install libreqos
```

|

||||

|

||||

You will be asked some questions about your configuration, and the management daemon and webserver will automatically start. Go to http://<your_ip>:9123/ to finish installation.

|

||||

|

||||

## Complex Install (Not Recommended)

|

||||

|

||||

```{note}

|

||||

Use this install if you'd like to constantly deploy from the main branch on Github. For experienced users only!

|

||||

```

|

||||

|

||||

[Complex Installation](../TechnicalDocs/complex-install.md)

|

||||

|

||||

You are now ready to [Configure](./configuration.md) LibreQoS!

|

||||

87

docs/v1.4/Quickstart/quickstart-prereq.md

Normal file


@ -0,0 +1,87 @@

|

||||

# Server Setup - Pre-requisites

|

||||

|

||||

Disable hyperthreading in the BIOS/UEFI of your host system. Hyperthreading is also known as Simultaneous Multithreading (SMT) on AMD systems. Disabling this is very important for optimal performance of the XDP cpumap filtering and, in turn, throughput and latency.

|

||||

|

||||

- Boot, pressing the appropriate key to enter the BIOS settings

|

||||

- For AMD systems, you will have to navigate the settings to find the "SMT Control" setting. Usually it is under something like ```Advanced -> AMD CBS -> CPU Common Options -> Thread Enablement -> SMT Control``` Once you find it, switch to "Disabled" or "Off"

|

||||

- For Intel systems, you will also have to navigate the settings to find the "hyperthreading" toggle option. On HP servers it's under ```System Configuration > BIOS/Platform Configuration (RBSU) > Processor Options > Intel (R) Hyperthreading Options.```

|

||||

- Save changes and reboot

|

||||

|

||||

## Install Ubuntu Server

|

||||

|

||||

We recommend Ubuntu Server because its kernel version tends to track closely with the mainline Linux releases. Our current documentation assumes Ubuntu Server. To run LibreQoS v1.4, Linux kernel 5.11 or greater is required, as 5.11 includes some important XDP patches. Ubuntu Server 22.04 ships with kernel 5.15, which meets that requirement.
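The kernel requirement above can be checked from a release string such as the output of `uname -r`. This is a minimal sketch with a hypothetical function name; it compares only the major and minor version numbers against 5.11.

```python
import re


def kernel_at_least(release, minimum=(5, 11)):
    """Return True if a release string like '5.15.0-91-generic' is >= minimum."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    version = (int(match.group(1)), int(match.group(2)))
    return version >= minimum
```

On the server itself, `kernel_at_least(platform.release())` (after `import platform`) would test the running kernel.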

|

||||

|

||||

You can download Ubuntu Server 22.04 from <a href="https://ubuntu.com/download/server">https://ubuntu.com/download/server</a>.

|

||||

|

||||

1. Boot Ubuntu Server from USB.

|

||||

2. Follow the steps to install Ubuntu Server.

|

||||

3. If you use a Mellanox network card, the Ubuntu Server installer will ask you whether to install the mellanox/intel NIC drivers. Check the box to confirm. This extra driver is important.

|

||||

4. On the Networking settings step, it is recommended to assign a static IP address to the management NIC.

|

||||

5. Ensure SSH server is enabled so you can more easily log into the server later.

|

||||

6. You can use scp or sftp to access files from your LibreQoS server for easier file editing. Here's how to access via scp or sftp using an [Ubuntu](https://www.addictivetips.com/ubuntu-linux-tips/sftp-server-ubuntu/) or [Windows](https://winscp.net/eng/index.php) machine.

|

||||

|

||||

### Choose Bridge Type

|

||||

|

||||

There are two options for the bridge to pass data through your two interfaces:

|

||||

|

||||

- Bifrost XDP-Accelerated Bridge

|

||||

- Regular Linux Bridge

|

||||

|

||||

The Bifrost Bridge is recommended for Intel NICs with XDP support, such as the X520 and X710.

|

||||

The regular Linux bridge is recommended for Nvidia/Mellanox NICs such as the ConnectX-5 series (which have superior bridge performance), and VM setups using virtualized NICs.

|

||||

To use the Bifrost bridge, skip the regular Linux bridge section below, and be sure to enable Bifrost/XDP in lqos.conf a few sections below.

|

||||

|

||||

### Adding a regular Linux bridge (if not using Bifrost XDP bridge)

|

||||

|

||||

From the Ubuntu VM, create a linux interface bridge - br0 - with the two shaping interfaces.

|

||||

Find your existing .yaml file in /etc/netplan/ with

|

||||

|

||||

```shell

|

||||

cd /etc/netplan/

|

||||

ls

|

||||

```

|

||||

|

||||

Then edit the .yaml file there with

|

||||

|

||||

```shell

|

||||

sudo nano XX-cloud-init.yaml

|

||||

```

|

||||

|

||||

With XX corresponding to the name of the existing file.

|

||||

|

||||

Editing the .yaml file, we need to define the shaping interfaces (here, ens19 and ens20) and add the bridge with those two interfaces. Assuming your interfaces are ens18, ens19, and ens20, here is what your file might look like:

|

||||

|

||||

```yaml
# This is the network config written by 'subiquity'
network:
  ethernets:
    ens18:
      addresses:
      - 10.0.0.12/24
      routes:
      - to: default
        via: 10.0.0.1
      nameservers:
        addresses:
        - 1.1.1.1
        - 8.8.8.8
        search: []
    ens19:
      dhcp4: no
    ens20:
      dhcp4: no
  version: 2
  bridges:
    br0:
      interfaces:
        - ens19
        - ens20
```

|

||||

|

||||

Make sure to replace 10.0.0.12/24 with your LibreQoS VM's address and subnet, and to replace the default gateway 10.0.0.1 with whatever your default gateway is.

|

||||

|

||||

Then run

|

||||

|

||||

```shell

|

||||

sudo netplan apply

|

||||

```
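The address and gateway substitution described above is a common place for typos. A quick sanity check, sketched here with Python's standard `ipaddress` module and a hypothetical function name, is to confirm that the default gateway actually falls inside the management subnet:

```python
import ipaddress


def gateway_in_subnet(address_with_prefix, gateway):
    """True if the gateway lies inside the subnet of the given address."""
    interface = ipaddress.ip_interface(address_with_prefix)
    return ipaddress.ip_address(gateway) in interface.network


if __name__ == "__main__":
    # Values from the example netplan file; replace them with your own.
    print(gateway_in_subnet("10.0.0.12/24", "10.0.0.1"))
```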

|

||||

71

docs/v1.4/Quickstart/services-and-run.md

Normal file


@ -0,0 +1,71 @@

|

||||

# LibreQoS daemons

|

||||

|

||||

lqosd

|

||||

|

||||

- Manages actual XDP code. Built with Rust.

|

||||

|

||||

lqos_node_manager

|

||||

|

||||

- Runs the GUI available at http://a.b.c.d:9123

|

||||

|

||||

lqos_scheduler

|

||||

|

||||

- lqos_scheduler handles statistics and performs continuous refreshes of LibreQoS' shapers, including pulling from any enabled CRM Integrations (UISP, Splynx).

|

||||

- On start: Run a full setup of queues

|

||||

- Every 10 seconds: Graph bandwidth and latency stats

|

||||

- Every 30 minutes: Update queues, pulling new configuration from CRM integration if enabled
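The cadence listed above can be pictured as a function of elapsed time. The sketch below only illustrates the three intervals (on start, every 10 seconds, every 30 minutes); it is not how scheduler.py is implemented, and the task labels are hypothetical.

```python
STATS_INTERVAL_S = 10          # graph bandwidth and latency stats
REFRESH_INTERVAL_S = 30 * 60   # update queues, pulling from the CRM if enabled


def tasks_due(elapsed_seconds):
    """Return the tasks that fall due at this whole-second tick since start."""
    if elapsed_seconds == 0:
        return ["full queue setup"]
    due = []
    if elapsed_seconds % STATS_INTERVAL_S == 0:
        due.append("graph stats")
    if elapsed_seconds % REFRESH_INTERVAL_S == 0:
        due.append("update queues")
    return due
```

At the 30-minute mark both the stats task and the queue update fall due in the same tick.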

|

||||

|

||||

## Run daemons with systemd

|

||||

|

||||

You can set up `lqosd`, `lqos_node_manager`, and `lqos_scheduler` as systemd services.

|

||||

|

||||

```shell

|

||||

sudo cp /opt/libreqos/src/bin/lqos_node_manager.service.example /etc/systemd/system/lqos_node_manager.service

|

||||

sudo cp /opt/libreqos/src/bin/lqosd.service.example /etc/systemd/system/lqosd.service

|

||||

sudo cp /opt/libreqos/src/bin/lqos_scheduler.service.example /etc/systemd/system/lqos_scheduler.service

|

||||

```

|

||||

|

||||

Finally, run

|

||||

|

||||

```shell

|

||||

sudo systemctl daemon-reload

|

||||

sudo systemctl enable lqosd lqos_node_manager lqos_scheduler

|

||||

```

|

||||

|

||||

You can now point a web browser at `http://a.b.c.d:9123` (replace `a.b.c.d` with the management IP address of your shaping server) and enjoy a real-time view of your network.

|

||||

|

||||

## Debugging lqos_scheduler

|

||||

|

||||

In the background, lqos_scheduler runs scheduler.py, which in turn runs LibreQoS.py

|

||||

|

||||

One-time runs of these individual components can be very helpful for debugging and to make sure everything is correctly configured.

|

||||

|

||||

First, stop lqos_scheduler

|

||||

|

||||

```shell

|

||||

sudo systemctl stop lqos_scheduler

|

||||

```

|

||||

|

||||

For one-time runs of LibreQoS.py, use

|

||||

|

||||

```shell

|

||||

sudo ./LibreQoS.py

|

||||

```

|

||||

|

||||

- To use the debug mode with more verbose output, use:

|

||||

|

||||

```shell

|

||||

sudo ./LibreQoS.py --debug

|

||||

```

|

||||

|

||||

To confirm that lqos_scheduler (scheduler.py) is able to work correctly, run:

|

||||

|

||||

```shell

|

||||

sudo python3 scheduler.py

|

||||

```

|

||||

|

||||

Once you have any errors eliminated, restart lqos_scheduler with

|

||||

|

||||

```shell

|

||||

sudo systemctl start lqos_scheduler

|

||||

```

|

||||

31

docs/v1.4/Quickstart/share.md

Normal file


@ -0,0 +1,31 @@

|

||||

# Share your before and after

|

||||

|

||||

We ask that you please share an anonymized screenshot of your LibreQoS deployment before (monitor only mode) and after (queuing enabled) to the [LibreQoS Chat](https://chat.libreqos.io/join/fvu3cerayyaumo377xwvpev6/). This helps us gauge the impact of our software. It also makes us smile.

|

||||

|

||||

1. Enable monitor only mode

|

||||

2. Klingon mode (Redact customer info)

|

||||

3. Screenshot

|

||||

4. Resume regular queuing

|

||||

5. Screenshot

|

||||

|

||||

## Enable monitor only mode

|

||||

|

||||

```shell

|

||||

sudo systemctl stop lqos_scheduler

|

||||

sudo systemctl restart lqosd

|

||||

sudo systemctl restart lqos_node_manager

|

||||

```

|

||||

|

||||

## Klingon mode

|

||||

|

||||

Please go to the Web UI and click Configuration. Toggle Redact Customer Information (screenshot mode) and then Apply Changes.

|

||||

|

||||

## Resume regular queuing

|

||||

|

||||

```shell

|

||||

sudo systemctl start lqos_scheduler

|

||||

```

|

||||

|

||||

## Screenshot

|

||||

|

||||

To generate a screenshot - please go to the Web UI and click Configuration. Toggle Redact Customer Information (screenshot mode), Apply Changes, and then return to the dashboard to take a screenshot.

|

||||

56

docs/v1.4/TechnicalDocs/complex-install.md

Normal file


@ -0,0 +1,56 @@

|

||||

# Complex install (Not Recommended)

|

||||

|

||||

## Clone the repo

|

||||

|

||||

The recommended install location is `/opt/libreqos`

|

||||

Go to the install location, and clone the repo:

|

||||

|

||||

```shell
cd /opt/
# /opt is normally owned by root, so clone with sudo and then hand the tree to your user
sudo git clone https://github.com/LibreQoE/LibreQoS.git libreqos
sudo chown -R YOUR_USER /opt/libreqos
```

|

||||

|

||||

By specifying `libreqos` at the end, git will ensure the folder name is lowercase.

|

||||

|

||||

## Install Dependencies from apt and pip

|

||||

|

||||

You need to have a few packages from `apt` installed:

|

||||

|

||||

```shell

|

||||

sudo apt-get install -y python3-pip clang gcc gcc-multilib llvm libelf-dev git nano graphviz curl screen pkg-config linux-tools-common linux-tools-`uname -r` libbpf-dev libssl-dev

|

||||

```

|

||||

|

||||

Then you need to install some Python dependencies:

|

||||

|

||||

```shell

|

||||

cd /opt/libreqos

|

||||

python3 -m pip install -r requirements.txt

|

||||

sudo python3 -m pip install -r requirements.txt

|

||||

```

|

||||

|

||||

## Install the Rust development system

|

||||

|

||||

Go to [RustUp](https://rustup.rs) and follow the instructions. Basically, run the following:

|

||||

|

||||

```shell

|

||||

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

|

||||

```

|

||||

|

||||

When Rust finishes installing, it will tell you to execute a command to place the Rust build tools into your path. You need to either execute this command or log out and back in again.

|

||||

|

||||

Once that's done, please run:

|

||||

|

||||

```shell

|

||||

cd /opt/libreqos/src/

|

||||

./build_rust.sh

|

||||

```

|

||||

|

||||

This will take a while the first time, but it puts everything in the right place.

|

||||

|

||||

Now, to build rust crates, run:

|

||||

|

||||

```shell

|

||||

cd rust

|

||||

cargo build --all

|

||||

```

|

||||

12

docs/v1.4/TechnicalDocs/extras.md

Normal file


@ -0,0 +1,12 @@

|

||||

# Extras

|

||||

|

||||

## Flamegraph

|

||||

|

||||

```shell

|

||||

git clone https://github.com/brendangregg/FlameGraph.git

|

||||

cd FlameGraph

|

||||

sudo perf record -F 99 -a -g -- sleep 60

|

||||

perf script > out.perf

|

||||

./stackcollapse-perf.pl out.perf > out.folded

|

||||

./flamegraph.pl --title LibreQoS --width 7200 out.folded > libreqos.svg

|

||||

```

|

||||

65

docs/v1.4/TechnicalDocs/integrations.md

Normal file


@ -0,0 +1,65 @@

|

||||

# Integrations

|

||||

|

||||

## UISP Integration

|

||||

|

||||

First, set the relevant parameters for UISP (uispAuthToken, UISPbaseURL, etc.) in ispConfig.py.

|

||||

|

||||

To test the UISP Integration, use

|

||||

|

||||

```shell

|

||||

python3 integrationUISP.py

|

||||

```

|

||||

|

||||

On the first successful run, it will create a network.json and ShapedDevices.csv file.

|

||||

If a network.json file exists, it will not be overwritten.

|

||||

You can modify the network.json file to more accurately reflect bandwidth limits.

|

||||

ShapedDevices.csv will be overwritten every time the UISP integration is run.

|

||||

You have the option to run integrationUISP.py automatically on boot and every 10 minutes, which is recommended. This can be enabled by setting ```automaticImportUISP = True``` in ispConfig.py

|

||||

|

||||

## Powercode Integration

|

||||

|

||||

First, set the relevant parameters for Powercode (powercode_api_key, powercode_api_url, etc.) in ispConfig.py.

|

||||

|

||||

To test the Powercode Integration, use

|

||||

|

||||

```shell
python3 integrationPowercode.py
```

|

||||

|

||||

On the first successful run, it will create a ShapedDevices.csv file.

|

||||

You can modify the network.json file manually to reflect Site/AP bandwidth limits.

|

||||

ShapedDevices.csv will be overwritten every time the Powercode integration is run.

|

||||

You have the option to run integrationPowercode.py automatically on boot and every 10 minutes, which is recommended. This can be enabled by setting ```automaticImportPowercode = True``` in ispConfig.py

|

||||

|

||||

## Sonar Integration

|

||||

|

||||

First, set the relevant parameters for Sonar (sonar_api_key, sonar_api_url, etc.) in ispConfig.py.

|

||||

|

||||

To test the Sonar Integration, use

|

||||

|

||||

```shell
python3 integrationSonar.py
```

|

||||

|

||||

On the first successful run, it will create a ShapedDevices.csv file.

|

||||

If a network.json file exists, it will not be overwritten.

|

||||

You can modify the network.json file to more accurately reflect bandwidth limits.

|

||||

ShapedDevices.csv will be overwritten every time the Sonar integration is run.

|

||||

You have the option to run integrationSonar.py automatically on boot and every 10 minutes, which is recommended. This can be enabled by setting ```automaticImportSonar = True``` in ispConfig.py

|

||||

|

||||

## Splynx Integration

|

||||

|

||||

First, set the relevant parameters for Splynx (splynx_api_key, splynx_api_secret, etc.) in ispConfig.py.

|

||||

|

||||

The Splynx Integration uses Basic authentication. For using this type of authentication, please make sure you enable [Unsecure access](https://splynx.docs.apiary.io/#introduction/authentication) in your Splynx API key settings. Also the Splynx API key should be granted access to the necessary permissions.

|

||||

|

||||

To test the Splynx Integration, use

|

||||

|

||||

```shell
python3 integrationSplynx.py
```

|

||||

|

||||

On the first successful run, it will create a ShapedDevices.csv file.

|

||||

You can manually create your network.json file to more accurately reflect bandwidth limits.

|

||||

ShapedDevices.csv will be overwritten every time the Splynx integration is run.

|

||||

You have the option to run integrationSplynx.py automatically on boot and every 10 minutes, which is recommended. This can be enabled by setting ```automaticImportSplynx = True``` in ispConfig.py

|

||||

28

docs/v1.4/TechnicalDocs/performance-tuning.md

Normal file

@ -0,0 +1,28 @@

|

||||

# Performance Tuning

|

||||

|

||||

## Ubuntu Starts Slowly (~2 minutes)

|

||||

|

||||

### List all services which require network

|

||||

|

||||

```shell
systemctl show -p WantedBy network-online.target
```

|

||||

|

||||

### For Ubuntu 22.04 this command can help

|

||||

|

||||

```shell
systemctl disable cloud-config iscsid cloud-final
```

|

||||

|

||||

### Set proper governor for CPU (baremetal/hypervisor host)

|

||||

|

||||

```shell
cpupower frequency-set --governor performance
```

|

||||

|

||||

### OSPF

|

||||

|

||||

It is recommended to set the OSPF timers of both OSPF neighbors (core and edge router) to minimize downtime upon a reboot of the LibreQoS server.

|

||||

|

||||

* hello interval

|

||||

* dead

|

||||

42

docs/v1.4/TechnicalDocs/troubleshooting.md

Normal file

@ -0,0 +1,42 @@

|

||||

# Troubleshooting

|

||||

|

||||

## Common Issues

|

||||

|

||||

### LibreQoS Is Running, But Traffic Not Shaping

|

||||

|

||||

In ispConfig.py, make sure the edge and core interfaces correspond correctly to the edge and core. Try swapping the interfaces to see if shaping starts to work.

|

||||

|

||||

Make sure your services are running properly

|

||||

|

||||

- `lqosd.service`

|

||||

- `lqos_node_manager`

|

||||

- `lqos_scheduler`

|

||||

|

||||

Node manager and scheduler are dependent on the `lqosd.service` being in a healthy, running state.

|

||||

|

||||

For example, to check the status of lqosd, run:

|

||||

```sudo systemctl status lqosd```

|

||||

|

||||

### lqosd not running or failed to start

|

||||

At the command-line, type ```sudo RUST_LOG=info /opt/libreqos/src/bin/lqosd``` which will provide specifics regarding why it failed to start.

|

||||

|

||||

### RTNETLINK answers: Invalid argument

|

||||

|

||||

This tends to show up when the MQ qdisc cannot be added correctly to the NIC interface. This would suggest the NIC has insufficient RX/TX queues. Please make sure you are using the [recommended NICs](../SystemRequirements/Networking.md).

|

||||

|

||||

### InfluxDB "Failed to update bandwidth graphs"

|

||||

|

||||

The scheduler (scheduler.py) runs the InfluxDB integration within a try/except statement. If it fails to update InfluxDB, it will report "Failed to update bandwidth graphs".

|

||||

To find the exact cause of the failure, please run ```python3 graphInfluxDB.py``` which will provide more specific errors.

|

||||

|

||||

### All customer IPs are listed under Unknown IPs, rather than Shaped Devices in GUI

|

||||

```
cd /opt/libreqos/src
sudo systemctl stop lqos_scheduler
sudo python3 LibreQoS.py
```

|

||||

|

||||

The console output from running LibreQoS.py directly provides more specific errors regarding issues with ShapedDevices.csv and network.json

|

||||

Once you have identified the error and fixed ShapedDevices.csv and/or network.json, please then run

|

||||

|

||||

```sudo systemctl start lqos_scheduler```

|

||||

24

docs/v1.4/Updates/update.md

Normal file

@ -0,0 +1,24 @@

|

||||

# Updating 1.4 To Latest Version

|

||||

|

||||

```{warning}
If you use the XDP bridge, traffic will stop passing through the bridge during the update (XDP bridge is only operating while lqosd runs).
```

|

||||

|

||||

## If you installed with Git

|

||||

|

||||

1. Change to your `LibreQoS` directory (e.g. `cd /opt/LibreQoS`)

|

||||

2. Update from Git: `git pull`

|

||||

3. Recompile: `./build_rust.sh`

|

||||

4. `sudo rust/remove_pinned_maps.sh`

|

||||

|

||||

Run the following commands to reload the LibreQoS services.

|

||||

|

||||

```shell
sudo systemctl restart lqosd
sudo systemctl restart lqos_node_manager
sudo systemctl restart lqos_scheduler
```

|

||||

|

||||

## If you installed through the APT repository

|

||||

|

||||

All you should have to do in this case is run `sudo apt update && sudo apt upgrade` and LibreQoS should install the new package.

|

||||

1

docs/v1.4/test.txt

Normal file

@ -0,0 +1 @@

|

||||

|

||||

17

docs/v1.5/ChangeNotes/v1.5.md

Normal file

@ -0,0 +1,17 @@

|

||||

# LibreQoS v1.4 to v1.5 Change Summary

|

||||

|

||||

NLNet Milestones: 2B and 2C.

|

||||

|

||||

This is a relatively huge development branch. Major features:

|

||||

|

||||

* The kernel-side XDP now performs all dissection and analysis in the XDP side, not the TC side. This results in better CPU usage overall.

|

||||

* If your kernel/drivers support it, use eBPF metadata functionality to completely skip a secondary LPM check - for a substantial CPU usage decrease.

|

||||

* Packets are divided into "flows" (by a source IP/dest IP/protocol/src port/dst port tuple).

|

||||

* Flows gather TCP retransmission data, as well as byte/packet counts.

|

||||

* Flows are scanned for RTT (by time sequence). When one occurs, instead of using a regularly polled map (which proved slow), they are sent to the userspace daemon by a kernel ringbuffer/message system.

|

||||

* RTT messages are received by the userspace and compared with an "ignore" list. If they aren't ignored, they are categorized by remote IP for ASN information, and RTT data placed in a large ringbuffer.

|

||||

* Flows are expired after a TCP FIN or RST event, or 30 seconds (configurable) after they cease sending data.

|

||||

* Once a flow expires, it is sent to the "finished flow system".

|

||||

* The finished flow system categorizes by target ASN, target location (geolocated via a free database), IP protocol and ethertype. These are displayed in the GUI.

|

||||

* Optionally, finished flows can be sent to another host in summary form via Netflow V5 or Netflow V9 protocols - allowing for further analysis with tools such as `ntop`.

|

||||

* Quite a bit of UI work to accommodate all of this.
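The flow lifecycle described in the list above can be illustrated with a small userspace model. This is only a sketch in Python, not the project's kernel-side XDP or Rust code: the class names, field names and the `record_packet`/`expire` methods are hypothetical, and the 30-second timeout is taken from the bullet above.

```python
from dataclasses import dataclass
from typing import Dict, List, NamedTuple, Tuple


class FlowKey(NamedTuple):
    """The five-tuple that identifies a flow."""
    src_ip: str
    dst_ip: str
    protocol: int
    src_port: int
    dst_port: int


@dataclass
class FlowStats:
    """Per-flow counters (hypothetical field names)."""
    bytes_sent: int = 0
    packets_sent: int = 0
    tcp_retransmits: int = 0
    last_seen: float = 0.0
    closed: bool = False  # set once a TCP FIN or RST is observed


class FlowTable:
    """Tracks active flows and moves expired ones to a finished list."""

    def __init__(self, timeout_secs: float = 30.0) -> None:
        self.timeout_secs = timeout_secs
        self.active: Dict[FlowKey, FlowStats] = {}
        self.finished: List[Tuple[FlowKey, FlowStats]] = []

    def record_packet(self, key: FlowKey, size: int, now: float,
                      retransmit: bool = False,
                      fin_or_rst: bool = False) -> None:
        # Create the flow on first sight, then update its counters.
        stats = self.active.setdefault(key, FlowStats())
        stats.bytes_sent += size
        stats.packets_sent += 1
        if retransmit:
            stats.tcp_retransmits += 1
        stats.last_seen = now
        if fin_or_rst:
            stats.closed = True

    def expire(self, now: float) -> int:
        # A flow expires after FIN/RST, or once it has been idle
        # for at least timeout_secs.
        expired = [
            key for key, stats in self.active.items()
            if stats.closed or now - stats.last_seen >= self.timeout_secs
        ]
        for key in expired:
            self.finished.append((key, self.active.pop(key)))
        return len(expired)
```

In this model, the `finished` list stands in for the "finished flow system"; categorization by ASN, geolocation and Netflow export are left out.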

|

||||

101

docs/v1.5/Quickstart/configuration.md

Normal file

@ -0,0 +1,101 @@

|

||||

# Configure LibreQoS

|

||||

|

||||

## Configure lqos.conf

|

||||

|

||||

If you installed LibreQoS using the complex (Git) installation, you can copy the lqosd daemon configuration file to `/etc`. This is not necessary if you installed using the .deb:

|

||||

|

||||

```shell
cd /opt/libreqos/src
sudo cp lqos.example /etc/lqos.conf
```

|

||||

|

||||

Now edit the file to match your setup with

|

||||

|

||||

```shell
sudo nano /etc/lqos.conf
```

|

||||

|

||||

In the ```[bridge]``` section, change `to_internet` and `to_network` to match your network interfaces.

|

||||

- `to_internet = "enp1s0f1"`

|

||||

- `to_network = "enp1s0f2"`

|

||||

|

||||

Then, if using Bifrost/XDP, set `use_xdp_bridge = true` under that same `[bridge]` section. If you're not sure whether you need this, we recommend leaving it as `false`.

|

||||

|

||||

- Set downlink_bandwidth_mbps and uplink_bandwidth_mbps to match the bandwidth in Mbps of your network's upstream / WAN internet connection. The same can be done for generated_pn_download_mbps and generated_pn_upload_mbps.

|

||||

- to_internet would be the interface facing your edge router and the broader internet

|

||||

- to_network would be the interface facing your core router (or bridged internal network if your network is bridged)

|

||||

|

||||

Note: If you find that traffic is not being shaped when it should, please make sure to swap the interface order and restart lqosd as well as lqos_scheduler with ```sudo systemctl restart lqosd lqos_scheduler```.

|

||||

|

||||

After changing any part of `/etc/lqos.conf` it is highly recommended to always restart lqosd, using `sudo systemctl restart lqosd`. This re-parses any new values in lqos.conf, making those new values accessible to both the Rust and Python sides of the code.

|

||||

|

||||

### Integrations

|

||||

|

||||

Learn more about [configuring integrations here](../TechnicalDocs/integrations.md).

|

||||

|

||||

## Network.json

|

||||

|

||||

Network.json allows ISP operators to define a Hierarchical Network Topology, or Flat Network Topology.

|

||||

|

||||

If you plan to use the built-in UISP or Splynx integrations, you do not need to create a network.json file quite yet.

|

||||

If you plan to use the built-in UISP integration, it will create this automatically on its first run (assuming network.json is not already present).

|

||||

|

||||

If you will not be using an integration, you can manually define the network.json following the template file - network.example.json

|

||||

|

||||

```text
+-----------------------------------------------------------------------+
| Entire Network                                                        |
+-----------------------+-----------------------+-----------------------+
| Parent Node A         | Parent Node B         | Parent Node C         |
+-----------------------+-------+-------+-------+-----------------------+
| Parent Node D | Sub 3 | Sub 4 | Sub 5 | Sub 6 | Sub 7 | Parent Node F |
+-------+-------+-------+-------+-------+-------+-------+-------+-------+
| Sub 1 | Sub 2 |       |                       |       | Sub 8 | Sub 9 |
+-------+-------+-------+-----------------------+-------+-------+-------+
```
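As a rough illustration, the parent nodes in the diagram above could be expressed as nested JSON. The Python sketch below builds such a structure and prints it. The key names (`downloadBandwidthMbps`, `uploadBandwidthMbps`, `children`) and all bandwidth figures are assumptions made for this example; check them against the network.example.json template before relying on them. Subscribers (Sub 1 to Sub 9) are not listed here, since they are attached to parent nodes through ShapedDevices.csv.

```python
import json


def node(download_mbps, upload_mbps, children=None):
    """Build one parent-node entry (key names are assumed, see above)."""
    entry = {
        "downloadBandwidthMbps": download_mbps,
        "uploadBandwidthMbps": upload_mbps,
    }
    if children:
        entry["children"] = children
    return entry


# Parent Node D sits under A and Parent Node F under C, as in the diagram.
# The bandwidth values are placeholders.
network = {
    "Parent Node A": node(1000, 1000, {"Parent Node D": node(500, 500)}),
    "Parent Node B": node(1000, 1000),
    "Parent Node C": node(1000, 1000, {"Parent Node F": node(500, 500)}),
}


def count_nodes(tree):
    """Count every parent node in the hierarchy, at any depth."""
    return sum(
        1 + count_nodes(entry.get("children", {}))
        for entry in tree.values()
    )


network_json = json.dumps(network, indent=2)
print(network_json)
```

The sketch only prints the JSON; writing it to network.json is left to the operator so that an existing file is not overwritten by accident.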

|

||||

|

||||

For networks with no Parent Nodes (no strictly defined Access Points or Sites) edit the network.json to use a Flat Network Topology with

|

||||

```nano network.json```

|

||||

setting the following file content:

|

||||

|

||||

```json
{}
```

|

||||

|

||||

## CSV to JSON format helper

|

||||

|

||||

You can use

|

||||

|

||||

```shell
python3 csvToNetworkJSON.py
```

|

||||

|

||||

to convert manualNetwork.csv to a network.json file.

|

||||

manualNetwork.csv can be copied from the template file, manualNetwork.template.csv

|

||||

|

||||

Note: The parent node name must match that used for clients in ShapedDevices.csv

|

||||

|

||||

## ShapedDevices.csv

|

||||

|

||||

If you are using an integration, this file will be automatically generated. If you are not using an integration, you can manually edit the file using either the WebUI or by directly editing the ShapedDevices.csv file through the CLI.

|

||||

|

||||

### Manual Editing by WebUI

|

||||

Navigate to the LibreQoS WebUI (http://a.b.c.d:9123) and select Configuration > Shaped Devices.

|

||||

|

||||

### Manual Editing by CLI

|

||||

|

||||

- Modify the ShapedDevices.csv file using your preferred spreadsheet editor (LibreOffice Calc, Excel, etc), following the template file - ShapedDevices.example.csv

|

||||

- Circuit ID is required. Must be a string of some sort (int is fine, gets parsed as string). Must NOT include any number symbols (#). Every circuit needs a unique CircuitID - they cannot be reused. Here, circuit essentially means customer location. If a customer has multiple locations on different parts of your network, use a unique CircuitID for each of those locations.

|

||||

- At least one IPv4 address or IPv6 address is required for each entry.

|

||||

- The Access Point or Site name should be set in the Parent Node field. Parent Node can be left blank for flat networks.

|

||||

- The ShapedDevices.csv file allows you to set minimum guaranteed, and maximum allowed bandwidth per subscriber.

|

||||

- The minimum allowed plan rates for Circuits are 2Mbit. Bandwidth min and max should both be above that threshold.

|

||||

- Recommendation: set the min bandwidth to something like 25/10 and max to 1.15X advertised plan rate by using bandwidthOverheadFactor = 1.15

|

||||

- This way, when an AP hits its ceiling, users have any remaining AP capacity fairly distributed between them.

|

||||

- Ensure a reasonable bandwidth minimum for every subscriber, allowing them to utilize up to the maximum provided when AP utilization is below 100%.
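Some of the rules above can be checked before LibreQoS reads the file. The following Python sketch is not part of LibreQoS: the column names are assumptions modeled on the template and should be compared with ShapedDevices.example.csv, and the Circuit ID uniqueness rule is not checked here.

```python
import csv
import io

MIN_RATE_MBPS = 2.0  # minimum plan rate stated above

# Assumed column names; verify against ShapedDevices.example.csv.
RATE_COLUMNS = (
    "Download Min Mbps",
    "Upload Min Mbps",
    "Download Max Mbps",
    "Upload Max Mbps",
)


def validate_row(row):
    """Return a list of problems found in one CSV row (empty if none)."""
    problems = []
    circuit_id = (row.get("Circuit ID") or "").strip()
    if not circuit_id:
        problems.append("Circuit ID is required")
    if "#" in circuit_id:
        problems.append("Circuit ID must not contain '#'")
    ipv4 = (row.get("IPv4") or "").strip()
    ipv6 = (row.get("IPv6") or "").strip()
    if not (ipv4 or ipv6):
        problems.append("at least one IPv4 or IPv6 address is required")
    for column in RATE_COLUMNS:
        try:
            rate = float(row.get(column) or "")
        except ValueError:
            problems.append(f"{column} is not a number")
            continue
        if rate < MIN_RATE_MBPS:
            problems.append(f"{column} is below {MIN_RATE_MBPS} Mbps")
    return problems


def validate_csv(text):
    """Map 1-based data row numbers to their problems, skipping clean rows."""
    reader = csv.DictReader(io.StringIO(text))
    report = {}
    for number, row in enumerate(reader, start=1):
        problems = validate_row(row)
        if problems:
            report[number] = problems
    return report


def max_rate_with_overhead(plan_mbps, factor=1.15):
    """Apply the recommended 1.15x overhead to an advertised plan rate."""
    return round(plan_mbps * factor, 2)
```

For a 100 Mbps plan, `max_rate_with_overhead(100)` gives the 115 Mbps ceiling implied by the 1.15 factor recommended above.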

|

||||

|

||||

Note regarding SLAs: For customers with SLA contracts that guarantee them a minimum bandwidth, set their plan rate as the minimum bandwidth. That way when an AP approaches its ceiling, SLA customers will always get that amount.

|

||||

|

||||

|

||||

|

||||

Once your configuration is complete, you're ready to run the application and start the [Daemons](./services-and-run.md)

|

||||

46

docs/v1.5/Quickstart/networkdesignassumptions.md

Normal file

@ -0,0 +1,46 @@

|

||||

# Network Design Assumptions

|

||||

|

||||

## Officially supported configuration

|

||||

|

||||

- LibreQoS placed inline in network, usually between an edge router (NAT, firewall) and core router (distribution to sites across network).

|

||||

- If you use NAT/CG-NAT, place LibreQoS inline south of where NAT is applied, as LibreQoS needs to shape internal addresses (100.64.0.0/10) not public post-NAT IPs.

|

||||

- Edge and Core routers should have 1500 MTU on links between them

|

||||

- If you use MPLS, you would terminate MPLS traffic at the core router. LibreQoS cannot decapsulate MPLS on its own.

|

||||

- OSPF primary link (low cost) through the server running LibreQoS

|

||||

- OSPF backup link (high cost, maybe 200 for example)
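To sanity-check that the addresses you plan to shape are internal (pre-NAT) ones, a short Python check with the standard `ipaddress` module can help. This is a sketch under assumptions: the helper name `is_internal` is hypothetical, the list covers only the RFC 1918 private ranges plus the RFC 6598 shared address space (100.64.0.0/10) used for CG-NAT, and IPv6 addresses are simply reported as not internal.

```python
import ipaddress

# RFC 1918 private ranges plus the RFC 6598 CG-NAT shared address space.
INTERNAL_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
    ipaddress.ip_network("100.64.0.0/10"),
]


def is_internal(address):
    """Return True if an IPv4 address falls in one of the internal ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in INTERNAL_RANGES)


for candidate in ("100.64.0.10", "192.168.1.20", "8.8.8.8"):
    print(candidate, is_internal(candidate))
```

If most subscriber addresses seen by LibreQoS fail this check, the box is probably sitting north of the NAT device rather than south of it.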

|

||||

|

||||

|

||||

|

||||

## Testbed configuration

|

||||

When you are first testing out LibreQoS, we recommend deploying a small-scale testbed to see it in action.

|

||||

|

||||

|

||||

### Network Interface Card

|

||||

|

||||

```{note}
You must have one of these:
- single NIC with two interfaces,
- two NICs with single interface,
- 2x VLANs interface (using one or two NICs).
```

|

||||

|

||||

LibreQoS requires NICs to have 2 or more RX/TX queues and XDP support. While many cards theoretically meet these requirements, less commonly used cards tend to have unreported driver bugs which impede XDP functionality and make them unusable for our purposes. At this time we recommend the Intel x520, Intel x710, and Nvidia (ConnectX-5 or newer) NICs. We cannot guarantee compatibility with other cards.
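One way to check the queue requirement on a running Linux host is to count the `rx-N` and `tx-N` entries that the kernel exposes under `/sys/class/net/<interface>/queues`. The Python sketch below does that; the function names are hypothetical, and a passing result says nothing about XDP support or driver quality, which still need to be verified separately.

```python
import os
import re


def count_queues(interface, sysfs_root="/sys/class/net"):
    """Return (rx_queues, tx_queues) for a network interface."""
    queue_dir = os.path.join(sysfs_root, interface, "queues")
    names = os.listdir(queue_dir)
    rx = sum(1 for name in names if re.fullmatch(r"rx-\d+", name))
    tx = sum(1 for name in names if re.fullmatch(r"tx-\d+", name))
    return rx, tx


def has_enough_queues(interface, minimum=2, sysfs_root="/sys/class/net"):
    """Check the 'two or more RX/TX queues' requirement stated above."""
    rx, tx = count_queues(interface, sysfs_root)
    return rx >= minimum and tx >= minimum
```

For example, `has_enough_queues("enp1s0f1")` could be run for each shaping interface, with the interface name replaced by your own.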

|

||||

|

||||

## Alternate configuration (Not officially supported)

|

||||

|

||||

This alternate configuration uses Spanning Tree Protocol (STP) to modify the data path in the event the LibreQoS device is offline for maintenance or another problem.

|

||||

|

||||

```{note}
Most of the same considerations apply to the alternate configuration as they do to the officially supported configuration
```

|

||||

|

||||

- LibreQoS placed inline in network, usually between an edge router (NAT, firewall) and core router (distribution to sites across network).

|

||||

- If you use NAT/CG-NAT, place LibreQoS inline south of where NAT is applied, as LibreQoS needs to shape internal addresses (100.64.0.0/10) not public post-NAT IPs.

|

||||

- Edge router and Core switch should have 1500 MTU on links between them

|

||||

- If you use MPLS, you would terminate MPLS traffic somewhere south of the core/distribution switch. LibreQoS cannot decapsulate MPLS on its own.

|

||||

- Spanning Tree primary link (low cost) through the server running LibreQoS

|

||||

- Spanning Tree backup link (high cost, maybe 80 for example)

|

||||

|

||||

Keep in mind that if you use different bandwidth links, for example, 10 Gbps through LibreQoS, and 1 Gbps between core switch and edge router, you may need to be more intentional with your STP costs.

|

||||

|

||||

|

||||

24

docs/v1.5/Quickstart/quickstart-libreqos-1.5.md

Normal file

@ -0,0 +1,24 @@

|

||||

# Install LibreQoS 1.5

|

||||

|

||||

## Step 1 - Complete The Prerequisites

|

||||

|

||||

[LibreQoS Installation Prerequisites](quickstart-prereq.md)

|

||||

|

||||

## Step 2 - Install

|

||||

|

||||

### Download .DEB Package (Recommended Method)

|

||||

|

||||

Download the latest .deb from https://libreqos.io/#download .

|

||||

|

||||

Unzip the .zip file and transfer the .deb to your LibreQoS box, installing with:

|

||||

```
sudo apt install [deb file name]
```

|

||||

|

||||

### Git Install (For Developers Only - Not Recommended)

|

||||

|

||||

[Complex Installation](../TechnicalDocs/git-install.md)

|

||||

|

||||

## Step 3 - Configure

|

||||

|

||||

You are now ready to [Configure](./configuration.md) LibreQoS!

|

||||

141

docs/v1.5/Quickstart/quickstart-prereq.md

Normal file

@ -0,0 +1,141 @@

|

||||

# Server Setup - Pre-requisites

|

||||

|

||||

Disable hyperthreading in the BIOS/UEFI of your host system. Hyperthreading is also known as Simultaneous Multi Threading (SMT) on AMD systems. Disabling this is very important for optimal performance of the XDP cpumap filtering and, in turn, throughput and latency.

|

||||

|

||||

- Boot, pressing the appropriate key to enter the BIOS settings

|

||||

- For AMD systems, you will have to navigate the settings to find the "SMT Control" setting. Usually it is under something like ```Advanced -> AMD CBS -> CPU Common Options -> Thread Enablement -> SMT Control``` Once you find it, switch to "Disabled" or "Off"

|

||||

- For Intel systems, you will also have to navigate the settings to find the "hyperthreading" toggle option. On HP servers it's under ```System Configuration > BIOS/Platform Configuration (RBSU) > Processor Options > Intel (R) Hyperthreading Options.```

|

||||

- Save changes and reboot

|

||||

|

||||

## Install Ubuntu Server

|

||||

|

||||

We recommend Ubuntu Server because its kernel version tends to track closely with the mainline Linux releases. Our current documentation assumes Ubuntu Server. To run LibreQoS v1.4, Linux kernel 5.11 or greater is required, as 5.11 includes some important XDP patches. Ubuntu Server 22.04 uses kernel 5.15, which meets that requirement.

|

||||

|

||||

You can download Ubuntu Server 22.04 from <a href="https://ubuntu.com/download/server">https://ubuntu.com/download/server</a>.

|

||||

|

||||

1. Boot Ubuntu Server from USB.

|

||||

2. Follow the steps to install Ubuntu Server.

|

||||

3. If you use a Mellanox network card, the Ubuntu Server installer will ask you whether to install the mellanox/intel NIC drivers. Check the box to confirm. This extra driver is important.

|

||||

4. On the Networking settings step, it is recommended to assign a static IP address to the management NIC.

|

||||

5. Ensure SSH server is enabled so you can more easily log into the server later.

|

||||

6. You can use scp or sftp to access files from your LibreQoS server for easier file editing. Here's how to access via scp or sftp using an [Ubuntu](https://www.addictivetips.com/ubuntu-linux-tips/sftp-server-ubuntu/) or [Windows](https://winscp.net/eng/index.php) machine.

|

||||

|

||||

### Choose Bridge Type

|

||||

|

||||

There are two options for the bridge to pass data through your two interfaces:

|

||||

|

||||

- Bifrost XDP-Accelerated Bridge

|

||||

- Regular Linux Bridge

|

||||

|

||||

|

||||

The regular Linux bridge is recommended for Nvidia/Mellanox NICs such as the ConnectX-5 series (which have superior bridge performance), and VM setups using virtualized NICs. The Bifrost Bridge is recommended for Intel NICs with XDP support, such as the X520 and X710.

|

||||

|

||||

To use the Bifrost bridge, be sure to enable Bifrost/XDP in lqos.conf in the [Configuration](configuration.md) section.

|

||||

|

||||

Below are the instructions to configure Netplan, whether using the Linux Bridge or Bifrost XDP bridge:

|

||||

|

||||

## Netplan config

|

||||

|

||||

### Netplan for a regular Linux bridge (if not using Bifrost XDP bridge)

|

||||

|

||||

From the Ubuntu VM, create a linux interface bridge - br0 - with the two shaping interfaces.

|

||||

Find your existing .yaml file in /etc/netplan/ with

|

||||

|

||||

```shell
cd /etc/netplan/
ls
```

|

||||

|

||||

Then edit the .yaml file there with

|

||||

|

||||

```shell
sudo nano XX-cloud-init.yaml
```

|

||||

|

||||

With XX corresponding to the name of the existing file.

|

||||

|

||||

Editing the .yaml file, we need to define the shaping interfaces (here, ens19 and ens20) and add the bridge with those two interfaces. Assuming your interfaces are ens18, ens19, and ens20, here is what your file might look like:

|

||||

|

||||

```yaml
# This is the network config written by 'subiquity'
network:
  ethernets:
    ens18:
      addresses:
      - (addr goes here)
      gateway4: (gateway goes here)
      nameservers:
        addresses:
        - 1.1.1.1
        - 8.8.8.8
        search: []
    ens19:
      dhcp4: no
      dhcp6: no
    ens20:
      dhcp4: no
      dhcp6: no
  version: 2
  bridges:
    br0:
      interfaces:
        - ens19
        - ens20
```

|

||||

|

||||

By setting `dhcp4: no` and `dhcp6: no`, the interfaces will be brought up as part of the normal boot cycle, despite not having IP addresses assigned.

|

||||

|

||||

Make sure to replace `(addr goes here)` with your LibreQoS VM's address and subnet CIDR, and to replace `(gateway goes here)` with whatever your default gateway is.

|

||||

|

||||

Then run

|

||||

|

||||

```shell
sudo netplan apply
```

|

||||

|

||||

### Netplan for the Bifrost XDP bridge

|

||||

|

||||

Find your existing .yaml file in /etc/netplan/ with

|

||||

|

||||

```shell
cd /etc/netplan/
ls
```

|

||||

|

||||

Then edit the .yaml file there with

|

||||

|

||||

```shell
sudo nano XX-cloud-init.yaml
```

|

||||

|

||||

With XX corresponding to the name of the existing file.

|

||||

|

||||

Editing the .yaml file, we need to define the shaping interfaces (here, ens19 and ens20) and add the bridge with those two interfaces. Assuming your interfaces are ens18, ens19, and ens20, here is what your file might look like:

|

||||

|

||||

```
network:
  ethernets:
    ens18:
      addresses:
      - (addr goes here)
      gateway4: (gateway goes here)
      nameservers:
        addresses:
        - (etc)
        search: []
    ens19:
      dhcp4: no
      dhcp6: no
    ens20:
      dhcp4: no
      dhcp6: no
```

|

||||

|

||||

By setting `dhcp4: no` and `dhcp6: no`, the interfaces will be brought up as part of the normal boot cycle, despite not having IP addresses assigned.

|

||||

|

||||

Make sure to replace (addr goes here) with your LibreQoS VM's address and subnet CIDR, and to replace `(gateway goes here)` with whatever your default gateway is.

|

||||

Once everything is in place, run:

|

||||

|

||||

```shell
sudo netplan apply
```

|

||||

69

docs/v1.5/Quickstart/services-and-run.md

Normal file

@ -0,0 +1,69 @@

|

||||

# LibreQoS daemons

|

||||

|

||||

lqosd

|

||||

|

||||

- Manages actual XDP code. Built with Rust.

|

||||

- Runs the GUI available at http://a.b.c.d:9123

|

||||

|

||||

lqos_scheduler

|

||||

|

||||

- lqos_scheduler handles statistics and performs continuous refreshes of LibreQoS' shapers, including pulling from any enabled CRM Integrations (UISP, Splynx).

|

||||

- On start: Run a full setup of queues

|

||||

- Every 30 minutes: Update queues, pulling new configuration from CRM integration if enabled

|

||||

- Minute interval is adjustable with the setting `queue_refresh_interval_mins` in `/etc/lqos.conf`.

|

||||

|

||||

## Run daemons with systemd

|

||||

|

||||

Note: If you used the .deb installer, you can skip this section. The .deb installer automatically sets these up.

|

||||

|

||||

You can set up `lqosd` and `lqos_scheduler` as systemd services.

|

||||

|

||||

```shell
sudo cp /opt/libreqos/src/bin/lqosd.service.example /etc/systemd/system/lqosd.service
sudo cp /opt/libreqos/src/bin/lqos_scheduler.service.example /etc/systemd/system/lqos_scheduler.service
```

|

||||

|

||||

Finally, run

|

||||

|

||||

```shell
sudo systemctl daemon-reload
sudo systemctl enable lqosd lqos_scheduler
```

|

||||

|

||||

You can now point a web browser at `http://a.b.c.d:9123` (replace `a.b.c.d` with the management IP address of your shaping server) and enjoy a real-time view of your network.

|

||||

|

||||

## Debugging lqos_scheduler

|

||||

|

||||

In the background, lqos_scheduler runs scheduler.py, which in turn runs LibreQoS.py

|

||||

|

||||

One-time runs of these individual components can be very helpful for debugging and to make sure everything is correctly configured.

|

||||

|

||||

First, stop lqos_scheduler

|

||||

|

||||

```shell
sudo systemctl stop lqos_scheduler
```

|

||||

|

||||

For one-time runs of LibreQoS.py, use

|

||||

|

||||

```shell
sudo ./LibreQoS.py
```

|

||||

|

||||

- To use the debug mode with more verbose output, use:

|

||||

|

||||

```shell
sudo ./LibreQoS.py --debug
```

|

||||

|

||||

To confirm that lqos_scheduler (scheduler.py) is able to work correctly, run:

|

||||

|

||||

```shell
sudo python3 scheduler.py
```

|

||||

|

||||

Once you have any errors eliminated, restart lqos_scheduler with

|

||||

|

||||

```shell
sudo systemctl start lqos_scheduler
```

|

||||

31

docs/v1.5/Quickstart/share.md

Normal file

@ -0,0 +1,31 @@

|

||||

# Share your before and after

|