Mirror of https://github.com/opentofu/opentofu.git (synced 2025-02-25 18:45:20 -06:00)

Merge branch 'main' into update-internal/configs

commit 58853d209c

@ -11,7 +11,7 @@
 # built by the (closed-source) official release process.

 FROM docker.mirror.hashicorp.services/golang:alpine
-LABEL maintainer="HashiCorp Terraform Team <terraform@hashicorp.com>"
+LABEL maintainer="OpenTF Team <opentf@opentf.org>"

 RUN apk add --no-cache git bash openssh

Makefile
@ -5,7 +5,7 @@ generate:
 go generate ./...

 # We separate the protobuf generation because most development tasks on
-# Terraform do not involve changing protobuf files and protoc is not a
+# OpenTF do not involve changing protobuf files and protoc is not a
 # go-gettable dependency and so getting it installed can be inconvenient.
 #
 # If you are working on changes to protobuf interfaces, run this Makefile
@ -43,4 +43,4 @@ website/build-local:
 # under parallel conditions.
 .NOTPARALLEL:

-.PHONY: fmtcheck importscheck generate protobuf staticcheck website website/local website/build-local
+.PHONY: fmtcheck importscheck generate protobuf staticcheck website website/local website/build-local

@ -1,23 +1,23 @@
-# Terraform Core Codebase Documentation
+# OpenTF Core Codebase Documentation

-This directory contains some documentation about the Terraform Core codebase,
+This directory contains some documentation about the OpenTF Core codebase,
 aimed at readers who are interested in making code contributions.

-If you're looking for information on _using_ Terraform, please instead refer
-to [the main Terraform CLI documentation](https://www.terraform.io/docs/cli/index.html).
+If you're looking for information on _using_ OpenTF, please instead refer
+to [the main OpenTF CLI documentation](https://www.terraform.io/docs/cli/index.html).

-## Terraform Core Architecture Documents
+## OpenTF Core Architecture Documents

-* [Terraform Core Architecture Summary](./architecture.md): an overview of the
-main components of Terraform Core and how they interact. This is the best
+* [OpenTF Core Architecture Summary](./architecture.md): an overview of the
+main components of OpenTF Core and how they interact. This is the best
 starting point if you are diving in to this codebase for the first time.

 * [Resource Instance Change Lifecycle](./resource-instance-change-lifecycle.md):
 a description of the steps in validating, planning, and applying a change
 to a resource instance, from the perspective of the provider plugin RPC
 operations. This may be useful for understanding the various expectations
-Terraform enforces about provider behavior, either if you intend to make
-changes to those behaviors or if you are implementing a new Terraform plugin
+OpenTF enforces about provider behavior, either if you intend to make
+changes to those behaviors or if you are implementing a new OpenTF plugin
 SDK and so wish to conform to them.

 (If you are planning to write a new provider using the _official_ SDK then
@ -31,10 +31,10 @@ to [the main Terraform CLI documentation](https://www.terraform.io/docs/cli/inde
 This documentation is for SDK developers, and is not necessary reading for
 those implementing a provider using the official SDK.

-* [How Terraform Uses Unicode](./unicode.md): an overview of the various
-features of Terraform that rely on Unicode and how to change those features
+* [How OpenTF Uses Unicode](./unicode.md): an overview of the various
+features of OpenTF that rely on Unicode and how to change those features
 to adopt new versions of Unicode.

 ## Contribution Guides

-* [Contributing to Terraform](../.github/CONTRIBUTING.md): a complete guideline for those who want to contribute to this project.
+* [Contributing to OpenTF](../.github/CONTRIBUTING.md): a complete guideline for those who want to contribute to this project.

@ -1,26 +1,26 @@
-# Terraform Core Architecture Summary
+# OpenTF Core Architecture Summary

-This document is a summary of the main components of Terraform Core and how
+This document is a summary of the main components of OpenTF Core and how
 data and requests flow between these components. It's intended as a primer
 to help navigate the codebase to dig into more details.

-We assume some familiarity with user-facing Terraform concepts like
-configuration, state, CLI workflow, etc. The Terraform website has
+We assume some familiarity with user-facing OpenTF concepts like
+configuration, state, CLI workflow, etc. The OpenTF website has
 documentation on these ideas.

-## Terraform Request Flow
+## OpenTF Request Flow

 The following diagram shows an approximation of how a user command is
-executed in Terraform:
+executed in OpenTF:

[architecture diagram]

 Each of the different subsystems (solid boxes) in this diagram is described
 in more detail in a corresponding section below.

 ## CLI (`command` package)

-Each time a user runs the `terraform` program, aside from some initial
+Each time a user runs the `opentf` program, aside from some initial
 bootstrapping in the root package (not shown in the diagram) execution
 transfers immediately into one of the "command" implementations in
 [the `command` package](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/command).
@ -29,8 +29,8 @@ their corresponding `command` package types can be found in the `commands.go`
 file in the root of the repository.

 The full flow illustrated above does not actually apply to _all_ commands,
-but it applies to the main Terraform workflow commands `terraform plan` and
-`terraform apply`, along with a few others.
+but it applies to the main OpenTF workflow commands `opentf plan` and
+`opentf apply`, along with a few others.

 For these commands, the role of the command implementation is to read and parse
 any command line arguments, command line options, and environment variables
@ -62,18 +62,18 @@ the command-handling code calls `Operation` with the operation it has
 constructed, and then the backend is responsible for executing that action.

 Backends that execute operations, however, do so as an architectural implementation detail and not a
-general feature of backends. That is, the term 'backend' as a Terraform feature is used to refer to
-a plugin that determines where Terraform stores its state snapshots - only the default `local`
+general feature of backends. That is, the term 'backend' as a OpenTF feature is used to refer to
+a plugin that determines where OpenTF stores its state snapshots - only the default `local`
 backend and Terraform Cloud's backends (`remote`, `cloud`) perform operations.

 Thus, most backends do _not_ implement this interface, and so the `command` package wraps these
 backends in an instance of
 [`local.Local`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/backend/local#Local),
-causing the operation to be executed locally within the `terraform` process itself.
+causing the operation to be executed locally within the `opentf` process itself.

 ## Backends

-A _backend_ determines where Terraform should store its state snapshots.
+A _backend_ determines where OpenTF should store its state snapshots.

 As described above, the `local` backend also executes operations on behalf of most other
 backends. It uses a _state manager_
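The fallback described in this hunk works like Go's optional-interface idiom. A backend that cannot run operations itself is wrapped so that the operation runs inside the `opentf` process. The sketch below is a minimal illustration under that assumption only. `Backend`, `OperationsBackend`, `stateOnly`, `remoteRunner`, `localRunner` and `withOperations` are all hypothetical names. They are not the real `backend` package or `local.Local` API.

```go
package main

import "fmt"

// Backend is a hypothetical minimal backend: it only knows where state lives.
type Backend interface {
	StateLocation() string
}

// OperationsBackend is a hypothetical optional interface for backends that
// can also execute operations such as plan and apply.
type OperationsBackend interface {
	Backend
	Operation(name string) string
}

// stateOnly stands in for a backend that only stores state snapshots.
type stateOnly struct{ loc string }

func (b stateOnly) StateLocation() string { return b.loc }

// remoteRunner stands in for a backend that runs operations itself.
type remoteRunner struct{}

func (remoteRunner) StateLocation() string { return "remote service" }

func (remoteRunner) Operation(name string) string { return "running " + name + " remotely" }

// localRunner wraps a state-only backend: it runs operations in-process and
// delegates state storage to the embedded backend.
type localRunner struct{ Backend }

func (l localRunner) Operation(name string) string {
	return fmt.Sprintf("running %s locally, state in %s", name, l.StateLocation())
}

// withOperations returns b unchanged if it can already execute operations,
// and otherwise wraps it so that operations run locally.
func withOperations(b Backend) OperationsBackend {
	if ob, ok := b.(OperationsBackend); ok {
		return ob
	}
	return localRunner{Backend: b}
}

func main() {
	fmt.Println(withOperations(stateOnly{loc: "example-bucket/state"}).Operation("plan"))
	// running plan locally, state in example-bucket/state
	fmt.Println(withOperations(remoteRunner{}).Operation("apply"))
	// running apply remotely
}
```

Embedding `Backend` in `localRunner` means the wrapper still satisfies `Backend`, so callers can treat wrapped and unwrapped backends uniformly.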

@ -86,7 +86,7 @@ initial processing/validation of the configuration specified in the
 operation. It then uses these, along with the other settings given in the
 operation, to construct a
 [`terraform.Context`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/terraform#Context),
-which is the main object that actually performs Terraform operations.
+which is the main object that actually performs OpenTF operations.

 The `local` backend finally calls an appropriate method on that context to
 begin execution of the relevant command, such as
@ -109,13 +109,13 @@ configuration objects, but the main entry point is in the sub-package
 via
 [`configload.Loader`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/configs/configload#Loader).
 A loader deals with all of the details of installing child modules
-(during `terraform init`) and then locating those modules again when a
+(during `opentf init`) and then locating those modules again when a
 configuration is loaded by a backend. It takes the path to a root module
 and recursively loads all of the child modules to produce a single
 [`configs.Config`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/configs#Config)
 representing the entire configuration.

-Terraform expects configuration files written in the Terraform language, which
+OpenTF expects configuration files written in the OpenTF language, which
 is a DSL built on top of
 [HCL](https://github.com/hashicorp/hcl). Some parts of the configuration
 cannot be interpreted until we build and walk the graph, since they depend
@ -124,12 +124,12 @@ the configuration remain represented as the low-level HCL types
 [`hcl.Body`](https://pkg.go.dev/github.com/hashicorp/hcl/v2/#Body)
 and
 [`hcl.Expression`](https://pkg.go.dev/github.com/hashicorp/hcl/v2/#Expression),
-allowing Terraform to interpret them at a more appropriate time.
+allowing OpenTF to interpret them at a more appropriate time.

 ## State Manager

 A _state manager_ is responsible for storing and retrieving snapshots of the
-[Terraform state](https://www.terraform.io/docs/language/state/index.html)
+[OpenTF state](https://www.terraform.io/docs/language/state/index.html)
 for a particular workspace. Each manager is an implementation of
 some combination of interfaces in
 [the `statemgr` package](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/states/statemgr),
@ -144,7 +144,7 @@ that does not implement all of `statemgr.Full`.
 The implementation
 [`statemgr.Filesystem`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/states/statemgr#Filesystem) is used
 by default (by the `local` backend) and is responsible for the familiar
-`terraform.tfstate` local file that most Terraform users start with, before
+`terraform.tfstate` local file that most OpenTF users start with, before
 they switch to [remote state](https://www.terraform.io/docs/language/state/remote.html).
 Other implementations of `statemgr.Full` are used to implement remote state.
 Each of these saves and retrieves state via a remote network service
@ -166,12 +166,12 @@ to represent the necessary steps for that operation and the dependency
 relationships between them.

 In most cases, the
-[vertices](https://en.wikipedia.org/wiki/Vertex_(graph_theory)) of Terraform's
+[vertices](https://en.wikipedia.org/wiki/Vertex_(graph_theory)) of OpenTF's
 graphs each represent a specific object in the configuration, or something
 derived from those configuration objects. For example, each `resource` block
 in the configuration has one corresponding
 [`GraphNodeConfigResource`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/terraform#GraphNodeConfigResource)
-vertex representing it in the "plan" graph. (Terraform Core uses terminology
+vertex representing it in the "plan" graph. (OpenTF Core uses terminology
 inconsistently, describing graph _vertices_ also as graph _nodes_ in various
 places. These both describe the same concept.)

@ -228,7 +228,7 @@ itself is implemented in
 [the low-level `dag` package](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/dag#AcyclicGraph.Walk)
 (where "DAG" is short for [_Directed Acyclic Graph_](https://en.wikipedia.org/wiki/Directed_acyclic_graph)), in
 [`AcyclicGraph.Walk`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/dag#AcyclicGraph.Walk).
-However, the "interesting" Terraform walk functionality is implemented in
+However, the "interesting" OpenTF walk functionality is implemented in
 [`terraform.ContextGraphWalker`](https://pkg.go.dev/github.com/placeholderplaceholderplaceholder/opentf/internal/terraform#ContextGraphWalker),
 which implements a small set of higher-level operations that are performed
 during the graph walk:

@ -346,7 +346,7 @@ or

 Expression evaluation produces a dynamic value represented as a
 [`cty.Value`](https://pkg.go.dev/github.com/zclconf/go-cty/cty#Value).
-This Go type represents values from the Terraform language and such values
+This Go type represents values from the OpenTF language and such values
 are eventually passed to provider plugins.

 ### Sub-graphs

@ -1,4 +1,4 @@
-# Terraform Core Resource Destruction Notes
+# OpenTF Core Resource Destruction Notes

 This document intends to describe some of the details and complications
 involved in the destruction of resources. It covers the ordering defined for
@ -8,7 +8,7 @@ all possible combinations of dependency ordering, only to outline the basics
 and document some of the more complicated aspects of resource destruction.

 The graph diagrams here will continue to use the inverted graph structure used
-internally by Terraform, where edges represent dependencies rather than order
+internally by OpenTF, where edges represent dependencies rather than order
 of operations.

 ## Simple Resource Creation

@ -1,6 +1,6 @@
 # Planning Behaviors

-A key design tenet for Terraform is that any actions with externally-visible
+A key design tenet for OpenTF is that any actions with externally-visible
 side-effects should be carried out via the standard process of creating a
 plan and then applying it. Any new features should typically fit within this
 model.
@ -8,25 +8,25 @@ model.
 There are also some historical exceptions to this rule, which we hope to
 supplement with plan-and-apply-based equivalents over time.

-This document describes the default planning behavior of Terraform in the
+This document describes the default planning behavior of OpenTF in the
 absence of any special instructions, and also describes the three main
 design approaches we can choose from when modelling non-default behaviors that
-require additional information from outside of Terraform Core.
+require additional information from outside of OpenTF Core.

 This document focuses primarily on actions relating to _resource instances_,
-because that is Terraform's main concern. However, these design principles can
+because that is OpenTF's main concern. However, these design principles can
 potentially generalize to other externally-visible objects, if we can describe
 their behaviors in a way comparable to the resource instance behaviors.

 This is developer-oriented documentation rather than user-oriented
 documentation. See
-[the main Terraform documentation](https://www.terraform.io/docs) for
+[the main OpenTF documentation](https://www.terraform.io/docs) for
 information on existing planning behaviors and other behaviors as viewed from
 an end-user perspective.

 ## Default Planning Behavior

-When given no explicit information to the contrary, Terraform Core will
+When given no explicit information to the contrary, OpenTF Core will
 automatically propose taking the following actions in the appropriate
 situations:

@ -52,21 +52,21 @@ situations:
 the configuration (in a `resource` block) and recorded in the prior state
 _marked as "tainted"_. The special "tainted" status means that the process
 of creating the object failed partway through and so the existing object does
-not necessarily match the configuration, so Terraform plans to replace it
+not necessarily match the configuration, so OpenTF plans to replace it
 in order to ensure that the resulting object is complete.
 - **Read**, if there is a `data` block in the configuration.
-- If possible, Terraform will eagerly perform this action during the planning
+- If possible, OpenTF will eagerly perform this action during the planning
 phase, rather than waiting until the apply phase.
 - If the configuration contains at least one unknown value, or if the
 data resource directly depends on a managed resource that has any change
-proposed elsewhere in the plan, Terraform will instead delay this action
+proposed elsewhere in the plan, OpenTF will instead delay this action
 to the apply phase so that it can react to the completion of modification
 actions on other objects.
-- **No-op**, to explicitly represent that Terraform considered a particular
+- **No-op**, to explicitly represent that OpenTF considered a particular
 resource instance but concluded that no action was required.

 The **Replace** action described above is really a sort of "meta-action", which
-Terraform expands into separate **Create** and **Delete** operations. There are
+OpenTF expands into separate **Create** and **Delete** operations. There are
 two possible orderings, and the first one is the default planning behavior
 unless overridden by a special planning behavior as described later. The
 two possible lowerings of **Replace** are:
@ -81,7 +81,7 @@ two possible lowerings of **Replace** are:
 ## Special Planning Behaviors

 For the sake of this document, a "special" planning behavior is one where
-Terraform Core will select a different action than the defaults above,
+OpenTF Core will select a different action than the defaults above,
 based on explicit instructions given either by a module author, an operator,
 or a provider.
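The default **Read** rule from the `@ -52,21 +52,21 @@` hunk reduces to a small predicate. The read happens eagerly during planning unless the configuration has an unknown value or a directly-depended-on managed resource has a change proposed. The Go sketch below is a hypothetical model. `DataSource`, its fields and `readDuringPlan` are illustrative names, not OpenTF's internal types.

```go
package main

import "fmt"

// DataSource is a hypothetical summary of one data resource during planning.
type DataSource struct {
	ConfigHasUnknowns bool     // true if any configured value is unknown at plan time
	DependsOn         []string // managed resources this data resource directly depends on
}

// readDuringPlan reports whether the read can happen eagerly during planning.
// pendingChanges holds the managed resources that have a change proposed
// elsewhere in the plan; depending directly on any of them defers the read
// to the apply phase.
func readDuringPlan(ds DataSource, pendingChanges map[string]bool) bool {
	if ds.ConfigHasUnknowns {
		return false
	}
	for _, dep := range ds.DependsOn {
		if pendingChanges[dep] {
			return false
		}
	}
	return true
}

func main() {
	changing := map[string]bool{"example_network.main": true}
	fmt.Println(readDuringPlan(DataSource{DependsOn: []string{"example_bucket.logs"}}, changing))  // true
	fmt.Println(readDuringPlan(DataSource{DependsOn: []string{"example_network.main"}}, changing)) // false
	fmt.Println(readDuringPlan(DataSource{ConfigHasUnknowns: true}, changing))                     // false
}
```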

@ -107,27 +107,27 @@ of the following depending on which stakeholder is activating the behavior:
 "automatic".

 Because these special behaviors are activated by values in the provider's
-response to the planning request from Terraform Core, behaviors of this
+response to the planning request from OpenTF Core, behaviors of this
 sort will typically represent "tweaks" to or variants of the default
 planning behaviors, rather than entirely different behaviors.
 - [Single-run Behaviors](#single-run-behaviors) are activated by explicitly
-setting additional "plan options" when calling Terraform Core's plan
+setting additional "plan options" when calling OpenTF Core's plan
 operation.

 This design pattern is good for situations where the direct operator of
-Terraform needs to do something exceptional or one-off, such as when the
+OpenTF needs to do something exceptional or one-off, such as when the
 configuration is correct but the real system has become degraded or damaged
-in a way that Terraform cannot automatically understand.
+in a way that OpenTF cannot automatically understand.

 However, this design pattern has the disadvantage that each new single-run
 behavior type requires custom work in every wrapping UI or automaton around
-Terraform Core, in order provide the user of that wrapper some way
+OpenTF Core, in order provide the user of that wrapper some way
 to directly activate the special option, or to offer an "escape hatch" to
-use Terraform CLI directly and bypass the wrapping automation for a
+use OpenTF CLI directly and bypass the wrapping automation for a
 particular change.

 We've also encountered use-cases that seem to call for a hybrid between these
-different patterns. For example, a configuration construct might cause Terraform
+different patterns. For example, a configuration construct might cause OpenTF
 Core to _invite_ a provider to activate a special behavior, but let the
 provider make the final call about whether to do it. Or conversely, a provider
 might advertise the possibility of a special behavior but require the user to
@ -153,36 +153,36 @@ configuration-driven behaviors, selected to illustrate some different variations
 that might be useful inspiration for new designs:

 - The `ignore_changes` argument inside `resource` block `lifecycle` blocks
-tells Terraform that if there is an existing object bound to a particular
-resource instance address then Terraform should ignore the configured value
+tells OpenTF that if there is an existing object bound to a particular
+resource instance address then OpenTF should ignore the configured value
 for a particular argument and use the corresponding value from the prior
 state instead.

 This can therefore potentially cause what would've been an **Update** to be
 a **No-op** instead.
 - The `replace_triggered_by` argument inside `resource` block `lifecycle`
-blocks can use a proposed change elsewhere in a module to force Terraform
+blocks can use a proposed change elsewhere in a module to force OpenTF
 to propose one of the two **Replace** variants for a particular resource.
 - The `create_before_destroy` argument inside `resource` block `lifecycle`
 blocks only takes effect if a particular resource instance has a proposed
-**Replace** action. If not set or set to `false`, Terraform will decompose
-it to **Destroy** then **Create**, but if set to `true` Terraform will use
+**Replace** action. If not set or set to `false`, OpenTF will decompose
+it to **Destroy** then **Create**, but if set to `true` OpenTF will use
 the inverted ordering.

-Because Terraform Core will never select a **Replace** action automatically
+Because OpenTF Core will never select a **Replace** action automatically
 by itself, this is an example of a hybrid design where the config-driven
 `create_before_destroy` combines with any other behavior (config-driven or
 otherwise) that might cause **Replace** to customize exactly what that
 **Replace** will mean.
 - Top-level `moved` blocks in a module activate a special behavior during the
-planning phase, where Terraform will first try to change the bindings of
+planning phase, where OpenTF will first try to change the bindings of
 existing objects in the prior state to attach to new addresses before running
 the normal planning process. This therefore allows a module author to
-document certain kinds of refactoring so that Terraform can update the
+document certain kinds of refactoring so that OpenTF can update the
 state automatically once users upgrade to a new version of the module.

 This special behavior is interesting because it doesn't _directly_ change
-what actions Terraform will propose, but instead it adds an extra
+what actions OpenTF will propose, but instead it adds an extra
 preparation step before the typical planning process which changes the
 addresses that the planning process will consider. It can therefore
 _indirectly_ cause different proposed actions for affected resource
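`create_before_destroy`, as described in the hunk above, only chooses the ordering of a **Replace** that has already been selected. A minimal Go sketch of that lowering follows. It assumes a hypothetical `Action` type rather than OpenTF's real plan action values, and it uses **Delete** for the step the bullet calls **Destroy**.

```go
package main

import "fmt"

// Action is a hypothetical stand-in for a primitive planned action; it is
// not OpenTF's real plan action type.
type Action string

const (
	Create Action = "Create"
	Delete Action = "Delete"
)

// lowerReplace decomposes a Replace "meta-action" into its two primitive
// actions. createBeforeDestroy mirrors the lifecycle argument of the same
// name: false (the default) deletes the old object first, true creates the
// new object first.
func lowerReplace(createBeforeDestroy bool) []Action {
	if createBeforeDestroy {
		return []Action{Create, Delete}
	}
	return []Action{Delete, Create}
}

func main() {
	fmt.Println(lowerReplace(false)) // [Delete Create]
	fmt.Println(lowerReplace(true))  // [Create Delete]
}
```

The function takes no input about *why* a **Replace** was chosen. This matches the hybrid design the text describes: `create_before_destroy` customizes a **Replace** triggered by some other behavior.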

@ -201,13 +201,13 @@ Providers get an opportunity to activate some special behaviors for a particular
 resource instance when they respond to the `PlanResourceChange` function of
 the provider plugin protocol.

-When Terraform Core executes this RPC, it has already selected between
+When OpenTF Core executes this RPC, it has already selected between
 **Create**, **Delete**, or **Update** actions for the particular resource
 instance, and so the special behaviors a provider may activate will typically
 serve as modifiers or tweaks to that base action, and will not allow
 the provider to select another base action altogether. The provider wire
 protocol does not talk about the action types explicitly, and instead only
-implies them via other content of the request and response, with Terraform Core
+implies them via other content of the request and response, with OpenTF Core
 making the final decision about how to react to that information.

 The following is a non-exhaustive list of existing examples of
@ -218,7 +218,7 @@ that might be useful inspiration for new designs:
 more paths to attributes which have changes that the provider cannot
 implement as an in-place update due to limitations of the remote system.

-In that case, Terraform Core will replace the **Update** action with one of
+In that case, OpenTF Core will replace the **Update** action with one of
 the two **Replace** variants, which means that from the provider's
 perspective the apply phase will really be two separate calls for the
 decomposed **Create** and **Delete** actions (in either order), rather
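The provider-driven refinement in this hunk can be modelled as a small decision function: a provider flags attributes that cannot change in place, and the **Update** becomes a **Replace**. The following hunk adds a second rule, which this function also includes: an update whose planned object matches the prior state becomes a **No-op**. This is a hedged Go sketch under simplifying assumptions. Objects are flattened into string maps, and `requiresReplace` is a hypothetical stand-in for the attribute paths a provider returns from `PlanResourceChange`. The real protocol uses structured values and paths.

```go
package main

import "fmt"

// Action is a hypothetical stand-in for a planned action.
type Action string

const (
	NoOp    Action = "No-op"
	Update  Action = "Update"
	Replace Action = "Replace"
)

// sameAttrs reports whether two flattened attribute maps are identical.
func sameAttrs(a, b map[string]string) bool {
	if len(a) != len(b) {
		return false
	}
	for k, v := range a {
		if bv, ok := b[k]; !ok || bv != v {
			return false
		}
	}
	return true
}

// resolveUpdate refines an Update that core has already selected. If any
// attribute the provider flagged in requiresReplace actually changes, the
// Update becomes a Replace (a missing attribute compares as ""). If the
// planned object matches the prior state, it becomes a No-op.
func resolveUpdate(prior, planned map[string]string, requiresReplace []string) Action {
	for _, attr := range requiresReplace {
		if prior[attr] != planned[attr] {
			return Replace
		}
	}
	if sameAttrs(prior, planned) {
		return NoOp
	}
	return Update
}

func main() {
	before := map[string]string{"name": "web", "zone": "a"}
	fmt.Println(resolveUpdate(before, map[string]string{"name": "web", "zone": "b"}, []string{"zone"})) // Replace
	fmt.Println(resolveUpdate(before, map[string]string{"name": "api", "zone": "a"}, []string{"zone"})) // Update
	fmt.Println(resolveUpdate(before, map[string]string{"name": "web", "zone": "a"}, nil))              // No-op
}
```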

@ -232,31 +232,31 @@ that might be useful inspiration for new designs:
 remote system.

 If all of those taken together causes the new object to match the prior
-state, Terraform Core will treat the update as a **No-op** instead.
+state, OpenTF Core will treat the update as a **No-op** instead.

 Of the three genres of special behaviors, provider-driven behaviors is the one
 we've made the least use of historically but one that seems to have a lot of
 opportunities for future exploration. Provider-driven behaviors can often be
-ideal because their effects appear as if they are built in to Terraform so
-that "it just works", with Terraform automatically deciding and explaining what
+ideal because their effects appear as if they are built in to OpenTF so
+that "it just works", with OpenTF automatically deciding and explaining what
 needs to happen and why, without any special effort on the user's part.

 ### Single-run Behaviors

-Terraform Core's "plan" operation takes a set of arguments that we collectively
-call "plan options", that can modify Terraform's planning behavior on a per-run
+OpenTF Core's "plan" operation takes a set of arguments that we collectively
+call "plan options", that can modify OpenTF's planning behavior on a per-run
 basis without any configuration changes or special provider behaviors.

 As noted above, this particular genre of designs is the most burdensome to
-implement because any wrapping software that can ask Terraform Core to create
+implement because any wrapping software that can ask OpenTF Core to create
 a plan must ideally offer some way to set all of the available planning options,
-or else some part of Terraform's functionality won't be available to anyone
+or else some part of OpenTF's functionality won't be available to anyone
 using that wrapper.

 However, we've seen various situations where single-run behaviors really are the
 most appropriate way to handle a particular use-case, because the need for the
 behavior originates in some process happening outside of the scope of any
-particular Terraform module or provider.
+particular OpenTF module or provider.

 The following is a non-exhaustive list of existing examples of
 single-run behaviors, selected to illustrate some different variations

@ -265,25 +265,25 @@ that might be useful inspiration for new designs:
 - The "replace" planning option specifies zero or more resource instance
 addresses.

-For any resource instance specified, Terraform Core will transform any
+For any resource instance specified, OpenTF Core will transform any
 **Update** or **No-op** action for that instance into one of the
 **Replace** actions, thereby allowing an operator to respond to something
-having become degraded in a way that Terraform and providers cannot
-automatically detect and force Terraform to replace that object with
+having become degraded in a way that OpenTF and providers cannot
+automatically detect and force OpenTF to replace that object with
 a new one that will hopefully function correctly.
 - The "refresh only" planning mode ("planning mode" is a single planning option
-that selects between a few mutually-exclusive behaviors) forces Terraform
+that selects between a few mutually-exclusive behaviors) forces OpenTF
 to treat every resource instance as **No-op**, regardless of what is bound
 to that address in state or present in the configuration.

 ## Legacy Operations

-Some of the legacy operations Terraform CLI offers that _aren't_ integrated
+Some of the legacy operations OpenTF CLI offers that _aren't_ integrated
 with the plan and apply flow could be thought of as various degenerate kinds
 of single-run behaviors. Most don't offer any opportunity to preview an effect
 before applying it, but do meet a similar set of use-cases where an operator
-needs to take some action to respond to changes to the context Terraform is
-in rather than to the Terraform configuration itself.
+needs to take some action to respond to changes to the context OpenTF is
+in rather than to the OpenTF configuration itself.

 Most of these legacy operations could therefore most readily be translated to
 single-run behaviors, but before doing so it's worth researching whether people

@ -1,7 +1,7 @@
# Terraform Plugin Protocol
# OpenTF Plugin Protocol

This directory contains documentation about the physical wire protocol that
Terraform Core uses to communicate with provider plugins.
OpenTF Core uses to communicate with provider plugins.

Most providers are not written directly against this protocol. Instead, prefer
to use an SDK that implements this protocol and write the provider against

@ -9,35 +9,35 @@ the SDK's API.

----

**If you want to write a plugin for Terraform, please refer to
[Extending Terraform](https://www.terraform.io/docs/extend/index.html) instead.**
**If you want to write a plugin for OpenTF, please refer to
[Extending OpenTF](https://www.terraform.io/docs/extend/index.html) instead.**

This documentation is for those who are developing _Terraform SDKs_, rather
This documentation is for those who are developing _OpenTF SDKs_, rather
than those implementing plugins.

----

From Terraform v0.12.0 onwards, Terraform's plugin protocol is built on
From OpenTF v0.12.0 onwards, OpenTF's plugin protocol is built on
[gRPC](https://grpc.io/). This directory contains `.proto` definitions of
different versions of Terraform's protocol.
different versions of OpenTF's protocol.

Only `.proto` files published as part of Terraform release tags are actually
Only `.proto` files published as part of OpenTF release tags are actually
official protocol versions. If you are reading this directory on the `main`
branch or any other development branch then it may contain protocol definitions
that are not yet finalized and that may change before final release.

## RPC Plugin Model

Terraform plugins are normal executable programs that, when launched, expose
gRPC services on a server accessed via the loopback interface. Terraform Core
OpenTF plugins are normal executable programs that, when launched, expose
gRPC services on a server accessed via the loopback interface. OpenTF Core
discovers and launches plugins, waits for a handshake to be printed on the
plugin's `stdout`, and then connects to the indicated port number as a
gRPC client.

For this reason, we commonly refer to Terraform Core itself as the plugin
For this reason, we commonly refer to OpenTF Core itself as the plugin
"client" and the plugin program itself as the plugin "server". Both of these
processes run locally, with the server process appearing as a child process
of the client. Terraform Core controls the lifecycle of these server processes
of the client. OpenTF Core controls the lifecycle of these server processes
and will terminate them when they are no longer required.

The startup and handshake protocol is not currently documented. We hope to

@ -51,7 +51,7 @@ more significant breaking changes from time to time while allowing old and
new plugins to be used together for some period.

The versioning strategy described below was introduced with protocol version
5.0 in Terraform v0.12. Prior versions of Terraform and prior protocol versions
5.0 in OpenTF v0.12. Prior versions of OpenTF and prior protocol versions
do not follow this strategy.

The authoritative definition for each protocol version is in this directory

@ -64,11 +64,11 @@ is the minor version.
The minor version increases for each change introducing optional new
functionality that can be ignored by implementations of prior versions. For
example, if a new field were added to an response message, it could be a minor
release as long as Terraform Core can provide some default behavior when that
release as long as OpenTF Core can provide some default behavior when that
field is not populated.

The major version increases for any significant change to the protocol where
compatibility is broken. However, Terraform Core and an SDK may both choose
compatibility is broken. However, OpenTF Core and an SDK may both choose
to support multiple major versions at once: the plugin handshake includes a
negotiation step where client and server can work together to select a
mutually-supported major version.
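
The major version negotiation described above can be pictured as picking the highest major version that both sides support. The Go sketch below assumes each side simply presents a list of supported major versions; `negotiateMajor` is a hypothetical helper, and the actual handshake, which this documentation says is not currently documented, may exchange that information differently.

```go
package main

import (
	"fmt"
	"sort"
)

// negotiateMajor returns the highest protocol major version supported by both
// the client (Core) and the server (plugin), or false if there is none.
func negotiateMajor(client, server []int) (int, bool) {
	supported := make(map[int]bool, len(server))
	for _, v := range server {
		supported[v] = true
	}
	// Sort a copy in descending order so the caller's slice is untouched.
	candidates := append([]int(nil), client...)
	sort.Sort(sort.Reverse(sort.IntSlice(candidates)))
	for _, v := range candidates {
		if supported[v] {
			return v, true
		}
	}
	return 0, false
}

func main() {
	if v, ok := negotiateMajor([]int{5, 6}, []int{4, 5}); ok {
		fmt.Println("selected major version", v)
	}
	if _, ok := negotiateMajor([]int{6}, []int{4, 5}); !ok {
		fmt.Println("no mutually-supported major version")
	}
}
```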

@ -84,9 +84,9 @@ features.

## Version compatibility for Core, SDK, and Providers

A particular version of Terraform Core has both a minimum minor version it
A particular version of OpenTF Core has both a minimum minor version it
requires and a maximum major version that it supports. A particular version of
Terraform Core may also be able to optionally use a newer minor version when
OpenTF Core may also be able to optionally use a newer minor version when
available, but fall back on older behavior when that functionality is not
available.

@ -95,16 +95,16 @@ The compatible versions for a provider are a list of major and minor version
pairs, such as "4.0", "5.2", which indicates that the provider supports the
baseline features of major version 4 and supports major version 5 including
the enhancements from both minor versions 1 and 2. This provider would
therefore be compatible with a Terraform Core release that supports only
therefore be compatible with a OpenTF Core release that supports only
protocol version 5.0, since major version 5 is supported and the optional
5.1 and 5.2 enhancements will be ignored.
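
One way to express the compatibility rule above in code: a Core release records, for each major version it supports, the minimum minor version it requires, and a provider's list of "major.minor" pairs is compatible if any entry meets that requirement. This Go sketch is an illustration only; `coreMinMinor`, `parseProtoVersion`, and `compatibleMajor` are hypothetical and do not reflect how OpenTF's own code is organized.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// protoVersion is a plugin protocol version written as "major.minor".
type protoVersion struct{ major, minor int }

// parseProtoVersion parses a "major.minor" string such as "5.2".
func parseProtoVersion(s string) (protoVersion, error) {
	majStr, minStr, found := strings.Cut(s, ".")
	if !found {
		return protoVersion{}, fmt.Errorf("invalid protocol version %q", s)
	}
	major, err := strconv.Atoi(majStr)
	if err != nil {
		return protoVersion{}, err
	}
	minor, err := strconv.Atoi(minStr)
	if err != nil {
		return protoVersion{}, err
	}
	return protoVersion{major, minor}, nil
}

// compatibleMajor returns the highest major version usable by both sides.
// coreMinMinor maps each major version this Core release supports to the
// minimum minor version it requires within that major version.
func compatibleMajor(coreMinMinor map[int]int, provider []string) (int, bool, error) {
	best, found := 0, false
	for _, s := range provider {
		v, err := parseProtoVersion(s)
		if err != nil {
			return 0, false, err
		}
		minMinor, supported := coreMinMinor[v.major]
		// Minor versions only add optional features, so any provider minor
		// version at or above Core's minimum is acceptable.
		if supported && v.minor >= minMinor && (!found || v.major > best) {
			best, found = v.major, true
		}
	}
	return best, found, nil
}

func main() {
	// A Core release that supports only protocol version 5.0, and a provider
	// that advertises "4.0" and "5.2".
	major, ok, err := compatibleMajor(map[int]int{5: 0}, []string{"4.0", "5.2"})
	fmt.Println(major, ok, err)
}
```

With a Core release that supports only 5.0, the provider advertising "4.0" and "5.2" resolves to major version 5, matching the example in the text.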

If Terraform Core and the plugin do not have at least one mutually-supported
major version, Terraform Core will return an error from `terraform init`
If OpenTF Core and the plugin do not have at least one mutually-supported
major version, OpenTF Core will return an error from `opentf init`
during plugin installation:

```
Provider "aws" v1.0.0 is not compatible with Terraform v0.12.0.
Provider "aws" v1.0.0 is not compatible with OpenTF v0.12.0.

Provider version v2.0.0 is the earliest compatible version.
Select it with the following version constraint:

@ -113,24 +113,24 @@ Select it with the following version constraint:
```

```
Provider "aws" v3.0.0 is not compatible with Terraform v0.12.0.
Provider "aws" v3.0.0 is not compatible with OpenTF v0.12.0.
Provider version v2.34.0 is the latest compatible version. Select
it with the following constraint:

version = "~> 2.34.0"

Alternatively, upgrade to the latest version of Terraform for compatibility with newer provider releases.
Alternatively, upgrade to the latest version of OpenTF for compatibility with newer provider releases.
```

The above messages are for plugins installed via `terraform init` from a
Terraform registry, where the registry API allows Terraform Core to recognize
The above messages are for plugins installed via `opentf init` from a
OpenTF registry, where the registry API allows OpenTF Core to recognize
the protocol compatibility for each provider release. For plugins that are
installed manually to a local plugin directory, Terraform Core has no way to
installed manually to a local plugin directory, OpenTF Core has no way to
suggest specific versions to upgrade or downgrade to, and so the error message
is more generic:

```
The installed version of provider "example" is not compatible with Terraform v0.12.0.
The installed version of provider "example" is not compatible with OpenTF v0.12.0.

This provider was loaded from:
/usr/local/bin/terraform-provider-example_v0.1.0

@ -154,14 +154,14 @@ of the plugin in ways that affect its semver-based version numbering:
For this reason, SDK developers must be clear in their release notes about
the addition and removal of support for major versions.

Terraform Core also makes an assumption about major version support when
OpenTF Core also makes an assumption about major version support when
it produces actionable error messages for users about incompatibilities:
a particular protocol major version is supported for a single consecutive
range of provider releases, with no "gaps".

## Using the protobuf specifications in an SDK

If you wish to build an SDK for Terraform plugins, an early step will be to
If you wish to build an SDK for OpenTF plugins, an early step will be to
copy one or more `.proto` files from this directory into your own repository
(depending on which protocol versions you intend to support) and use the
`protoc` protocol buffers compiler (with gRPC extensions) to generate suitable

@ -178,7 +178,7 @@ You can find out more about the tool usage for each target language in
[the gRPC Quick Start guides](https://grpc.io/docs/quickstart/).

The protobuf specification for a version is immutable after it has been
included in at least one Terraform release. Any changes will be documented in
included in at least one OpenTF release. Any changes will be documented in
a new `.proto` file establishing a new protocol version.

The protocol buffer compiler will produce some sort of library object appropriate

@ -200,7 +200,7 @@ and copy the relevant `.proto` file into it, creating a separate set of stubs
that can in principle allow your SDK to support both major versions at the
same time. We recommend supporting both the previous and current major versions
together for a while across a major version upgrade so that users can avoid
having to upgrade both Terraform Core and all of their providers at the same
having to upgrade both OpenTF Core and all of their providers at the same
time, but you can delete the previous major version stubs once you remove
support for that version.

@ -1,33 +1,33 @@
# Wire Format for Terraform Objects and Associated Values
# Wire Format for OpenTF Objects and Associated Values

The provider wire protocol (as of major version 5) includes a protobuf message
type `DynamicValue` which Terraform uses to represent values from the Terraform
type `DynamicValue` which OpenTF uses to represent values from the OpenTF
Language type system, which result from evaluating the content of `resource`,
`data`, and `provider` blocks, based on a schema defined by the corresponding
provider.

Because the structure of these values is determined at runtime, `DynamicValue`
uses one of two possible dynamic serialization formats for the values
themselves: MessagePack or JSON. Terraform most commonly uses MessagePack,
themselves: MessagePack or JSON. OpenTF most commonly uses MessagePack,
because it offers a compact binary representation of a value. However, a server
implementation of the provider protocol should fall back to JSON if the
MessagePack field is not populated, in order to support both formats.

The remainder of this document describes how Terraform translates from its own
The remainder of this document describes how OpenTF translates from its own
type system into the type system of the two supported serialization formats.
A server implementation of the Terraform provider protocol can use this
A server implementation of the OpenTF provider protocol can use this
information to decode `DynamicValue` values from incoming messages into
whatever representation is convenient for the provider implementation.

A server implementation must also be able to _produce_ `DynamicValue` messages
as part of various response messages. When doing so, servers should always
use MessagePack encoding, because Terraform does not consistently support
JSON responses across all request types and all Terraform versions.
use MessagePack encoding, because OpenTF does not consistently support
JSON responses across all request types and all OpenTF versions.

Both the MessagePack and JSON serializations are driven by information the
provider previously returned in a `Schema` message. Terraform will encode each
provider previously returned in a `Schema` message. OpenTF will encode each
value depending on the type constraint given for it in the corresponding schema,
using the closest possible MessagePack or JSON type to the Terraform language
using the closest possible MessagePack or JSON type to the OpenTF language
type. Therefore a server implementation can decode a serialized value using a
standard MessagePack or JSON library and assume it will conform to the
serialization rules described below.

@ -38,8 +38,8 @@ The MessagePack types referenced in this section are those defined in
[The MessagePack type system specification](https://github.com/msgpack/msgpack/blob/master/spec.md#type-system).

Note that MessagePack defines several possible serialization formats for each
type, and Terraform may choose any of the formats of a specified type.
The exact serialization chosen for a given value may vary between Terraform
type, and OpenTF may choose any of the formats of a specified type.
The exact serialization chosen for a given value may vary between OpenTF
versions, but the types given here are contractual.

Conversely, server implementations that are _producing_ MessagePack-encoded

@ -49,7 +49,7 @@ the value without a loss of range.

### `Schema.Block` Mapping Rules for MessagePack

To represent the content of a block as MessagePack, Terraform constructs a
To represent the content of a block as MessagePack, OpenTF constructs a
MessagePack map that contains one key-value pair per attribute and one
key-value pair per distinct nested block described in the `Schema.Block` message.

@ -63,7 +63,7 @@ The key-value pairs representing nested block types have values based on
The MessagePack serialization of an attribute value depends on the value of the
`type` field of the corresponding `Schema.Attribute` message. The `type` field is
a compact JSON serialization of a
[Terraform type constraint](https://www.terraform.io/docs/configuration/types.html),
[OpenTF type constraint](https://www.terraform.io/docs/configuration/types.html),
which consists either of a single
string value (for primitive types) or a two-element array giving a type kind
and a type argument.

@ -84,7 +84,7 @@ in the table below, regardless of type:
| `"number"` | Either MessagePack integer, MessagePack float, or MessagePack string representing the number. If a number is represented as a string then the string contains a decimal representation of the number which may have a larger mantissa than can be represented by a 64-bit float. |
| `"bool"` | A MessagePack boolean value corresponding to the value. |
| `["list",T]` | A MessagePack array with the same number of elements as the list value, each of which is represented by the result of applying these same mapping rules to the nested type `T`. |
| `["set",T]` | Identical in representation to `["list",T]`, but the order of elements is undefined because Terraform sets are unordered. |
| `["set",T]` | Identical in representation to `["list",T]`, but the order of elements is undefined because OpenTF sets are unordered. |
| `["map",T]` | A MessagePack map with one key-value pair per element of the map value, where the element key is serialized as the map key (always a MessagePack string) and the element value is represented by a value constructed by applying these same mapping rules to the nested type `T`. |
| `["object",ATTRS]` | A MessagePack map with one key-value pair per attribute defined in the `ATTRS` object. The attribute name is serialized as the map key (always a MessagePack string) and the attribute value is represented by a value constructed by applying these same mapping rules to each attribute's own type. |
| `["tuple",TYPES]` | A MessagePack array with one element per element described by the `TYPES` array. The element values are constructed by applying these same mapping rules to the corresponding element of `TYPES`. |

@ -97,7 +97,7 @@ values.
The older encoding is for unrefined unknown values and uses an extension
code of zero, with the extension value payload completely ignored.

Newer Terraform versions can produce "refined" unknown values which carry some
Newer OpenTF versions can produce "refined" unknown values which carry some
additional information that constrains the possible range of the final value/
Refined unknown values have extension code 12 and then the extension object's
payload is a MessagePack-encoded map using integer keys to represent different

@ -161,7 +161,7 @@ by applying
to the block's contents based on the `block` field, producing what we'll call
a _block value_ in the table below.

The `nesting` value then in turn defines how Terraform will collect all of the
The `nesting` value then in turn defines how OpenTF will collect all of the
individual block values together to produce a single property value representing
the nested block type. For all `nesting` values other than `MAP`, blocks may
not have any labels. For the `nesting` value `MAP`, blocks must have exactly

@ -173,13 +173,13 @@ one label, which is a string we'll call a _block label_ in the table below.
| `LIST` | A MessagePack array of all of the block values, preserving the order of definition of the blocks in the configuration. |
| `SET` | A MessagePack array of all of the block values in no particular order. |
| `MAP` | A MessagePack map with one key-value pair per block value, where the key is the block label and the value is the block value. |
| `GROUP` | The same as with `SINGLE`, except that if there is no block of that type Terraform will synthesize a block value by pretending that all of the declared attributes are null and that there are zero blocks of each declared block type. |
| `GROUP` | The same as with `SINGLE`, except that if there is no block of that type OpenTF will synthesize a block value by pretending that all of the declared attributes are null and that there are zero blocks of each declared block type. |

For the `LIST` and `SET` nesting modes, Terraform guarantees that the
For the `LIST` and `SET` nesting modes, OpenTF guarantees that the
MessagePack array will have a number of elements between the `min_items` and
`max_items` values given in the schema, _unless_ any of the block values contain
nested unknown values. When unknown values are present, Terraform considers
the value to be potentially incomplete and so Terraform defers validation of
nested unknown values. When unknown values are present, OpenTF considers
the value to be potentially incomplete and so OpenTF defers validation of
the number of blocks. For example, if the configuration includes a `dynamic`
block whose `for_each` argument is unknown then the final number of blocks is
not predictable until the apply phase.
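
The unknown values mentioned above are encoded in MessagePack as extension values; an earlier hunk of this diff notes that extension code 0 marks an unrefined unknown and code 12 a refined one. The Go sketch below reads the extension type code from the ext and fixext formats defined in the MessagePack specification. `extHeader` and `unknownKind` are hypothetical helpers, and the sketch does not interpret the refinement map carried in a code 12 payload.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// extHeader decodes a MessagePack extension value, returning its extension
// type code and payload bytes.
func extHeader(b []byte) (code int8, payload []byte, err error) {
	if len(b) < 2 {
		return 0, nil, errors.New("too short for a MessagePack extension")
	}
	// offset is the index of the type byte; size is the payload length.
	var size, offset int
	switch b[0] {
	case 0xd4, 0xd5, 0xd6, 0xd7, 0xd8: // fixext 1, 2, 4, 8, 16
		size = 1 << int(b[0]-0xd4)
		offset = 1
	case 0xc7: // ext 8: one length byte
		size, offset = int(b[1]), 2
	case 0xc8: // ext 16: two big-endian length bytes
		if len(b) < 3 {
			return 0, nil, errors.New("truncated ext 16 header")
		}
		size, offset = int(binary.BigEndian.Uint16(b[1:3])), 3
	case 0xc9: // ext 32: four big-endian length bytes
		if len(b) < 5 {
			return 0, nil, errors.New("truncated ext 32 header")
		}
		size, offset = int(binary.BigEndian.Uint32(b[1:5])), 5
	default:
		return 0, nil, fmt.Errorf("byte 0x%02x does not start an extension value", b[0])
	}
	if len(b) < offset+1+size {
		return 0, nil, errors.New("truncated extension payload")
	}
	return int8(b[offset]), b[offset+1 : offset+1+size], nil
}

// unknownKind classifies an extension value using the codes described in
// this document: 0 for unrefined unknowns and 12 for refined unknowns.
func unknownKind(b []byte) (string, error) {
	code, _, err := extHeader(b)
	if err != nil {
		return "", err
	}
	switch code {
	case 0:
		return "unknown", nil
	case 12:
		return "refined unknown", nil
	default:
		return "", fmt.Errorf("unexpected extension code %d", code)
	}
}

func main() {
	// fixext 1 with type code 0 and a single payload byte that is ignored.
	kind, err := unknownKind([]byte{0xd4, 0x00, 0x00})
	fmt.Println(kind, err)
}
```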

@ -198,7 +198,7 @@ _current_ version of that provider.

### `Schema.Block` Mapping Rules for JSON

To represent the content of a block as JSON, Terraform constructs a
To represent the content of a block as JSON, OpenTF constructs a
JSON object that contains one property per attribute and one property per
distinct nested block described in the `Schema.Block` message.

@ -212,7 +212,7 @@ The properties representing nested block types have property values based on
The JSON serialization of an attribute value depends on the value of the `type`
field of the corresponding `Schema.Attribute` message. The `type` field is
a compact JSON serialization of a
[Terraform type constraint](https://www.terraform.io/docs/configuration/types.html),
[OpenTF type constraint](https://www.terraform.io/docs/configuration/types.html),
which consists either of a single
string value (for primitive types) or a two-element array giving a type kind
and a type argument.

@ -226,10 +226,10 @@ table regardless of type:
| `type` Pattern | JSON Representation |
|---|---|
| `"string"` | A JSON string containing the Unicode characters from the string value. |
| `"number"` | A JSON number representing the number value. Terraform numbers are arbitrary-precision floating point, so the value may have a larger mantissa than can be represented by a 64-bit float. |
| `"number"` | A JSON number representing the number value. OpenTF numbers are arbitrary-precision floating point, so the value may have a larger mantissa than can be represented by a 64-bit float. |
| `"bool"` | Either JSON `true` or JSON `false`, depending on the boolean value. |
| `["list",T]` | A JSON array with the same number of elements as the list value, each of which is represented by the result of applying these same mapping rules to the nested type `T`. |
| `["set",T]` | Identical in representation to `["list",T]`, but the order of elements is undefined because Terraform sets are unordered. |
| `["set",T]` | Identical in representation to `["list",T]`, but the order of elements is undefined because OpenTF sets are unordered. |
| `["map",T]` | A JSON object with one property per element of the map value, where the element key is serialized as the property name string and the element value is represented by a property value constructed by applying these same mapping rules to the nested type `T`. |
| `["object",ATTRS]` | A JSON object with one property per attribute defined in the `ATTRS` object. The attribute name is serialized as the property name string and the attribute value is represented by a property value constructed by applying these same mapping rules to each attribute's own type. |
| `["tuple",TYPES]` | A JSON array with one element per element described by the `TYPES` array. The element values are constructed by applying these same mapping rules to the corresponding element of `TYPES`. |

@ -248,7 +248,7 @@ by applying
to the block's contents based on the `block` field, producing what we'll call
a _block value_ in the table below.

The `nesting` value then in turn defines how Terraform will collect all of the
The `nesting` value then in turn defines how OpenTF will collect all of the
individual block values together to produce a single property value representing
the nested block type. For all `nesting` values other than `MAP`, blocks may
not have any labels. For the `nesting` value `MAP`, blocks must have exactly

@ -260,8 +260,8 @@ one label, which is a string we'll call a _block label_ in the table below.
| `LIST` | A JSON array of all of the block values, preserving the order of definition of the blocks in the configuration. |
| `SET` | A JSON array of all of the block values in no particular order. |
| `MAP` | A JSON object with one property per block value, where the property name is the block label and the value is the block value. |
| `GROUP` | The same as with `SINGLE`, except that if there is no block of that type Terraform will synthesize a block value by pretending that all of the declared attributes are null and that there are zero blocks of each declared block type. |
| `GROUP` | The same as with `SINGLE`, except that if there is no block of that type OpenTF will synthesize a block value by pretending that all of the declared attributes are null and that there are zero blocks of each declared block type. |

For the `LIST` and `SET` nesting modes, Terraform guarantees that the JSON
For the `LIST` and `SET` nesting modes, OpenTF guarantees that the JSON
array will have a number of elements between the `min_items` and `max_items`
values given in the schema.
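
The `LIST`, `SET`, and `MAP` rows above translate fairly directly into code. This Go sketch assumes each block has already been turned into its block value (a JSON object) and only shows how those values are combined into one property value. The `block` type and `collectBlocks` are hypothetical, `SINGLE` and `GROUP` are left out, and treating a `max_items` of 0 as "no limit" is an assumption of the sketch; since Core already guarantees the item counts for JSON, the check here is purely defensive.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// block is one configuration block of a nested block type: its labels plus
// its already-constructed block value (a JSON object).
type block struct {
	Labels []string
	Value  map[string]any
}

// collectBlocks combines the block values of one nested block type into a
// single JSON property value, following the LIST, SET, and MAP rows of the
// nesting table. For LIST and SET it also checks min_items/max_items,
// treating a maxItems of 0 as "no upper limit".
func collectBlocks(nesting string, blocks []block, minItems, maxItems int) (json.RawMessage, error) {
	switch nesting {
	case "LIST", "SET":
		if len(blocks) < minItems || (maxItems > 0 && len(blocks) > maxItems) {
			return nil, fmt.Errorf("%s nesting: got %d blocks, want between %d and %d", nesting, len(blocks), minItems, maxItems)
		}
		values := make([]map[string]any, 0, len(blocks))
		for _, b := range blocks {
			if len(b.Labels) != 0 {
				return nil, fmt.Errorf("%s blocks may not have labels", nesting)
			}
			// Configuration order is kept; for SET the order carries no meaning.
			values = append(values, b.Value)
		}
		return json.Marshal(values)
	case "MAP":
		values := make(map[string]map[string]any, len(blocks))
		for _, b := range blocks {
			if len(b.Labels) != 1 {
				return nil, fmt.Errorf("MAP blocks must have exactly one label")
			}
			values[b.Labels[0]] = b.Value
		}
		return json.Marshal(values)
	default:
		return nil, fmt.Errorf("nesting mode %q is not covered by this sketch", nesting)
	}
}

func main() {
	rules := []block{
		{Value: map[string]any{"port": 80}},
		{Value: map[string]any{"port": 443}},
	}
	out, err := collectBlocks("LIST", rules, 1, 0)
	fmt.Println(string(out), err)
}
```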

@ -1,14 +1,14 @@
# Releasing a New Version of the Protocol

Terraform's plugin protocol is the contract between Terraform's plugins and
Terraform, and as such releasing a new version requires some coordination
OpenTF's plugin protocol is the contract between OpenTF's plugins and
OpenTF, and as such releasing a new version requires some coordination
between those pieces. This document is intended to be a checklist to consult
when adding a new major version of the protocol (X in X.Y) to ensure that
everything that needs to be is aware of it.

## New Protobuf File

The protocol is defined in protobuf files that live in the hashicorp/terraform
The protocol is defined in protobuf files that live in the opentffoundation/opentf
repository. Adding a new version of the protocol involves creating a new
`.proto` file in that directory. It is recommended that you copy the latest
protocol file, and modify it accordingly.

@ -17,7 +17,7 @@ protocol file, and modify it accordingly.

The
[hashicorp/terraform-plugin-go](https://github.com/hashicorp/terraform-plugin-go)
repository serves as the foundation for Terraform's plugin ecosystem. It needs
repository serves as the foundation for OpenTF's plugin ecosystem. It needs
to know about the new major protocol version. Either open an issue in that repo
to have the Plugin SDK team add the new package, or if you would like to
contribute it yourself, open a PR. It is recommended that you copy the package

@ -25,16 +25,16 @@ for the latest protocol version and modify it accordingly.

## Update the Registry's List of Allowed Versions

The Terraform Registry validates the protocol versions a provider advertises
The OpenTF Registry validates the protocol versions a provider advertises
support for when ingesting providers. Providers will not be able to advertise
support for the new protocol version until it is added to that list.

## Update Terraform's Version Constraints
## Update OpenTF's Version Constraints

Terraform only downloads providers that speak protocol versions it is
compatible with from the Registry during `terraform init`. When adding support
for a new protocol, you need to tell Terraform it knows that protocol version.
Modify the `SupportedPluginProtocols` variable in hashicorp/terraform's
OpenTF only downloads providers that speak protocol versions it is
compatible with from the Registry during `opentf init`. When adding support
for a new protocol, you need to tell OpenTF it knows that protocol version.
Modify the `SupportedPluginProtocols` variable in opentffoundation/opentf's
`internal/getproviders/registry_client.go` file to include the new protocol.

## Test Running a Provider With the Test Framework

@ -42,12 +42,12 @@ Modify the `SupportedPluginProtocols` variable in hashicorp/terraform's
Use the provider test framework to test a provider written with the new
protocol. This end-to-end test ensures that providers written with the new
protocol work correctly with the test framework, especially in communicating
the protocol version between the test framework and Terraform.
the protocol version between the test framework and OpenTF.

## Test Retrieving and Running a Provider From the Registry

Publish a provider, either to the public registry or to the staging registry,
and test running `terraform init` and `terraform apply`, along with exercising
and test running `opentf init` and `opentf apply`, along with exercising
any of the new functionality the protocol version introduces. This end-to-end
test ensures that all the pieces needing to be updated before practitioners can
use providers built with the new protocol have been updated.

@ -1,7 +1,7 @@

|

||||

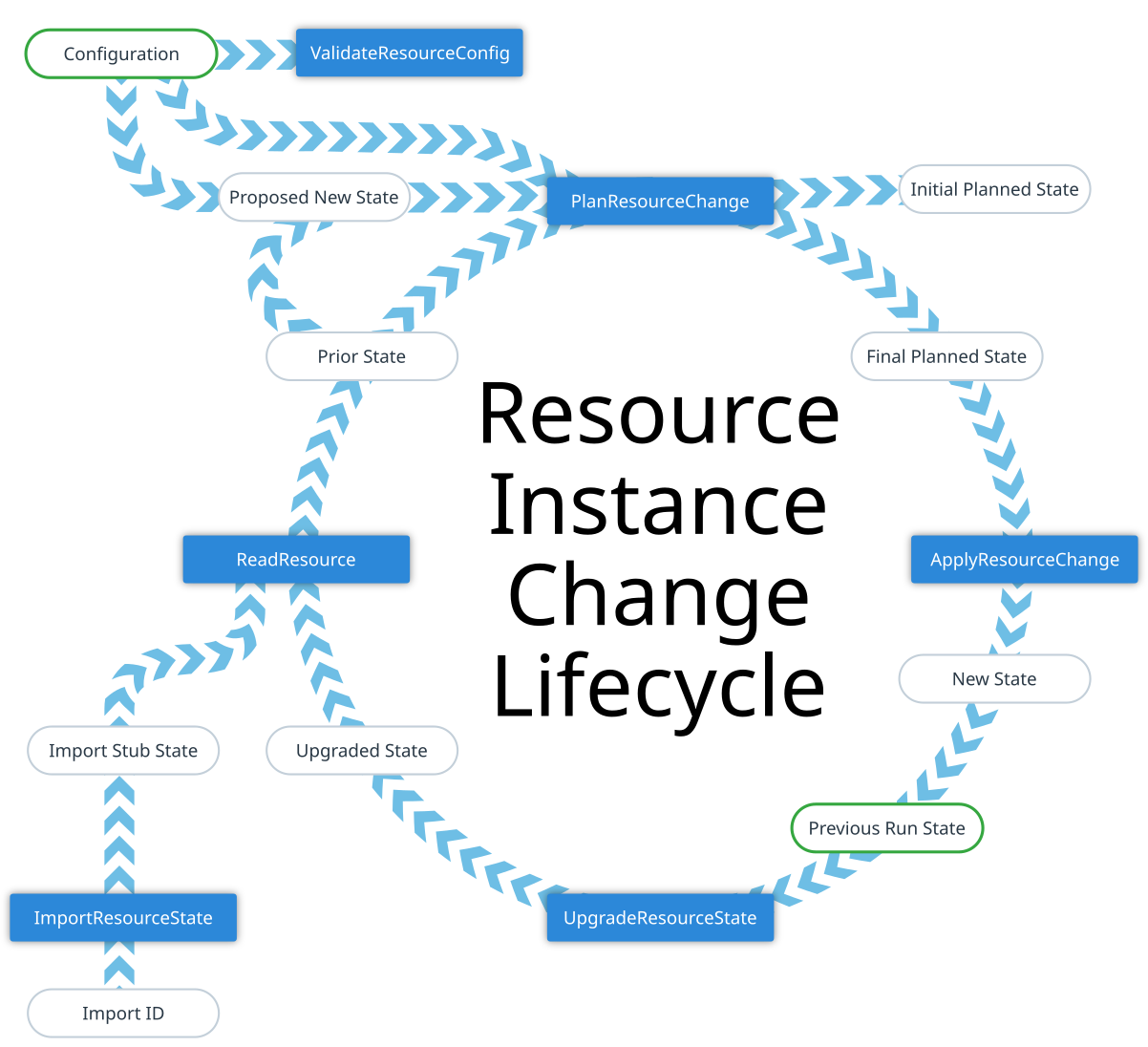

# Terraform Resource Instance Change Lifecycle

|

||||

# OpenTF Resource Instance Change Lifecycle

|

||||

|

||||

This document describes the relationships between the different operations

|

||||

called on a Terraform Provider to handle a change to a resource instance.

|

||||

called on a OpenTF Provider to handle a change to a resource instance.

|

||||

|

||||

|

||||

|

||||

@ -28,18 +28,18 @@ The various object values used in different parts of this process are:
* **Prior State**: The provider's representation of the current state of the
remote object at the time of the most recent read.

* **Proposed New State**: Terraform Core uses some built-in logic to perform
* **Proposed New State**: OpenTF Core uses some built-in logic to perform
an initial basic merger of the **Configuration** and the **Prior State**
which a provider may use as a starting point for its planning operation.

The built-in logic primarily deals with the expected behavior for attributes
marked in the schema as "computed". If an attribute is only "computed",
Terraform expects the value to only be chosen by the provider and it will
OpenTF expects the value to only be chosen by the provider and it will
preserve any Prior State. If an attribute is marked as "computed" and
"optional", this means that the user may either set it or may leave it
unset to allow the provider to choose a value.

Terraform Core therefore constructs the proposed new state by taking the
OpenTF Core therefore constructs the proposed new state by taking the
attribute value from Configuration if it is non-null, and then using the
Prior State as a fallback otherwise, thereby helping a provider to
preserve its previously-chosen value for the attribute where appropriate.