Compare commits

123 Commits

hub-xva-de

...

correct-ne

| Author | SHA1 | Date | |
|---|---|---|---|
| | 301f2f5d72 | | |
| | a3be8dc6fa | | |
| | 06f596adc6 | | |
| | 1f3b54e0c4 | | |
| | 2ddfbe8566 | | |
| | c61a118e4f | | |
| | d69e61a634 | | |
| | 14f0cbaec6 | | |
| | b313eb14ee | | |
| | 7b47e40244 | | |
| | b52204817d | | |
| | 377552103e | | |
| | 688b65ccde | | |
| | 6cb4faf33d | | |
| | 78b83bb901 | | |
| | 9ff6f60b66 | | |
| | 624e10ed15 | | |
| | 19e10bbb53 | | |
| | cca945e05b | | |
| | 21901f2a75 | | |
| | ef7f943eee | | |
| | ec1062f9f2 | | |
| | 2f67ed3138 | | |
| | ce912db30e | | |
| | 41d790f346 | | |
| | bf426e15ec | | |
| | e4403baeb9 | | |
| | 61101b00a1 | | |
| | 69f8ffcfeb | | |
| | 6b8042291c | | |
| | ffc0c83b50 | | |
| | 8ccd4c269a | | |
| | 934ec86f93 | | |
| | 23be38b5fa | | |
| | fe7f74e46b | | |
| | a3fd78f8e2 | | |
| | 137bad6f7b | | |
| | 17df6fc764 | | |
| | 2e51c8a124 | | |
| | 5588a46366 | | |
| | a8122f9add | | |
| | 5568be91d2 | | |
| | a04bd6f93c | | |
| | 56d63b10e4 | | |
| | 2c97643b10 | | |
| | 679f403648 | | |
| | d482c707f6 | | |
| | 2ec9641783 | | |
| | dab1788a3b | | |
| | 47bb79cce1 | | |
| | 41dbc20be9 | | |
| | 10a631ec96 | | |
| | 830e5aed96 | | |
| | 7db573885b | | |
| | a74d56ebc6 | | |
| | ff7d84297e | | |
| | 3a76509fe9 | | |
| | ac4de9ab0f | | |
| | 471f397418 | | |
| | 73bbdf6d4e | | |
| | 7f26aea585 | | |
| | 1c767b709f | | |
| | 0ced82c885 | | |
| | 21dd195b0d | | |
| | 6aa6cfba8e | | |
| | fd7d52d38b | | |
| | a47bb14364 | | |
| | d6e6fa5735 | | |
| | 46da11a52e | | |
| | 68e3dc21e4 | | |
| | 7232cc45b4 | | |
| | be5a297248 | | |
| | 257031b1bc | | |
| | c9db9fa17a | | |
| | 13f961a422 | | |
| | 3b38e0c4e1 | | |
| | 07526efe61 | | |
| | 8753c02adb | | |
| | 6a0bbfa447 | | |
| | 21faaeb33d | | |
| | 0525fc5909 | | |
| | a1a53bb285 | | |
| | 0c453c4415 | | |
| | d0406f9736 | | |
| | ba74b8603d | | |
| | c675a4d61d | | |
| | 965c45bc70 | | |
| | 139a22602a | | |
| | e0e4969198 | | |
| | 08d69d95b3 | | |
| | 4e6c507ba9 | | |
| | fd06374365 | | |
| | a07ebc636a | | |
| | 4c151ac9aa | | |
| | 05c425698f | | |
| | 2a961979e6 | | |
| | 211ede92cc | | |
| | 256af03772 | | |
| | 654fd5a4f9 | | |
| | 541d90e49f | | |
| | 974e7038e7 | | |
| | e2f5b30aa9 | | |
| | 3483e7d9e0 | | |
| | 56cb20a1af | | |
| | 64929653dd | | |
| | c955da9bc6 | | |
| | 291354fa8e | | |
| | 905d736512 | | |
| | 3406d6e2a9 | | |
| | fc10b5ffb9 | | |
| | f89c313166 | | |
| | 7c734168d0 | | |
| | 1e7bfec2ce | | |
| | 1eb0603b4e | | |
| | 4b32730ce8 | | |
| | ad083c1d9b | | |
| | b4f84c2de2 | | |
| | fc17443ce4 | | |
| | 342ae06b21 | | |
| | 093fb7f959 | | |
| | f6472424ad | | |
| | 31ed3767c6 | | |
| | 366acb65ea | | |

@@ -21,7 +21,7 @@ module.exports = {

|

||||

|

||||

overrides: [

|

||||

{

|

||||

files: ['cli.js', '*-cli.js', 'packages/*cli*/**/*.js'],

|

||||

files: ['cli.js', '*-cli.js', '**/*cli*/**/*.js'],

|

||||

rules: {

|

||||

'no-console': 'off',

|

||||

},

|

||||

@@ -40,6 +40,13 @@ module.exports = {

|

||||

|

||||

'react/jsx-handler-names': 'off',

|

||||

|

||||

// disabled because not always relevant, we might reconsider in the future

|

||||

//

|

||||

// enabled by https://github.com/standard/eslint-config-standard/commit/319b177750899d4525eb1210686f6aca96190b2f

|

||||

//

|

||||

// example: https://github.com/vatesfr/xen-orchestra/blob/31ed3767c67044ca445658eb6b560718972402f2/packages/xen-api/src/index.js#L156-L157

|

||||

'lines-between-class-members': 'off',

|

||||

|

||||

'no-console': ['error', { allow: ['warn', 'error'] }],

|

||||

'no-var': 'error',

|

||||

'node/no-extraneous-import': 'error',

|

||||

|

||||

@@ -36,7 +36,7 @@

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"@babel/preset-flow": "^7.0.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

"scripts": {

|

||||

|

||||

@@ -8,5 +8,8 @@

|

||||

"directory": "@xen-orchestra/babel-config",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=6"

|

||||

}

|

||||

}

|

||||

|

||||

32

@xen-orchestra/backups-cli/_composeCommands.js

Normal file

@@ -0,0 +1,32 @@

|

||||

const getopts = require('getopts')

|

||||

|

||||

const { version } = require('./package.json')

|

||||

|

||||

module.exports = commands =>

|

||||

async function(args, prefix) {

|

||||

const opts = getopts(args, {

|

||||

alias: {

|

||||

help: 'h',

|

||||

},

|

||||

boolean: ['help'],

|

||||

stopEarly: true,

|

||||

})

|

||||

|

||||

const commandName = opts.help || args.length === 0 ? 'help' : args[0]

|

||||

const command = commands[commandName]

|

||||

if (command === undefined) {

|

||||

process.stdout.write(`Usage:

|

||||

|

||||

${Object.keys(commands)

|

||||

.filter(command => command !== 'help')

|

||||

.map(command => ` ${prefix} ${command} ${commands[command].usage || ''}`)

|

||||

.join('\n\n')}

|

||||

|

||||

xo-backups v${version}

|

||||

`)

|

||||

process.exitCode = commandName === 'help' ? 0 : 1

|

||||

return

|

||||

}

|

||||

|

||||

return command.main(args.slice(1), prefix + ' ' + commandName)

|

||||

}

|

||||
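The dispatch logic in `_composeCommands.js` can be hard to follow from the diff alone, so here is a reduced sketch of the same idea. It is not the file's code: option parsing via `getopts` and the `package.json` version lookup are left out, the function returns plain objects instead of writing to `process.stdout`, and the `greet` command is hypothetical.

```js
// Simplified stand-in for _composeCommands.js: no getopts, no package.json.
// `greet` is a hypothetical command used only for illustration.
const composeCommands = commands => (args, prefix) => {
  const wantsHelp = args.length === 0 || args[0] === '--help' || args[0] === '-h'
  const commandName = wantsHelp ? 'help' : args[0]
  const command = commands[commandName]
  if (command === undefined) {
    const usage = Object.keys(commands)
      .map(name => `${prefix} ${name} ${commands[name].usage || ''}`)
      .join('\n')
    // exit code 0 for an explicit help request, 1 for an unknown command
    return { exitCode: commandName === 'help' ? 0 : 1, usage }
  }
  // forward the remaining arguments and the extended prefix to the sub-command
  return command.main(args.slice(1), `${prefix} ${commandName}`)
}

const cli = composeCommands({
  greet: {
    main: (args, prefix) => ({ exitCode: 0, output: `${prefix}: hello ${args.join(' ')}` }),
    usage: '<name>',
  },
})
```

Passing the extended prefix down is what would let a sub-command compose its own sub-commands and still print a correct usage line.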

393

@xen-orchestra/backups-cli/commands/clean-vms.js

Normal file

@@ -0,0 +1,393 @@

|

||||

#!/usr/bin/env node

|

||||

|

||||

// assigned when options are parsed by the main function

|

||||

let force

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

const assert = require('assert')

|

||||

const getopts = require('getopts')

|

||||

const lockfile = require('proper-lockfile')

|

||||

const { default: Vhd } = require('vhd-lib')

|

||||

const { curryRight, flatten } = require('lodash')

|

||||

const { dirname, resolve } = require('path')

|

||||

const { DISK_TYPE_DIFFERENCING } = require('vhd-lib/dist/_constants')

|

||||

const { pipe, promisifyAll } = require('promise-toolbox')

|

||||

|

||||

const fs = promisifyAll(require('fs'))

|

||||

const handler = require('@xen-orchestra/fs').getHandler({ url: 'file://' })

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

const asyncMap = curryRight((iterable, fn) =>

|

||||

Promise.all(

|

||||

Array.isArray(iterable) ? iterable.map(fn) : Array.from(iterable, fn)

|

||||

)

|

||||

)

|

||||

|

||||

const filter = (...args) => thisArg => thisArg.filter(...args)

|

||||

|

||||

const isGzipFile = async fd => {

|

||||

// https://tools.ietf.org/html/rfc1952.html#page-5

|

||||

const magicNumber = Buffer.allocUnsafe(2)

|

||||

assert.strictEqual(

|

||||

await fs.read(fd, magicNumber, 0, magicNumber.length, 0),

|

||||

magicNumber.length

|

||||

)

|

||||

return magicNumber[0] === 31 && magicNumber[1] === 139

|

||||

}

|

||||

|

||||

// TODO: better check?

|

||||

//

|

||||

// our heuristic is not good enough, there have been some false positives

|

||||

// (detected as invalid by us but valid by `tar` and imported with success),

|

||||

// either THOUGH THEY MAY HAVE BEEN COMPRESSED FILES:

|

||||

// - these files were normal but the check is incorrect

|

||||

// - these files were invalid but without data loss

|

||||

// - these files were invalid but with silent data loss

|

||||

//

|

||||

// maybe reading the end of the file looking for a file named

|

||||

// /^Ref:\d+/\d+\.checksum$/ and then validating the tar structure from it

|

||||

//

|

||||

// https://github.com/npm/node-tar/issues/234#issuecomment-538190295

|

||||

const isValidTar = async (size, fd) => {

|

||||

if (size <= 1024 || size % 512 !== 0) {

|

||||

return false

|

||||

}

|

||||

|

||||

const buf = Buffer.allocUnsafe(1024)

|

||||

assert.strictEqual(

|

||||

await fs.read(fd, buf, 0, buf.length, size - buf.length),

|

||||

buf.length

|

||||

)

|

||||

return buf.every(_ => _ === 0)

|

||||

}

|

||||

|

||||

// TODO: find a heuristic for compressed files

|

||||

const isValidXva = async path => {

|

||||

try {

|

||||

const fd = await fs.open(path, 'r')

|

||||

try {

|

||||

const { size } = await fs.fstat(fd)

|

||||

if (size < 20) {

|

||||

// neither a valid gzip nor tar

|

||||

return false

|

||||

}

|

||||

|

||||

return (await isGzipFile(fd))

|

||||

? true // gzip files cannot be validated at this time

|

||||

: await isValidTar(size, fd)

|

||||

} finally {

|

||||

fs.close(fd).catch(noop)

|

||||

}

|

||||

} catch (error) {

|

||||

// never throw, log and report as valid to avoid side effects

|

||||

console.error('isValidXva', path, error)

|

||||

return true

|

||||

}

|

||||

}

|

||||

|

||||

const noop = Function.prototype

|

||||

|

||||

const readDir = path =>

|

||||

fs.readdir(path).then(

|

||||

entries => {

|

||||

entries.forEach((entry, i) => {

|

||||

entries[i] = `${path}/${entry}`

|

||||

})

|

||||

|

||||

return entries

|

||||

},

|

||||

error => {

|

||||

// a missing dir is by definition empty

|

||||

if (error != null && error.code === 'ENOENT') {

|

||||

return []

|

||||

}

|

||||

throw error

|

||||

}

|

||||

)

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// chain is an array of VHDs from child to parent

|

||||

//

|

||||

// the whole chain will be merged into parent, parent will be renamed to child

|

||||

// and all the others will be deleted

|

||||

async function mergeVhdChain(chain) {

|

||||

assert(chain.length >= 2)

|

||||

|

||||

const child = chain[0]

|

||||

const parent = chain[chain.length - 1]

|

||||

const children = chain.slice(0, -1).reverse()

|

||||

|

||||

console.warn('Unused parents of VHD', child)

|

||||

chain

|

||||

.slice(1)

|

||||

.reverse()

|

||||

.forEach(parent => {

|

||||

console.warn(' ', parent)

|

||||

})

|

||||

force && console.warn(' merging…')

|

||||

console.warn('')

|

||||

if (force) {

|

||||

// `mergeVhd` does not work with a stream, either

|

||||

// - make it accept a stream

|

||||

// - or create synthetic VHD which is not a stream

|

||||

return console.warn('TODO: implement merge')

|

||||

// await mergeVhd(

|

||||

// handler,

|

||||

// parent,

|

||||

// handler,

|

||||

// children.length === 1

|

||||

// ? child

|

||||

// : await createSyntheticStream(handler, children)

|

||||

// )

|

||||

}

|

||||

|

||||

await Promise.all([

|

||||

force && fs.rename(parent, child),

|

||||

asyncMap(children.slice(0, -1), child => {

|

||||

console.warn('Unused VHD', child)

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

return force && handler.unlink(child)

|

||||

}),

|

||||

])

|

||||

}

|

||||

|

||||

const listVhds = pipe([

|

||||

vmDir => vmDir + '/vdis',

|

||||

readDir,

|

||||

asyncMap(readDir),

|

||||

flatten,

|

||||

asyncMap(readDir),

|

||||

flatten,

|

||||

filter(_ => _.endsWith('.vhd')),

|

||||

])

|

||||

|

||||

async function handleVm(vmDir) {

|

||||

const vhds = new Set()

|

||||

const vhdParents = { __proto__: null }

|

||||

const vhdChildren = { __proto__: null }

|

||||

|

||||

// remove broken VHDs

|

||||

await asyncMap(await listVhds(vmDir), async path => {

|

||||

try {

|

||||

const vhd = new Vhd(handler, path)

|

||||

await vhd.readHeaderAndFooter()

|

||||

vhds.add(path)

|

||||

if (vhd.footer.diskType === DISK_TYPE_DIFFERENCING) {

|

||||

const parent = resolve(dirname(path), vhd.header.parentUnicodeName)

|

||||

vhdParents[path] = parent

|

||||

if (parent in vhdChildren) {

|

||||

const error = new Error(

|

||||

'this script does not support multiple VHD children'

|

||||

)

|

||||

error.parent = parent

|

||||

error.child1 = vhdChildren[parent]

|

||||

error.child2 = path

|

||||

throw error // should we throw?

|

||||

}

|

||||

vhdChildren[parent] = path

|

||||

}

|

||||

} catch (error) {

|

||||

console.warn('Error while checking VHD', path)

|

||||

console.warn(' ', error)

|

||||

if (error != null && error.code === 'ERR_ASSERTION') {

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

force && (await handler.unlink(path))

|

||||

}

|

||||

}

|

||||

})

|

||||

|

||||

// remove VHDs with missing ancestors

|

||||

{

|

||||

const deletions = []

|

||||

|

||||

// return true if the VHD has been deleted or is missing

|

||||

const deleteIfOrphan = vhd => {

|

||||

const parent = vhdParents[vhd]

|

||||

if (parent === undefined) {

|

||||

return

|

||||

}

|

||||

|

||||

// no longer needs to be checked

|

||||

delete vhdParents[vhd]

|

||||

|

||||

deleteIfOrphan(parent)

|

||||

|

||||

if (!vhds.has(parent)) {

|

||||

vhds.delete(vhd)

|

||||

|

||||

console.warn('Error while checking VHD', vhd)

|

||||

console.warn(' missing parent', parent)

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

force && deletions.push(handler.unlink(vhd))

|

||||

}

|

||||

}

|

||||

|

||||

// > A property that is deleted before it has been visited will not be

|

||||

// > visited later.

|

||||

// >

|

||||

// > -- https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Statements/for...in#Deleted_added_or_modified_properties

|

||||

for (const child in vhdParents) {

|

||||

deleteIfOrphan(child)

|

||||

}

|

||||

|

||||

await Promise.all(deletions)

|

||||

}

|

||||

|

||||

const [jsons, xvas] = await readDir(vmDir).then(entries => [

|

||||

entries.filter(_ => _.endsWith('.json')),

|

||||

new Set(entries.filter(_ => _.endsWith('.xva'))),

|

||||

])

|

||||

|

||||

await asyncMap(xvas, async path => {

|

||||

// check is not good enough to delete the file, the best we can do is report

|

||||

// it

|

||||

if (!(await isValidXva(path))) {

|

||||

console.warn('Potential broken XVA', path)

|

||||

console.warn('')

|

||||

}

|

||||

})

|

||||

|

||||

const unusedVhds = new Set(vhds)

|

||||

const unusedXvas = new Set(xvas)

|

||||

|

||||

// compile the list of unused XVAs and VHDs, and remove backup metadata which

|

||||

// reference a missing XVA/VHD

|

||||

await asyncMap(jsons, async json => {

|

||||

const metadata = JSON.parse(await fs.readFile(json))

|

||||

const { mode } = metadata

|

||||

if (mode === 'full') {

|

||||

const linkedXva = resolve(vmDir, metadata.xva)

|

||||

|

||||

if (xvas.has(linkedXva)) {

|

||||

unusedXvas.delete(linkedXva)

|

||||

} else {

|

||||

console.warn('Error while checking backup', json)

|

||||

console.warn(' missing file', linkedXva)

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

force && (await handler.unlink(json))

|

||||

}

|

||||

} else if (mode === 'delta') {

|

||||

const linkedVhds = (() => {

|

||||

const { vhds } = metadata

|

||||

return Object.keys(vhds).map(key => resolve(vmDir, vhds[key]))

|

||||

})()

|

||||

|

||||

// FIXME: find better approach by keeping as much of the backup as

|

||||

// possible (existing disks) even if one disk is missing

|

||||

if (linkedVhds.every(_ => vhds.has(_))) {

|

||||

linkedVhds.forEach(_ => unusedVhds.delete(_))

|

||||

} else {

|

||||

console.warn('Error while checking backup', json)

|

||||

const missingVhds = linkedVhds.filter(_ => !vhds.has(_))

|

||||

console.warn(

|

||||

' %i/%i missing VHDs',

|

||||

missingVhds.length,

|

||||

linkedVhds.length

|

||||

)

|

||||

missingVhds.forEach(vhd => {

|

||||

console.warn(' ', vhd)

|

||||

})

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

force && (await handler.unlink(json))

|

||||

}

|

||||

}

|

||||

})

|

||||

|

||||

// TODO: parallelize by vm/job/vdi

|

||||

const unusedVhdsDeletion = []

|

||||

{

|

||||

// VHD chains (as list from child to ancestor) to merge indexed by last

|

||||

// ancestor

|

||||

const vhdChainsToMerge = { __proto__: null }

|

||||

|

||||

const toCheck = new Set(unusedVhds)

|

||||

|

||||

const getUsedChildChainOrDelete = vhd => {

|

||||

if (vhd in vhdChainsToMerge) {

|

||||

const chain = vhdChainsToMerge[vhd]

|

||||

delete vhdChainsToMerge[vhd]

|

||||

return chain

|

||||

}

|

||||

|

||||

if (!unusedVhds.has(vhd)) {

|

||||

return [vhd]

|

||||

}

|

||||

|

||||

// no longer needs to be checked

|

||||

toCheck.delete(vhd)

|

||||

|

||||

const child = vhdChildren[vhd]

|

||||

if (child !== undefined) {

|

||||

const chain = getUsedChildChainOrDelete(child)

|

||||

if (chain !== undefined) {

|

||||

chain.push(vhd)

|

||||

return chain

|

||||

}

|

||||

}

|

||||

|

||||

console.warn('Unused VHD', vhd)

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

force && unusedVhdsDeletion.push(handler.unlink(vhd))

|

||||

}

|

||||

|

||||

toCheck.forEach(vhd => {

|

||||

vhdChainsToMerge[vhd] = getUsedChildChainOrDelete(vhd)

|

||||

})

|

||||

|

||||

Object.keys(vhdChainsToMerge).forEach(key => {

|

||||

const chain = vhdChainsToMerge[key]

|

||||

if (chain !== undefined) {

|

||||

unusedVhdsDeletion.push(mergeVhdChain(chain))

|

||||

}

|

||||

})

|

||||

}

|

||||

|

||||

await Promise.all([

|

||||

...unusedVhdsDeletion,

|

||||

asyncMap(unusedXvas, path => {

|

||||

console.warn('Unused XVA', path)

|

||||

force && console.warn(' deleting…')

|

||||

console.warn('')

|

||||

return force && handler.unlink(path)

|

||||

}),

|

||||

])

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

module.exports = async function main(args) {

|

||||

const opts = getopts(args, {

|

||||

alias: {

|

||||

force: 'f',

|

||||

},

|

||||

boolean: ['force'],

|

||||

default: {

|

||||

force: false,

|

||||

},

|

||||

})

|

||||

|

||||

;({ force } = opts)

|

||||

await asyncMap(opts._, async vmDir => {

|

||||

vmDir = resolve(vmDir)

|

||||

|

||||

// TODO: implement this in `xo-server`, not easy because not compatible with

|

||||

// `@xen-orchestra/fs`.

|

||||

const release = await lockfile.lock(vmDir)

|

||||

try {

|

||||

await handleVm(vmDir)

|

||||

} catch (error) {

|

||||

console.error('handleVm', vmDir, error)

|

||||

} finally {

|

||||

await release()

|

||||

}

|

||||

})

|

||||

}

|

||||
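The XVA checks in `clean-vms.js` rest on two byte-level heuristics: the gzip magic number, and the two zero-filled 512-byte blocks that terminate a tar archive. A minimal sketch of both follows, operating on in-memory buffers rather than file descriptors. The function names and the sample buffers are made up for illustration, and, as the comments in the diff admit, this kind of check can misjudge real files.

```js
// gzip streams start with the two magic bytes 0x1f 0x8b (RFC 1952)
const looksLikeGzip = buf => buf.length >= 2 && buf[0] === 0x1f && buf[1] === 0x8b

// a tar archive is made of 512-byte blocks and ends with two zero-filled blocks
const looksLikeCompleteTar = buf => {
  const { length } = buf
  if (length <= 1024 || length % 512 !== 0) {
    return false
  }
  return buf.subarray(length - 1024).every(byte => byte === 0)
}

// hypothetical samples: one data block followed by the end-of-archive marker,
// and the same buffer cut short
const complete = Buffer.concat([Buffer.alloc(512, 1), Buffer.alloc(1024)])
const truncated = complete.subarray(0, 1024)
```

With these samples, `looksLikeCompleteTar(complete)` is `true` and `looksLikeCompleteTar(truncated)` is `false`, because the truncated buffer is not longer than the 1024-byte trailer.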

13

@xen-orchestra/backups-cli/index.js

Executable file

@@ -0,0 +1,13 @@

#!/usr/bin/env node

require('./_composeCommands')({
  'clean-vms': {
    get main() {
      return require('./commands/clean-vms')
    },
    usage: '[--force] xo-vm-backups/*',
  },
})(process.argv.slice(2), 'xo-backups').catch(error => {
  console.error('main', error)
  process.exitCode = 1
})

28

@xen-orchestra/backups-cli/package.json

Normal file

@@ -0,0 +1,28 @@

{
  "bin": {
    "xo-backups": "index.js"
  },
  "bugs": "https://github.com/vatesfr/xen-orchestra/issues",
  "dependencies": {
    "@xen-orchestra/fs": "^0.10.2",
    "getopts": "^2.2.5",
    "lodash": "^4.17.15",
    "promise-toolbox": "^0.14.0",
    "proper-lockfile": "^4.1.1",
    "vhd-lib": "^0.7.2"
  },
  "engines": {
    "node": ">=7.10.1"
  },
  "homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/backups-cli",
  "name": "@xen-orchestra/backups-cli",
  "repository": {
    "directory": "@xen-orchestra/backups-cli",
    "type": "git",
    "url": "https://github.com/vatesfr/xen-orchestra.git"
  },
  "scripts": {
    "postversion": "npm publish --access public"
  },
  "version": "0.0.0"
}

@@ -16,7 +16,7 @@

|

||||

},

|

||||

"dependencies": {

|

||||

"golike-defer": "^0.4.1",

|

||||

"xen-api": "^0.27.2"

|

||||

"xen-api": "^0.27.3"

|

||||

},

|

||||

"scripts": {

|

||||

"postversion": "npm publish"

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@xen-orchestra/cron",

|

||||

"version": "1.0.4",

|

||||

"version": "1.0.6",

|

||||

"license": "ISC",

|

||||

"description": "Focused, well maintained, cron parser/scheduler",

|

||||

"keywords": [

|

||||

@@ -46,7 +46,7 @@

|

||||

"@babel/core": "^7.0.0",

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"@babel/preset-flow": "^7.0.0",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

"scripts": {

|

||||

|

||||

@@ -5,14 +5,21 @@ import parse from './parse'

|

||||

|

||||

const MAX_DELAY = 2 ** 31 - 1

|

||||

|

||||

function nextDelay(schedule) {

|

||||

const now = schedule._createDate()

|

||||

return next(schedule._schedule, now) - now

|

||||

}

|

||||

|

||||

class Job {

|

||||

constructor(schedule, fn) {

|

||||

let scheduledDate

|

||||

const wrapper = () => {

|

||||

const now = Date.now()

|

||||

if (scheduledDate > now) {

|

||||

// we're early, delay

|

||||

//

|

||||

// no need to check _isEnabled, we're just delaying the existing timeout

|

||||

//

|

||||

// see https://github.com/vatesfr/xen-orchestra/issues/4625

|

||||

this._timeout = setTimeout(wrapper, scheduledDate - now)

|

||||

return

|

||||

}

|

||||

|

||||

this._isRunning = true

|

||||

|

||||

let result

|

||||

@@ -32,7 +39,9 @@ class Job {

|

||||

this._isRunning = false

|

||||

|

||||

if (this._isEnabled) {

|

||||

const delay = nextDelay(schedule)

|

||||

const now = schedule._createDate()

|

||||

scheduledDate = +next(schedule._schedule, now)

|

||||

const delay = scheduledDate - now

|

||||

this._timeout =

|

||||

delay < MAX_DELAY

|

||||

? setTimeout(wrapper, delay)

|

||||

|

||||
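The cron change above guards against a timer firing before its scheduled date: the wrapper compares the scheduled date with the current time and re-arms itself for the remainder instead of running the job. A small sketch of that guard, detached from the `Job` class, is shown below. The `now` and `setTimer` parameters are hypothetical injection points added so the sketch runs without real timers; they are not part of `@xen-orchestra/cron`.

```js
// `now` and `setTimer` are injected for illustration; the real code uses
// Date.now() and setTimeout directly.
function createGuardedCallback(scheduledDate, fn, now, setTimer) {
  const wrapper = () => {
    const current = now()
    if (scheduledDate > current) {
      // fired too early: re-arm for the remaining time instead of running
      setTimer(wrapper, scheduledDate - current)
      return
    }
    fn()
  }
  return wrapper
}

// fake clock: the timer fires 5 ms early once, then on time
let clock = 995
let calls = 0
const rearmed = []
const wrapper = createGuardedCallback(
  1000,
  () => {
    ++calls
  },
  () => clock,
  (cb, delay) => {
    rearmed.push(delay)
  }
)

wrapper() // early: re-armed with the remaining delay, job not run
clock = 1000
wrapper() // on time: job runs
```

After both calls the job has run once and a single re-arm with a delay of 5 has been recorded.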

@@ -2,12 +2,24 @@

|

||||

|

||||

import { createSchedule } from './'

|

||||

|

||||

const wrap = value => () => value

|

||||

|

||||

describe('issues', () => {

|

||||

let originalDateNow

|

||||

beforeAll(() => {

|

||||

originalDateNow = Date.now

|

||||

})

|

||||

afterAll(() => {

|

||||

Date.now = originalDateNow

|

||||

originalDateNow = undefined

|

||||

})

|

||||

|

||||

test('stop during async execution', async () => {

|

||||

let nCalls = 0

|

||||

let resolve, promise

|

||||

|

||||

const job = createSchedule('* * * * *').createJob(() => {

|

||||

const schedule = createSchedule('* * * * *')

|

||||

const job = schedule.createJob(() => {

|

||||

++nCalls

|

||||

|

||||

// eslint-disable-next-line promise/param-names

|

||||

@@ -18,6 +30,7 @@ describe('issues', () => {

|

||||

})

|

||||

|

||||

job.start()

|

||||

Date.now = wrap(+schedule.next(1)[0])

|

||||

jest.runAllTimers()

|

||||

|

||||

expect(nCalls).toBe(1)

|

||||

@@ -35,7 +48,8 @@ describe('issues', () => {

|

||||

let nCalls = 0

|

||||

let resolve, promise

|

||||

|

||||

const job = createSchedule('* * * * *').createJob(() => {

|

||||

const schedule = createSchedule('* * * * *')

|

||||

const job = schedule.createJob(() => {

|

||||

++nCalls

|

||||

|

||||

// eslint-disable-next-line promise/param-names

|

||||

@@ -46,6 +60,7 @@ describe('issues', () => {

|

||||

})

|

||||

|

||||

job.start()

|

||||

Date.now = wrap(+schedule.next(1)[0])

|

||||

jest.runAllTimers()

|

||||

|

||||

expect(nCalls).toBe(1)

|

||||

@@ -56,6 +71,7 @@ describe('issues', () => {

|

||||

resolve()

|

||||

await promise

|

||||

|

||||

Date.now = wrap(+schedule.next(1)[0])

|

||||

jest.runAllTimers()

|

||||

expect(nCalls).toBe(2)

|

||||

})

|

||||

|

||||

@@ -1,13 +1,13 @@

|

||||

# ${pkg.name} [](https://travis-ci.org/${pkg.shortGitHubPath})

|

||||

# @xen-orchestra/defined [](https://travis-ci.org/${pkg.shortGitHubPath})

|

||||

|

||||

> ${pkg.description}

|

||||

|

||||

## Install

|

||||

|

||||

Installation of the [npm package](https://npmjs.org/package/${pkg.name}):

|

||||

Installation of the [npm package](https://npmjs.org/package/@xen-orchestra/defined):

|

||||

|

||||

```

|

||||

> npm install --save ${pkg.name}

|

||||

> npm install --save @xen-orchestra/defined

|

||||

```

|

||||

|

||||

## Usage

|

||||

@@ -40,10 +40,10 @@ the code.

|

||||

|

||||

You may:

|

||||

|

||||

- report any [issue](${pkg.bugs})

|

||||

- report any [issue](https://github.com/vatesfr/xen-orchestra/issues)

|

||||

you've encountered;

|

||||

- fork and create a pull request.

|

||||

|

||||

## License

|

||||

|

||||

${pkg.license} © [${pkg.author.name}](${pkg.author.url})

|

||||

ISC © [Vates SAS](https://vates.fr)

|

||||

|

||||

@@ -34,7 +34,7 @@

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"@babel/preset-flow": "^7.0.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

"scripts": {

|

||||

|

||||

@@ -62,10 +62,10 @@ the code.

|

||||

|

||||

You may:

|

||||

|

||||

- report any [issue](${pkg.bugs})

|

||||

- report any [issue](https://github.com/vatesfr/xen-orchestra/issues)

|

||||

you've encountered;

|

||||

- fork and create a pull request.

|

||||

|

||||

## License

|

||||

|

||||

${pkg.license} © [${pkg.author.name}](${pkg.author.url})

|

||||

ISC © [Vates SAS](https://vates.fr)

|

||||

|

||||

@@ -33,7 +33,7 @@

|

||||

"@babel/core": "^7.0.0",

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

"scripts": {

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@xen-orchestra/fs",

|

||||

"version": "0.10.1",

|

||||

"version": "0.10.2",

|

||||

"license": "AGPL-3.0",

|

||||

"description": "The File System for Xen Orchestra backups.",

|

||||

"keywords": [],

|

||||

@@ -18,19 +18,19 @@

|

||||

"dist/"

|

||||

],

|

||||

"engines": {

|

||||

"node": ">=6"

|

||||

"node": ">=8.10"

|

||||

},

|

||||

"dependencies": {

|

||||

"@marsaud/smb2": "^0.14.0",

|

||||

"@sindresorhus/df": "^2.1.0",

|

||||

"@sindresorhus/df": "^3.1.1",

|

||||

"@xen-orchestra/async-map": "^0.0.0",

|

||||

"decorator-synchronized": "^0.5.0",

|

||||

"execa": "^1.0.0",

|

||||

"execa": "^3.2.0",

|

||||

"fs-extra": "^8.0.1",

|

||||

"get-stream": "^4.0.0",

|

||||

"get-stream": "^5.1.0",

|

||||

"limit-concurrency-decorator": "^0.4.0",

|

||||

"lodash": "^4.17.4",

|

||||

"promise-toolbox": "^0.13.0",

|

||||

"promise-toolbox": "^0.14.0",

|

||||

"readable-stream": "^3.0.6",

|

||||

"through2": "^3.0.0",

|

||||

"tmp": "^0.1.0",

|

||||

@@ -46,7 +46,7 @@

|

||||

"@babel/preset-flow": "^7.0.0",

|

||||

"async-iterator-to-stream": "^1.1.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"dotenv": "^8.0.0",

|

||||

"index-modules": "^0.3.0",

|

||||

"rimraf": "^3.0.0"

|

||||

|

||||

@@ -389,7 +389,7 @@ export default class RemoteHandlerAbstract {

|

||||

async test(): Promise<Object> {

|

||||

const SIZE = 1024 * 1024 * 10

|

||||

const testFileName = normalizePath(`${Date.now()}.test`)

|

||||

const data = await fromCallback(cb => randomBytes(SIZE, cb))

|

||||

const data = await fromCallback(randomBytes, SIZE)

|

||||

let step = 'write'

|

||||

try {

|

||||

const writeStart = process.hrtime()

|

||||

|

||||
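The hunks in `@xen-orchestra/fs` switch from wrapping the call in a closure to passing the function and its arguments straight to `fromCallback`. The sketch below shows why the two shapes are interchangeable, using a minimal stand-in written for this note; it is not promise-toolbox's implementation, and `add` is a hypothetical Node-style function.

```js
// Minimal stand-in for promise-toolbox's fromCallback, for illustration only;
// the real implementation may differ.
const fromCallback = (fn, ...args) =>
  new Promise((resolve, reject) => {
    fn(...args, (error, result) => (error != null ? reject(error) : resolve(result)))
  })

// `add` is a hypothetical function taking a trailing Node-style callback
const seen = []
const add = (a, b, cb) => {
  seen.push(a, b)
  cb(null, a + b)
}

// both call shapes hand the same arguments to `add`
const viaClosure = fromCallback(cb => add(1, 2, cb))
const direct = fromCallback(add, 1, 2)
```

The direct form avoids allocating a closure per call and reads closer to the underlying function signature.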

@@ -86,7 +86,7 @@ handlers.forEach(url => {

|

||||

describe('#createOutputStream()', () => {

|

||||

it('creates parent dir if missing', async () => {

|

||||

const stream = await handler.createOutputStream('dir/file')

|

||||

await fromCallback(cb => pipeline(createTestDataStream(), stream, cb))

|

||||

await fromCallback(pipeline, createTestDataStream(), stream)

|

||||

await expect(await handler.readFile('dir/file')).toEqual(TEST_DATA)

|

||||

})

|

||||

})

|

||||

@@ -106,7 +106,7 @@ handlers.forEach(url => {

|

||||

describe('#createWriteStream()', () => {

|

||||

testWithFileDescriptor('file', 'wx', async ({ file, flags }) => {

|

||||

const stream = await handler.createWriteStream(file, { flags })

|

||||

await fromCallback(cb => pipeline(createTestDataStream(), stream, cb))

|

||||

await fromCallback(pipeline, createTestDataStream(), stream)

|

||||

await expect(await handler.readFile('file')).toEqual(TEST_DATA)

|

||||

})

|

||||

|

||||

|

||||

@@ -47,8 +47,19 @@ export default class LocalHandler extends RemoteHandlerAbstract {

|

||||

})

|

||||

}

|

||||

|

||||

_getInfo() {

|

||||

return df.file(this._getFilePath('/'))

|

||||

async _getInfo() {

|

||||

// df.file() resolves with an object with the following properties:

|

||||

// filesystem, type, size, used, available, capacity and mountpoint.

|

||||

// size, used, available and capacity may be `NaN` so we remove any `NaN`

|

||||

// value from the object.

|

||||

const info = await df.file(this._getFilePath('/'))

|

||||

Object.keys(info).forEach(key => {

|

||||

if (Number.isNaN(info[key])) {

|

||||

delete info[key]

|

||||

}

|

||||

})

|

||||

|

||||

return info

|

||||

}

|

||||

|

||||

async _getSize(file) {

|

||||

|

||||
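The new `_getInfo()` strips `NaN` values from the object returned by `df.file()`. A standalone sketch of that filtering step is below. It copies the object instead of mutating it, which is a choice made for this example only, and the sample input is a made-up `df`-like record.

```js
// returns a shallow copy of `info` without the properties whose value is NaN
const withoutNaN = info => {
  const result = { ...info }
  Object.keys(result).forEach(key => {
    if (Number.isNaN(result[key])) {
      delete result[key]
    }
  })
  return result
}

// hypothetical df-like output for a filesystem that does not report sizes
const cleaned = withoutNaN({
  filesystem: 'remote:/share',
  size: NaN,
  used: NaN,
  available: 42,
  mountpoint: '/mnt',
})
```

One reason such cleanup can matter, offered as a guess rather than the commit's stated motivation: `JSON.stringify` turns `NaN` into `null`, so a consumer would otherwise see `null` sizes instead of missing ones.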

@@ -15,7 +15,7 @@ Installation of the [npm package](https://npmjs.org/package/@xen-orchestra/log):

|

||||

Everywhere something should be logged:

|

||||

|

||||

```js

|

||||

import createLogger from '@xen-orchestra/log'

|

||||

import { createLogger } from '@xen-orchestra/log'

|

||||

|

||||

const log = createLogger('my-module')

|

||||

|

||||

@@ -42,6 +42,7 @@ log.error('could not join server', {

|

||||

Then, at application level, configure how the logs are handled:

|

||||

|

||||

```js

|

||||

import { createLogger } from '@xen-orchestra/log'

|

||||

import { configure, catchGlobalErrors } from '@xen-orchestra/log/configure'

|

||||

import transportConsole from '@xen-orchestra/log/transports/console'

|

||||

import transportEmail from '@xen-orchestra/log/transports/email'

|

||||

@@ -77,8 +78,8 @@ configure([

|

||||

])

|

||||

|

||||

// send all global errors (uncaught exceptions, warnings, unhandled rejections)

|

||||

// to this transport

|

||||

catchGlobalErrors(transport)

|

||||

// to this logger

|

||||

catchGlobalErrors(createLogger('app'))

|

||||

```

|

||||

|

||||

### Transports

|

||||

|

||||

@@ -31,14 +31,14 @@

|

||||

},

|

||||

"dependencies": {

|

||||

"lodash": "^4.17.4",

|

||||

"promise-toolbox": "^0.13.0"

|

||||

"promise-toolbox": "^0.14.0"

|

||||

},

|

||||

"devDependencies": {

|

||||

"@babel/cli": "^7.0.0",

|

||||

"@babel/core": "^7.0.0",

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"index-modules": "^0.3.0",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

@@ -48,7 +48,7 @@

|

||||

"dev": "cross-env NODE_ENV=development babel --watch --source-maps --out-dir=dist/ src/",

|

||||

"prebuild": "yarn run clean",

|

||||

"predev": "yarn run prebuild",

|

||||

"prepublishOnly": "yarn run build",

|

||||

"prepare": "yarn run build",

|

||||

"postversion": "npm publish"

|

||||

}

|

||||

}

|

||||

|

||||

@@ -1,13 +1,13 @@

|

||||

# ${pkg.name} [](https://travis-ci.org/${pkg.shortGitHubPath})

|

||||

# @xen-orchestra/mixin [](https://travis-ci.org/${pkg.shortGitHubPath})

|

||||

|

||||

> ${pkg.description}

|

||||

|

||||

## Install

|

||||

|

||||

Installation of the [npm package](https://npmjs.org/package/${pkg.name}):

|

||||

Installation of the [npm package](https://npmjs.org/package/@xen-orchestra/mixin):

|

||||

|

||||

```

|

||||

> npm install --save ${pkg.name}

|

||||

> npm install --save @xen-orchestra/mixin

|

||||

```

|

||||

|

||||

## Usage

|

||||

@@ -40,10 +40,10 @@ the code.

|

||||

|

||||

You may:

|

||||

|

||||

- report any [issue](${pkg.bugs})

|

||||

- report any [issue](https://github.com/vatesfr/xen-orchestra/issues)

|

||||

you've encountered;

|

||||

- fork and create a pull request.

|

||||

|

||||

## License

|

||||

|

||||

${pkg.license} © [${pkg.author.name}](${pkg.author.url})

|

||||

ISC © [Vates SAS](https://vates.fr)

|

||||

|

||||

@@ -36,7 +36,7 @@

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"babel-plugin-dev": "^1.0.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

"scripts": {

|

||||

|

||||

@@ -28,7 +28,7 @@

|

||||

"@babel/cli": "^7.0.0",

|

||||

"@babel/core": "^7.0.0",

|

||||

"@babel/preset-env": "^7.0.0",

|

||||

"cross-env": "^5.1.3",

|

||||

"cross-env": "^6.0.3",

|

||||

"rimraf": "^3.0.0"

|

||||

},

|

||||

"scripts": {

|

||||

|

||||

114

CHANGELOG.md


@@ -4,18 +4,124 @@

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Backup NG] Make report recipients configurable in the backup settings [#4581](https://github.com/vatesfr/xen-orchestra/issues/4581) (PR [#4646](https://github.com/vatesfr/xen-orchestra/pull/4646))

|

||||

- [SAML] Setting to disable requested authentication context (helps with _Active Directory_) (PR [#4675](https://github.com/vatesfr/xen-orchestra/pull/4675))

|

||||

- The default sign-in page can be configured via `authentication.defaultSignInPage` (PR [#4678](https://github.com/vatesfr/xen-orchestra/pull/4678))

|

||||

- [SR] Allow import of VHD and VMDK disks [#4137](https://github.com/vatesfr/xen-orchestra/issues/4137) (PR [#4138](https://github.com/vatesfr/xen-orchestra/pull/4138))

|

||||

- [Host] Advanced Live Telemetry (PR [#4680](https://github.com/vatesfr/xen-orchestra/pull/4680))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [Metadata backup] Add 10 minutes timeout to avoid stuck jobs [#4657](https://github.com/vatesfr/xen-orchestra/issues/4657) (PR [#4666](https://github.com/vatesfr/xen-orchestra/pull/4666))

|

||||

- [Metadata backups] Fix out-of-date listing for 1 minute due to cache (PR [#4672](https://github.com/vatesfr/xen-orchestra/pull/4672))

|

||||

- [Delta backup] Limit the number of merged deltas per run to avoid interrupted jobs (PR [#4674](https://github.com/vatesfr/xen-orchestra/pull/4674))

|

||||

|

||||

### Released packages

|

||||

|

||||

- xo-server v5.51.0

|

||||

- vhd-lib v0.7.2

|

||||

- xo-vmdk-to-vhd v0.1.8

|

||||

- xo-server-auth-ldap v0.6.6

|

||||

- xo-server-auth-saml v0.7.0

|

||||

- xo-server-backup-reports v0.16.4

|

||||

- @xen-orchestra/fs v0.10.2

|

||||

- xo-server v5.53.0

|

||||

- xo-web v5.53.1

|

||||

|

||||

## **5.40.2** (2019-11-22)

|

||||

|

||||

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Logs] Ability to report a bug with attached log (PR [#4201](https://github.com/vatesfr/xen-orchestra/pull/4201))

|

||||

- [Backup] Reduce _VDI chain protection error_ occurrence by being more tolerant (configurable via `xo-server`'s `xapiOptions.maxUncoalescedVdis` setting) [#4124](https://github.com/vatesfr/xen-orchestra/issues/4124) (PR [#4651](https://github.com/vatesfr/xen-orchestra/pull/4651))

|

||||

- [Plugin] [Web hooks](https://xen-orchestra.com/docs/web-hooks.html) [#1946](https://github.com/vatesfr/xen-orchestra/issues/1946) (PR [#3155](https://github.com/vatesfr/xen-orchestra/pull/3155))

|

||||

- [Tables] Always put the tables' search in the URL [#4542](https://github.com/vatesfr/xen-orchestra/issues/4542) (PR [#4637](https://github.com/vatesfr/xen-orchestra/pull/4637))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [SDN controller] Prevent private network creation on bond slave PIF (Fixes https://github.com/xcp-ng/xcp/issues/300) (PR [#4633](https://github.com/vatesfr/xen-orchestra/pull/4633))

|

||||

- [Metadata backup] Fix failed backup reported as successful [#4596](https://github.com/vatesfr/xen-orchestra/issues/4596) (PR [#4598](https://github.com/vatesfr/xen-orchestra/pull/4598))

|

||||

- [Backup NG] Fix "task cancelled" error when the backup job timeout exceeds 596 hours [#4662](https://github.com/vatesfr/xen-orchestra/issues/4662) (PR [#4663](https://github.com/vatesfr/xen-orchestra/pull/4663))

|

||||

- Fix `promise rejected with non-error` warnings in logs (PR [#4659](https://github.com/vatesfr/xen-orchestra/pull/4659))

|

||||

|

||||

### Released packages

|

||||

|

||||

- xo-server-web-hooks v0.1.0

|

||||

- xen-api v0.27.3

|

||||

- xo-server-backup-reports v0.16.3

|

||||

- vhd-lib v0.7.1

|

||||

- xo-server v5.52.1

|

||||

- xo-web v5.52.0

|

||||

|

||||

## **5.40.1** (2019-10-29)

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [XOSAN] Fix "Install Cloud plugin" warning (PR [#4631](https://github.com/vatesfr/xen-orchestra/pull/4631))

|

||||

|

||||

### Released packages

|

||||

|

||||

- xo-web v5.51.1

|

||||

|

||||

## **5.40.0** (2019-10-29)

|

||||

|

||||

### Breaking changes

|

||||

|

||||

- `xo-server` requires Node 8.

|

||||

|

||||

### Highlights

|

||||

|

||||

- [Backup NG] Offline backup feature [#3449](https://github.com/vatesfr/xen-orchestra/issues/3449) (PR [#4470](https://github.com/vatesfr/xen-orchestra/pull/4470))

|

||||

- [Menu] Remove legacy backup entry [#4467](https://github.com/vatesfr/xen-orchestra/issues/4467) (PR [#4476](https://github.com/vatesfr/xen-orchestra/pull/4476))

|

||||

- [Hub] Ability to update existing template (PR [#4613](https://github.com/vatesfr/xen-orchestra/pull/4613))

|

||||

- [Support] Ability to open and close support tunnel from the user interface [#4513](https://github.com/vatesfr/xen-orchestra/issues/4513) (PR [#4616](https://github.com/vatesfr/xen-orchestra/pull/4616))

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Hub] Ability to select SR in hub VM installation (PR [#4571](https://github.com/vatesfr/xen-orchestra/pull/4571))

|

||||

- [Hub] Display more info about downloadable templates (PR [#4593](https://github.com/vatesfr/xen-orchestra/pull/4593))

|

||||

- [xo-server-transport-icinga2] Add support of [icinga2](https://icinga.com/docs/icinga2/latest/doc/12-icinga2-api/) for reporting services status [#4563](https://github.com/vatesfr/xen-orchestra/issues/4563) (PR [#4573](https://github.com/vatesfr/xen-orchestra/pull/4573))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [SR] Fix `[object HTMLInputElement]` name after re-attaching a SR [#4546](https://github.com/vatesfr/xen-orchestra/issues/4546) (PR [#4550](https://github.com/vatesfr/xen-orchestra/pull/4550))

|

||||

- [Schedules] Prevent double runs [#4625](https://github.com/vatesfr/xen-orchestra/issues/4625) (PR [#4626](https://github.com/vatesfr/xen-orchestra/pull/4626))

|

||||

- [Schedules] Properly enable/disable on config import (PR [#4624](https://github.com/vatesfr/xen-orchestra/pull/4624))

|

||||

|

||||

### Released packages

|

||||

|

||||

- @xen-orchestra/cron v1.0.6

|

||||

- xo-server-transport-icinga2 v0.1.0

|

||||

- xo-server-sdn-controller v0.3.1

|

||||

- xo-server v5.51.1

|

||||

- xo-web v5.51.0

|

||||

|

||||

### Dropped packages

|

||||

|

||||

- xo-server-cloud : this package was useless for OpenSource installations because it required a complete XOA environment

|

||||

|

||||

|

||||

## **5.39.1** (2019-10-11)

|

||||

|

||||

|

||||

|

||||

### Enhancements

|

||||

|

||||

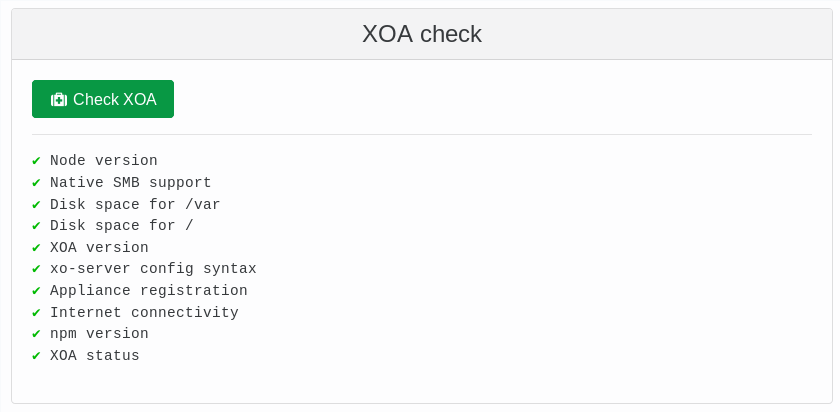

- [Support] Ability to check the XOA on the user interface [#4513](https://github.com/vatesfr/xen-orchestra/issues/4513) (PR [#4574](https://github.com/vatesfr/xen-orchestra/pull/4574))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [VM/new-vm] Fix template selection on creating new VM for resource sets [#4565](https://github.com/vatesfr/xen-orchestra/issues/4565) (PR [#4568](https://github.com/vatesfr/xen-orchestra/pull/4568))

|

||||

- [VM] Clearer invalid cores per socket error [#4120](https://github.com/vatesfr/xen-orchestra/issues/4120) (PR [#4187](https://github.com/vatesfr/xen-orchestra/pull/4187))

|

||||

|

||||

### Released packages

|

||||

|

||||

- xo-web v5.50.3

|

||||

|

||||

|

||||

## **5.39.0** (2019-09-30)

|

||||

|

||||

|

||||

|

||||

### Highlights

|

||||

|

||||

- [VM/console] Add a button to connect to the VM via the local SSH client (PR [#4415](https://github.com/vatesfr/xen-orchestra/pull/4415))

|

||||

@@ -69,8 +175,6 @@

|

||||

|

||||

## **5.38.0** (2019-08-29)

|

||||

|

||||

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [VM/Attach disk] Display confirmation modal when VDI is already attached [#3381](https://github.com/vatesfr/xen-orchestra/issues/3381) (PR [#4366](https://github.com/vatesfr/xen-orchestra/pull/4366))

|

||||

|

||||

@@ -11,7 +11,8 @@

|

||||

|

||||

> Users must be able to say: “I had this issue, happy to know it's fixed”

|

||||

|

||||

- [VM/new-vm] Fix template selection on creating new VM for resource sets [#4565](https://github.com/vatesfr/xen-orchestra/issues/4565) (PR [#4568](https://github.com/vatesfr/xen-orchestra/pull/4568))

|

||||

- [Host] Fix Enable Live Telemetry button state (PR [#4686](https://github.com/vatesfr/xen-orchestra/pull/4686))

|

||||

- [Host] Fix Advanced Live Telemetry URL (PR [#4687](https://github.com/vatesfr/xen-orchestra/pull/4687))

|

||||

|

||||

### Released packages

|

||||

|

||||

@@ -20,5 +21,5 @@

|

||||

>

|

||||

> Rule of thumb: add packages on top.

|

||||

|

||||

- xo-server v5.51.0

|

||||

- xo-web v5.51.0

|

||||

- xo-server v5.54.0

|

||||

- xo-web v5.54.0

|

||||

|

||||

@@ -51,6 +51,7 @@

|

||||

* [Health](health.md)

|

||||

* [Job manager](scheduler.md)

|

||||

* [Alerts](alerts.md)

|

||||

* [Web hooks](web-hooks.md)

|

||||

* [Load balancing](load_balancing.md)

|

||||

* [Emergency Shutdown](emergency_shutdown.md)

|

||||

* [Auto scalability](auto_scalability.md)

|

||||

|

||||

BIN

docs/assets/release-channels.png

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 99 KiB |

@@ -22,7 +22,7 @@ group = 'nogroup'

|

||||

By default, XO-server listens on all addresses (0.0.0.0) and runs on port 80. If you need to, you can change this in the `# Basic HTTP` section:

|

||||

|

||||

```toml

|

||||

host = '0.0.0.0'

|

||||

hostname = '0.0.0.0'

|

||||

port = 80

|

||||

```

|

||||

|

||||

@@ -31,7 +31,7 @@ port = 80

|

||||

XO-server can also run in HTTPS (you can run HTTP and HTTPS at the same time) - just modify what's needed in the `# Basic HTTPS` section, this time with the certificates/keys you need and their path:

|

||||

|

||||

```toml

|

||||

host = '0.0.0.0'

|

||||

hostname = '0.0.0.0'

|

||||

port = 443

|

||||

certificate = './certificate.pem'

|

||||

key = './key.pem'

|

||||

@@ -43,10 +43,10 @@ key = './key.pem'

|

||||

|

||||

If you want to redirect everything to HTTPS, you can modify the configuration like this:

|

||||

|

||||

```

|

||||

```toml

|

||||

# If set to true, all HTTP traffic will be redirected to the first HTTPS configuration.

|

||||

|

||||

redirectToHttps: true

|

||||

redirectToHttps = true

|

||||

```

|

||||

|

||||

This should be written just before the `mount` option, inside the `http:` block.

|

||||

|

||||

@@ -20,7 +20,7 @@ We'll consider at this point that you've got a working node on your box. E.g:

|

||||

|

||||

```

|

||||

$ node -v

|

||||

v8.12.0

|

||||

v8.16.2

|

||||

```

|

||||

|

||||

If not, see [this page](https://nodejs.org/en/download/package-manager/) for instructions on how to install Node.

|

||||

@@ -65,17 +65,13 @@ Now you have to create a config file for `xo-server`:

|

||||

|

||||

```

|

||||

$ cd packages/xo-server

|

||||

$ cp sample.config.toml .xo-server.toml

|

||||

$ mkdir -p ~/.config/xo-server

|

||||

$ cp sample.config.toml ~/.config/xo-server/config.toml

|

||||

```

|

||||

|

||||

Edit and uncomment it to have the right path to serve `xo-web`, because `xo-server` embeds an HTTP server (we assume that `xen-orchestra` and `xo-web` are in the same directory):

|

||||

> Note: If you're installing `xo-server` as a global service, you may want to copy the file to `/etc/xo-server/config.toml` instead.

|

||||

|

||||

```toml

|

||||

[http.mounts]

|

||||

'/' = '../xo-web/dist/'

|

||||

```

|

||||

|

||||

In this config file, you can also change default ports (80 and 443) for xo-server. If you are running the server as a non-root user, you will need to set the port to 1024 or higher.

|

||||

In this config file, you can change default ports (80 and 443) for xo-server. If you are running the server as a non-root user, you will need to set the port to 1024 or higher.

|

||||

|

||||

You can try to start xo-server to see if it works. You should have something like this:

|

||||

|

||||

@@ -186,7 +182,7 @@ service redis start

|

||||

|

||||

## SUDO

|

||||

|

||||

If you are running `xo-server` as a non-root user, you need to use `sudo` to be able to mount NFS remotes. You can do this by editing `xo-server/.xo-server.toml` and setting `useSudo = true`. It's near the end of the file:

|

||||

If you are running `xo-server` as a non-root user, you need to use `sudo` to be able to mount NFS remotes. You can do this by editing `xo-server` configuration file and setting `useSudo = true`. It's near the end of the file:

|

||||

|

||||

```

|

||||

useSudo = true

|

||||

|

||||

@@ -41,6 +41,20 @@ However, if you want to start a manual check, you can do it by clicking on the "

|

||||

|

||||

|

||||

|

||||

#### Release channel

|

||||

In Xen Orchestra, you can make a choice between two different release channels.

|

||||

|

||||

##### Stable

|

||||

The stable channel is intended to be a version of Xen Orchestra that is already **one month old** (and therefore will benefit from one month of community feedback and various fixes). This way, users more concerned with the stability of their appliance will have the option to stay on a slightly older (and tested) version of XO (still supported by our pro support).

|

||||

|

||||

##### Latest

|

||||

|

||||

The latest channel will include all the latest improvements available in Xen Orchestra. The version available in latest has already been QA'd by our team, but issues may still occur once it is deployed in the widely varying environments of our user base.

|

||||

|

||||

> To select the release channel of your choice, go to the XOA > Updates view.

|

||||

|

||||

|

||||

|

||||

#### Upgrade

|

||||

|

||||

If a new version is found, you'll have an upgrade button and its tooltip displayed:

|

||||

|

||||

72

docs/web-hooks.md

Normal file


@@ -0,0 +1,72 @@

|

||||

# Web hooks

|

||||

|

||||

⚠ This feature is experimental!

|

||||

|

||||

## Configuration

|

||||

|

||||

The plugin "web-hooks" needs to be installed and loaded for this feature to work.

|

||||

|

||||

You can trigger an HTTP POST request to a URL when a Xen Orchestra API method is called.

|

||||

|

||||

* Go to Settings > Plugins > Web hooks

|

||||

* Add new hooks

|

||||

* For each hook, configure:

|

||||

* Method: the XO API method that will trigger the HTTP request when called

|

||||

* Type:

|

||||

* pre: the request will be sent when the method is called

|

||||

* post: the request will be sent after the method action is completed

|

||||

* pre/post: both

|

||||

* URL: the full URL which the requests will be sent to

|

||||

* Save the plugin configuration

|

||||

|

||||

From now on, a request will be sent to the corresponding URLs when a configured method is called by an XO client.

|

||||

|

||||

## Request content

|

||||

|

||||

```

|

||||

POST / HTTP/1.1

|

||||

Content-Type: application/json

|

||||

```

|

||||

|

||||

The request's body is a JSON string representing an object with the following properties:

|

||||

|

||||

- `type`: `"pre"` or `"post"`

|

||||

- `callId`: unique ID for this call to help match a pre-call and a post-call

|

||||

- `userId`: unique internal ID of the user who performed the call

|

||||

- `userName`: login/e-mail address of the user who performed the call

|

||||

- `method`: name of the method that was called (e.g. `"vm.start"`)

|

||||

- `params`: call parameters (object)

|

||||

- `timestamp`: epoch timestamp of the beginning ("pre") or end ("post") of the call in ms

|

||||

- `duration`: duration of the call in ms ("post" hooks only)

|

||||

- `result`: call result on success ("post" hooks only)

|

||||

- `error`: call result on error ("post" hooks only)
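
Since `callId` is meant to help match a pre-call with its post-call, a receiver could pair the two payloads roughly as follows. This is only a minimal sketch: `pendingCalls` and `trackHook` are hypothetical names, and it assumes that `error` is absent (or `null`) on success.

```js
// Hypothetical helper (not part of the plugin): pairs "pre" and "post"
// payloads using the `callId` property described above.
const pendingCalls = new Map()

function trackHook(payload) {
  const { callId, type } = payload

  if (type === 'pre') {
    // Remember the pre-call until the matching post-call arrives.
    pendingCalls.set(callId, payload)
    return undefined
  }

  // type === 'post': look up the matching pre-call, if one was received.
  const pre = pendingCalls.get(callId)
  pendingCalls.delete(callId)

  return {
    method: payload.method,
    startedAt: pre !== undefined ? pre.timestamp : undefined,
    duration: payload.duration,
    // Assumption: `error` is only set when the call failed.
    succeeded: payload.error == null,
  }
}
```

A hook configured as "post" only never sends a pre-call, in which case `startedAt` stays `undefined` in this sketch.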

|

||||

|

||||

## Request handling

|

||||

|

||||

*Quick Node.js example of how you may want to handle the requests*

|

||||

|

||||

```js

|

||||

const http = require('http')

|

||||

const { exec } = require('child_process')

|

||||

|

||||

http

|

||||

.createServer((req, res) => {

|

||||

let body = ''

|

||||

req.on('data', chunk => {

|

||||

body += chunk

|

||||

})

|

||||

req.on('end', () => handleHook(body))

|

||||

res.end()

|

||||

})

|

||||

.listen(3000)

|

||||

|

||||

const handleHook = data => {

|

||||

const { method, params, type, result, error, timestamp } = JSON.parse(data)

|

||||

|

||||

// Log it

|

||||

console.log(`${new Date(timestamp).toISOString()} [${method}|${type}] ${JSON.stringify(params)} → ${JSON.stringify(result || error)}`)

|

||||

|

||||

// Run scripts

|

||||

exec(`./hook-scripts/${method}-${type}.sh`)

|

||||

}

|

||||

```
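
Because `method` and `type` come from the request body, you may prefer to check them against a known list before running anything. The following is a sketch under assumptions, not the plugin's behaviour: `ALLOWED_HOOKS`, `scriptFor` and `runHookScript` are hypothetical names, and the listed hooks are only examples.

```js
const { execFile } = require('child_process')
const path = require('path')

// Assumption: only these method/type pairs have a script in ./hook-scripts.
const ALLOWED_HOOKS = new Set(['vm.start-pre', 'vm.start-post'])

// Returns the script path for a known hook, or undefined otherwise.
function scriptFor(method, type) {
  const name = `${method}-${type}`
  return ALLOWED_HOOKS.has(name)
    ? path.join('hook-scripts', `${name}.sh`)
    : undefined
}

// Runs the script for a known hook; returns false if the hook is not listed.
function runHookScript(method, type) {
  const script = scriptFor(method, type)
  if (script === undefined) {
    return false
  }
  // execFile does not spawn a shell, so the name is never shell-interpreted.
  execFile(script, error => {
    if (error) {
      console.error(`hook script ${script} failed:`, error.message)
    }
  })
  return true
}
```

With this approach, an unexpected `method` value such as `../../some/path` is ignored instead of being interpolated into a command line.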

|

||||

54

docs/xoa.md


@@ -22,9 +22,9 @@ For use on huge infrastructure (more than 500+ VMs), feel free to increase the R

|

||||

|

||||

### The quickest way

|

||||

|

||||

The **fastest and most secure way** to install Xen Orchestra is to use our web deploy page. Go on https://xen-orchestra.com/#!/xoa and follow instructions.

|

||||

The **fastest and most secure way** to install Xen Orchestra is to use our web deploy page. Go to https://xen-orchestra.com/#!/xoa and follow the instructions.

|

||||

|

||||

> **Note:** no data will be sent to our servers, it's running only between your browser and your host!

|

||||

> **Note:** no data will be sent to our servers, the deployment only runs between your browser and your host!

|

||||

|

||||

|

||||

|

||||

@@ -41,12 +41,12 @@ bash -c "$(curl -s http://xoa.io/deploy)"

|

||||

Follow the instructions:

|

||||

|

||||

* Your IP configuration will be requested: it's set to **DHCP by default**, otherwise you can enter a fixed IP address (eg `192.168.0.10`)

|

||||

* If DHCP is selected, the script will continue automatically. Otherwise a netmask, gateway, and DNS should be provided.

|

||||

* If DHCP is selected, the script will continue automatically. Otherwise a netmask, gateway, and DNS server should be provided.

|

||||

* XOA will be deployed on your default storage repository. You can move it elsewhere anytime after.

|

||||

|

||||

### Via download the XVA

|

||||

### Via a manual XVA download

|

||||

|

||||

Download XOA from xen-orchestra.com. Once you've got the XVA file, you can import it with `xe vm-import filename=xoa_unified.xva` or via XenCenter.

|

||||

You can also download XOA from xen-orchestra.com in an XVA file. Once you've got the XVA file, you can import it with `xe vm-import filename=xoa_unified.xva` or via XenCenter.

|

||||

|

||||

After the VM is imported, you just need to start it with `xe vm-start vm="XOA"` or with XenCenter.

|

||||

|

||||

@@ -64,6 +64,35 @@ Once you have started the VM, you can access the web UI by putting the IP you co

|

||||

|

||||

**The first thing** you need to do with your XOA is register. [Read the documentation on the page dedicated to the updater/register interface](updater.md).

|

||||

|

||||

## Technical Support

|

||||

|

||||

In your appliance, you can access the support section in the XOA menu. In this section you can:

|

||||

|

||||

* launch an `xoa check` command

|

||||

|

||||

|

||||

|

||||

* Open a secure support tunnel so our team can remotely investigate

|

||||

|

||||

|

||||

|

||||

<a id="ssh-pro-support"></a>

|

||||

|

||||

If your web UI is not working, you can also open the secure support tunnel from the CLI. To open a private tunnel (we are the only ones with the private key), you can use the command `xoa support tunnel` as shown below:

|

||||

|

||||

```

|

||||

$ xoa support tunnel

|

||||

The support tunnel has been created.

|

||||

|

||||

Do not stop this command before the intervention is over!

|

||||

Give this id to the support: 40713

|

||||

```

|

||||

|

||||

Give us this number, and we'll be able to access your XOA in a secure manner. Then, close the tunnel with `Ctrl+C` after your issue has been solved by support.

|

||||

|

||||

> The tunnel utilizes the user `xoa-support`. If you want to deactivate this bundled user, you can run `chage -E 0 xoa-support`. To re-activate this account, you must run `chage -E 1 xoa-support`.

|

||||

|

||||

|

||||

### First console connection

|

||||

|

||||

If you connect via SSH or console, the default credentials are:

|

||||

@@ -156,21 +185,6 @@ You can access the VM console through XenCenter or using VNC through a SSH tunne

|

||||

|

||||

If you want to go back to DHCP, just run `xoa network dhcp`

|

||||

|

||||

### SSH Pro Support

|

||||

|

||||

By default, if you need support, there is a dedicated user named `xoa-support`. We are the only one with the private key. If you want our assistance on your XOA, you can open a private tunnel with the command `xoa support tunnel` like below:

|

||||

|

||||

```

|

||||

$ xoa support tunnel

|

||||

The support tunnel has been created.

|

||||

|

||||

Do not stop this command before the intervention is over!

|

||||

Give this id to the support: 40713

|

||||

```

|

||||

|

||||

Give us this number, we'll be able to access your XOA in a secure manner. Then, close the tunnel with `Ctrl+C` after your issue has been solved by support.

|

||||

|

||||

> If you want to deactivate this bundled user, you can type `chage -E 0 xoa-support`. To re-activate this account, you must use the `chage -E 1 xoa-support`.

|

||||

|

||||

### Firewall

|

||||

|

||||

|

||||

@@ -12,18 +12,18 @@

|

||||

"eslint-config-standard-jsx": "^8.1.0",

|

||||

"eslint-plugin-eslint-comments": "^3.1.1",

|

||||

"eslint-plugin-import": "^2.8.0",

|

||||

"eslint-plugin-node": "^9.0.1",

|

||||

"eslint-plugin-node": "^10.0.0",

|

||||

"eslint-plugin-promise": "^4.0.0",

|

||||

"eslint-plugin-react": "^7.6.1",

|

||||