Compare commits

200 Commits

xo-vmdk-to

...

xo-web-v5.

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

d505401446 | ||

|

|

fafc24aeae | ||

|

|

f78ef0d208 | ||

|

|

8384cc3652 | ||

|

|

60aa18a229 | ||

|

|

3d64b42a89 | ||

|

|

b301997d4b | ||

|

|

ab34743250 | ||

|

|

bc14a1d167 | ||

|

|

2886ec116f | ||

|

|

c2beb2a5fa | ||

|

|

d6ac10f527 | ||

|

|

9dcd8a707a | ||

|

|

e1e97ef158 | ||

|

|

5d6b37f81a | ||

|

|

e1da08ba38 | ||

|

|

1dfb50fefd | ||

|

|

5c06ebc9c8 | ||

|

|

52a9270fb0 | ||

|

|

82247d7422 | ||

|

|

b34688043f | ||

|

|

ce4bcbd19d | ||

|

|

cde9a02c32 | ||

|

|

fe1da4ea12 | ||

|

|

a73306817b | ||

|

|

54e683d3d4 | ||

|

|

f49910ca82 | ||

|

|

4052f7f736 | ||

|

|

b47e097983 | ||

|

|

e44dbfb2a4 | ||

|

|

7d69dd9400 | ||

|

|

e6aae8fcfa | ||

|

|

da800b3391 | ||

|

|

3a574bcecc | ||

|

|

1bb0e234e7 | ||

|

|

b7e14ebf2a | ||

|

|

2af1207702 | ||

|

|

ecfed30e6e | ||

|

|

d06c3e3dd8 | ||

|

|

16b3fbeb16 | ||

|

|

0938804947 | ||

|

|

851bcf9816 | ||

|

|

9f6fc785bc | ||

|

|

56636bf5d4 | ||

|

|

3899a65167 | ||

|

|

628e53c1c3 | ||

|

|

9fa424dd8d | ||

|

|

3e6f2eecfa | ||

|

|

cc655c8ba8 | ||

|

|

78aa0474ee | ||

|

|

9caefa2f49 | ||

|

|

478726fa3b | ||

|

|

f64917ec52 | ||

|

|

2bc25f91c4 | ||

|

|

623d7ffe2f | ||

|

|

07510b5099 | ||

|

|

9f21f9a7bc | ||

|

|

93da70709e | ||

|

|

00436e744a | ||

|

|

1e642fc512 | ||

|

|

6baef2450c | ||

|

|

600f34f85a | ||

|

|

6c0c6bc5c4 | ||

|

|

fcd62ed3cd | ||

|

|

785f2e3a6d | ||

|

|

c2925f7c1e | ||

|

|

60814d8b58 | ||

|

|

2dec448f2c | ||

|

|

b71f4f6800 | ||

|

|

558083a916 | ||

|

|

d507ed9dff | ||

|

|

7ed0242662 | ||

|

|

d7b3d989d7 | ||

|

|

707b2f77f0 | ||

|

|

5ddbb76979 | ||

|

|

97b0fe62d4 | ||

|

|

8ac9b2cdc7 | ||

|

|

bc4c1a13e6 | ||

|

|

d3ec303ade | ||

|

|

6cfc2a1ba6 | ||

|

|

e15cadc863 | ||

|

|

2f9284c263 | ||

|

|

2465852fd6 | ||

|

|

a9f48a0d50 | ||

|

|

4ed0035c67 | ||

|

|

b66f2dfb80 | ||

|

|

3cb155b129 | ||

|

|

df7efc04e2 | ||

|

|

a21a8457a4 | ||

|

|

020955f535 | ||

|

|

51f23a5f03 | ||

|

|

d024319441 | ||

|

|

f8f35938c0 | ||

|

|

2573ace368 | ||

|

|

6bf7269814 | ||

|

|

6695c7bf5e | ||

|

|

44a83fd817 | ||

|

|

08ddfe0649 | ||

|

|

5ba170bf1f | ||

|

|

8150d3110c | ||

|

|

312b33ae85 | ||

|

|

008eb995ed | ||

|

|

6d8848043c | ||

|

|

cf572c0cc5 | ||

|

|

18cfa7dd29 | ||

|

|

72cac2bbd6 | ||

|

|

48ffa28e0b | ||

|

|

2e6baeb95a | ||

|

|

3b5650dc1e | ||

|

|

3279728e4b | ||

|

|

fe0dcbacc5 | ||

|

|

7c5d90fe40 | ||

|

|

944dad6e36 | ||

|

|

6713d3ec66 | ||

|

|

6adadb2359 | ||

|

|

b01096876c | ||

|

|

60243d8517 | ||

|

|

94d0809380 | ||

|

|

e935dd9bad | ||

|

|

30aa2b83d0 | ||

|

|

fc42c58079 | ||

|

|

ee9443cf16 | ||

|

|

f91d4a07eb | ||

|

|

c5a5ef6c93 | ||

|

|

7559fbdab7 | ||

|

|

7925ee8fee | ||

|

|

fea5117ed8 | ||

|

|

468a2c5bf3 | ||

|

|

c728eeaffa | ||

|

|

6aa8e0d4ce | ||

|

|

76ae54ff05 | ||

|

|

344e9e06d0 | ||

|

|

d866bccf3b | ||

|

|

3931c4cf4c | ||

|

|

420f1c77a1 | ||

|

|

59106aa29e | ||

|

|

4216a5808a | ||

|

|

12a7000e36 | ||

|

|

685355c6fb | ||

|

|

66f685165e | ||

|

|

8e8b1c009a | ||

|

|

705d069246 | ||

|

|

58e8d75935 | ||

|

|

5eb1454e67 | ||

|

|

04b31db41b | ||

|

|

29b4cf414a | ||

|

|

7a2a88b7ad | ||

|

|

dc34f3478d | ||

|

|

58175a4f5e | ||

|

|

c4587c11bd | ||

|

|

5b1a5f4fe7 | ||

|

|

ee2db918f3 | ||

|

|

0695bafb90 | ||

|

|

8e116063bf | ||

|

|

3f3b372f89 | ||

|

|

24cc1e8e29 | ||

|

|

e988ad4df9 | ||

|

|

5c12d4a546 | ||

|

|

d90b85204d | ||

|

|

6332355031 | ||

|

|

4ce702dfdf | ||

|

|

362a381dfb | ||

|

|

0eec4ee2f7 | ||

|

|

b92390087b | ||

|

|

bce4d5d96f | ||

|

|

27262ff3e8 | ||

|

|

444b6642f1 | ||

|

|

67d11020bb | ||

|

|

7603974370 | ||

|

|

6cb5639243 | ||

|

|

0c5a37d8a3 | ||

|

|

78cc7fe664 | ||

|

|

2d51bef390 | ||

|

|

bc68fff079 | ||

|

|

0a63acac73 | ||

|

|

e484b073e1 | ||

|

|

b2813d7cc0 | ||

|

|

29b941868d | ||

|

|

37af47ecff | ||

|

|

8eb28d40da | ||

|

|

383dd7b38e | ||

|

|

b13b3fe9f6 | ||

|

|

04a5f55b16 | ||

|

|

4ab1de918e | ||

|

|

44fc5699fd | ||

|

|

dd6c3ff434 | ||

|

|

d747b937ee | ||

|

|

9aa63d0354 | ||

|

|

36220ac1c5 | ||

|

|

d8eb5d4934 | ||

|

|

b580ea98a7 | ||

|

|

0ad68c2280 | ||

|

|

b16f1899ac | ||

|

|

7e740a429a | ||

|

|

61b1bd2533 | ||

|

|

d6ddba8e56 | ||

|

|

d10c7f3898 | ||

|

|

2b2c2c42f1 | ||

|

|

efc65a0669 | ||

|

|

d8e0727d4d |

@@ -3,63 +3,12 @@

|

||||

# Julien Fontanet's configuration

|

||||

# https://gist.github.com/julien-f/8096213

|

||||

|

||||

# Top-most EditorConfig file.

|

||||

root = true

|

||||

|

||||

# Common config.

|

||||

[*]

|

||||

charset = utf-8

|

||||

end_of_line = lf

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

insert_final_newline = true

|

||||

trim_trailing_whitespace = true

|

||||

|

||||

# CoffeeScript

|

||||

#

|

||||

# https://github.com/polarmobile/coffeescript-style-guide/blob/master/README.md

|

||||

[*.{,lit}coffee]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

# Markdown

|

||||

[*.{md,mdwn,mdown,markdown}]

|

||||

indent_size = 4

|

||||

indent_style = space

|

||||

|

||||

# Package.json

|

||||

#

|

||||

# This indentation style is the one used by npm.

|

||||

[package.json]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

# Pug (Jade)

|

||||

[*.{jade,pug}]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

# JavaScript

|

||||

#

|

||||

# Two spaces seems to be the standard most common style, at least in

|

||||

# Node.js (http://nodeguide.com/style.html#tabs-vs-spaces).

|

||||

[*.{js,jsx,ts,tsx}]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

# Less

|

||||

[*.less]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

# Sass

|

||||

#

|

||||

# Style used for http://libsass.com

|

||||

[*.s[ac]ss]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

# YAML

|

||||

#

|

||||

# Only spaces are allowed.

|

||||

[*.yaml]

|

||||

indent_size = 2

|

||||

indent_style = space

|

||||

|

||||

22

.eslintrc.js

@@ -1,5 +1,11 @@

|

||||

module.exports = {

|

||||

extends: ['standard', 'standard-jsx', 'prettier'],

|

||||

extends: [

|

||||

'standard',

|

||||

'standard-jsx',

|

||||

'prettier',

|

||||

'prettier/standard',

|

||||

'prettier/react',

|

||||

],

|

||||

globals: {

|

||||

__DEV__: true,

|

||||

$Dict: true,

|

||||

@@ -10,6 +16,16 @@ module.exports = {

|

||||

$PropertyType: true,

|

||||

$Shape: true,

|

||||

},

|

||||

|

||||

overrides: [

|

||||

{

|

||||

files: ['packages/*cli*/**/*.js', '*-cli.js'],

|

||||

rules: {

|

||||

'no-console': 'off',

|

||||

},

|

||||

},

|

||||

],

|

||||

|

||||

parser: 'babel-eslint',

|

||||

parserOptions: {

|

||||

ecmaFeatures: {

|

||||

@@ -17,12 +33,10 @@ module.exports = {

|

||||

},

|

||||

},

|

||||

rules: {

|

||||

'no-console': ['error', { allow: ['warn', 'error'] }],

|

||||

'no-var': 'error',

|

||||

'node/no-extraneous-import': 'error',

|

||||

'node/no-extraneous-require': 'error',

|

||||

'prefer-const': 'error',

|

||||

|

||||

// See https://github.com/prettier/eslint-config-prettier/issues/65

|

||||

'react/jsx-indent': 'off',

|

||||

},

|

||||

}

|

||||

|

||||

@@ -7,6 +7,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/async-map",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/async-map",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

|

||||

@@ -46,6 +46,12 @@ const getConfig = (key, ...args) => {

|

||||

: config

|

||||

}

|

||||

|

||||

// some plugins must be used in a specific order

|

||||

const pluginsOrder = [

|

||||

'@babel/plugin-proposal-decorators',

|

||||

'@babel/plugin-proposal-class-properties',

|

||||

]

|

||||

|

||||

module.exports = function(pkg, plugins, presets) {

|

||||

plugins === undefined && (plugins = {})

|

||||

presets === undefined && (presets = {})

|

||||

@@ -61,7 +67,13 @@ module.exports = function(pkg, plugins, presets) {

|

||||

return {

|

||||

comments: !__PROD__,

|

||||

ignore: __TEST__ ? undefined : [/\.spec\.js$/],

|

||||

plugins: Object.keys(plugins).map(plugin => [plugin, plugins[plugin]]),

|

||||

plugins: Object.keys(plugins)

|

||||

.map(plugin => [plugin, plugins[plugin]])

|

||||

.sort(([a], [b]) => {

|

||||

const oA = pluginsOrder.indexOf(a)

|

||||

const oB = pluginsOrder.indexOf(b)

|

||||

return oA !== -1 && oB !== -1 ? oA - oB : a < b ? -1 : 1

|

||||

}),

|

||||

presets: Object.keys(presets).map(preset => [preset, presets[preset]]),

|

||||

}

|

||||

}
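The comparator added in this hunk can be exercised on its own. The sketch below copies `pluginsOrder` and the `.sort()` callback from the diff; the `plugins` object, its option values and the `babel-plugin-lodash` entry are illustrative inputs, not the package's actual configuration.

```javascript
// Order-sensitive plugins, as listed in the diff.
const pluginsOrder = [
  '@babel/plugin-proposal-decorators',
  '@babel/plugin-proposal-class-properties',
]

// Same comparator as the diff: explicit order when both names are listed,
// plain alphabetical order otherwise.
const comparePlugins = ([a], [b]) => {
  const oA = pluginsOrder.indexOf(a)
  const oB = pluginsOrder.indexOf(b)
  return oA !== -1 && oB !== -1 ? oA - oB : a < b ? -1 : 1
}

// Hypothetical input: keys are plugin names, values are their options.
const plugins = {
  'babel-plugin-lodash': {},
  '@babel/plugin-proposal-class-properties': { loose: true },
  '@babel/plugin-proposal-decorators': { legacy: true },
}

const sortedPlugins = Object.keys(plugins)
  .map(plugin => [plugin, plugins[plugin]])
  .sort(comparePlugins)

// decorators first, then class-properties, then babel-plugin-lodash
console.log(sortedPlugins.map(([name]) => name))
```

Alphabetical order alone would put `class-properties` before `decorators`; the explicit list is what reverses the pair.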

|

||||

|

||||

@@ -5,6 +5,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/babel-config",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/babel-config",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

}

|

||||

|

||||

@@ -82,35 +82,26 @@ ${cliName} v${pkg.version}

|

||||

)

|

||||

|

||||

await Promise.all([

|

||||

srcXapi.setFieldEntries(srcSnapshot, 'other_config', metadata),

|

||||

srcXapi.setFieldEntries(srcSnapshot, 'other_config', {

|

||||

'xo:backup:exported': 'true',

|

||||

}),

|

||||

tgtXapi.setField(

|

||||

tgtVm,

|

||||

'name_label',

|

||||

`${srcVm.name_label} (${srcSnapshot.snapshot_time})`

|

||||

),

|

||||

tgtXapi.setFieldEntries(tgtVm, 'other_config', metadata),

|

||||

tgtXapi.setFieldEntries(tgtVm, 'other_config', {

|

||||

srcSnapshot.update_other_config(metadata),

|

||||

srcSnapshot.update_other_config('xo:backup:exported', 'true'),

|

||||

tgtVm.set_name_label(`${srcVm.name_label} (${srcSnapshot.snapshot_time})`),

|

||||

tgtVm.update_other_config(metadata),

|

||||

tgtVm.update_other_config({

|

||||

'xo:backup:sr': tgtSr.uuid,

|

||||

'xo:copy_of': srcSnapshotUuid,

|

||||

}),

|

||||

tgtXapi.setFieldEntries(tgtVm, 'blocked_operations', {

|

||||

start:

|

||||

'Start operation for this vm is blocked, clone it if you want to use it.',

|

||||

}),

|

||||

tgtVm.update_blocked_operations(

|

||||

'start',

|

||||

'Start operation for this vm is blocked, clone it if you want to use it.'

|

||||

),

|

||||

Promise.all(

|

||||

userDevices.map(userDevice => {

|

||||

const srcDisk = srcDisks[userDevice]

|

||||

const tgtDisk = tgtDisks[userDevice]

|

||||

|

||||

return tgtXapi.setFieldEntry(

|

||||

tgtDisk,

|

||||

'other_config',

|

||||

'xo:copy_of',

|

||||

srcDisk.uuid

|

||||

)

|

||||

return tgtDisk.update_other_config({

|

||||

'xo:copy_of': srcDisk.uuid,

|

||||

})

|

||||

})

|

||||

),

|

||||

])
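This hunk replaces `xapi.setFieldEntries(record, field, entries)` calls with methods called on the records themselves. The sketch below only mirrors the two call shapes visible in the diff, `update_other_config(key, value)` and `update_other_config(entries)`. `FakeRecord` and `tagCopy` are hypothetical stand-ins, and the merge semantics shown are an assumption, not the behaviour of `xen-api`.

```javascript
// Stand-in for a record object; NOT the xen-api implementation.
class FakeRecord {
  constructor() {
    this.other_config = {}
  }

  // Accepts either (key, value) or an object of entries, the two call
  // shapes that appear in the diff. Merging into the map is an assumption.
  async update_other_config(keyOrEntries, value) {
    const entries =
      typeof keyOrEntries === 'string'
        ? { [keyOrEntries]: value }
        : keyOrEntries
    Object.assign(this.other_config, entries)
  }
}

// Hypothetical helper: tags a copied VM the way the diff tags `tgtVm`.
async function tagCopy(tgtVm, srcSnapshotUuid, srUuid) {
  await Promise.all([
    tgtVm.update_other_config('xo:backup:exported', 'true'),
    tgtVm.update_other_config({
      'xo:backup:sr': srUuid,
      'xo:copy_of': srcSnapshotUuid,
    }),
  ])
  return tgtVm.other_config
}

const tagged = tagCopy(new FakeRecord(), 'snapshot-uuid', 'sr-uuid')
tagged.then(config => console.log(config))
```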

|

||||

|

||||

@@ -4,6 +4,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/cr-seed-cli",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/cr-seed-cli",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

@@ -15,6 +16,6 @@

|

||||

},

|

||||

"dependencies": {

|

||||

"golike-defer": "^0.4.1",

|

||||

"xen-api": "^0.24.1"

|

||||

"xen-api": "^0.24.6"

|

||||

}

|

||||

}

|

||||

|

||||

@@ -17,6 +17,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/cron",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/cron",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

|

||||

@@ -7,6 +7,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/defined",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/defined",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

|

||||

@@ -7,6 +7,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/emit-async",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/emit-async",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

|

||||

@@ -1,12 +1,13 @@

|

||||

{

|

||||

"name": "@xen-orchestra/fs",

|

||||

"version": "0.6.0",

|

||||

"version": "0.8.0",

|

||||

"license": "AGPL-3.0",

|

||||

"description": "The File System for Xen Orchestra backups.",

|

||||

"keywords": [],

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/fs",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/fs",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

@@ -23,11 +24,12 @@

|

||||

"@marsaud/smb2": "^0.13.0",

|

||||

"@sindresorhus/df": "^2.1.0",

|

||||

"@xen-orchestra/async-map": "^0.0.0",

|

||||

"decorator-synchronized": "^0.5.0",

|

||||

"execa": "^1.0.0",

|

||||

"fs-extra": "^7.0.0",

|

||||

"get-stream": "^4.0.0",

|

||||

"lodash": "^4.17.4",

|

||||

"promise-toolbox": "^0.11.0",

|

||||

"promise-toolbox": "^0.12.1",

|

||||

"readable-stream": "^3.0.6",

|

||||

"through2": "^3.0.0",

|

||||

"tmp": "^0.0.33",

|

||||

@@ -43,7 +45,7 @@

|

||||

"async-iterator-to-stream": "^1.1.0",

|

||||

"babel-plugin-lodash": "^3.3.2",

|

||||

"cross-env": "^5.1.3",

|

||||

"dotenv": "^6.1.0",

|

||||

"dotenv": "^7.0.0",

|

||||

"index-modules": "^0.3.0",

|

||||

"rimraf": "^2.6.2"

|

||||

},

|

||||

|

||||

@@ -1,5 +1,6 @@

|

||||

import execa from 'execa'

|

||||

import fs from 'fs-extra'

|

||||

import { ignoreErrors } from 'promise-toolbox'

|

||||

import { join } from 'path'

|

||||

import { tmpdir } from 'os'

|

||||

|

||||

@@ -21,7 +22,13 @@ export default class MountHandler extends LocalHandler {

|

||||

super(remote, opts)

|

||||

|

||||

this._execa = useSudo ? sudoExeca : execa

|

||||

this._params = params

|

||||

this._keeper = undefined

|

||||

this._params = {

|

||||

...params,

|

||||

options: [params.options, remote.options]

|

||||

.filter(_ => _ !== undefined)

|

||||

.join(','),

|

||||

}

|

||||

this._realPath = join(

|

||||

mountsDir,

|

||||

remote.id ||
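The new `options` expression in the constructor merges the handler's default mount options with the ones configured on the remote. A minimal sketch of that expression follows; the function name `mergeMountOptions` and the sample option strings are illustrative only.

```javascript
// Join the handler's default mount options with the remote's own options,
// skipping whichever side is undefined (same expression as in the diff).
const mergeMountOptions = (handlerOptions, remoteOptions) =>
  [handlerOptions, remoteOptions].filter(_ => _ !== undefined).join(',')

console.log(mergeMountOptions('vers=3', 'nolock,soft')) // vers=3,nolock,soft
console.log(mergeMountOptions('vers=3', undefined)) // vers=3
```

When both sides are undefined the result is an empty string, so the caller still receives a string to pass after `-o`.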

|

||||

@@ -32,19 +39,20 @@ export default class MountHandler extends LocalHandler {

|

||||

}

|

||||

|

||||

async _forget() {

|

||||

await this._execa('umount', ['--force', this._getRealPath()], {

|

||||

env: {

|

||||

LANG: 'C',

|

||||

},

|

||||

}).catch(error => {

|

||||

if (

|

||||

error == null ||

|

||||

typeof error.stderr !== 'string' ||

|

||||

!error.stderr.includes('not mounted')

|

||||

) {

|

||||

throw error

|

||||

}

|

||||

})

|

||||

const keeper = this._keeper

|

||||

if (keeper === undefined) {

|

||||

return

|

||||

}

|

||||

this._keeper = undefined

|

||||

await fs.close(keeper)

|

||||

|

||||

await ignoreErrors.call(

|

||||

this._execa('umount', [this._getRealPath()], {

|

||||

env: {

|

||||

LANG: 'C',

|

||||

},

|

||||

})

|

||||

)

|

||||

}

|

||||

|

||||

_getRealPath() {

|

||||

@@ -52,26 +60,49 @@ export default class MountHandler extends LocalHandler {

|

||||

}

|

||||

|

||||

async _sync() {

|

||||

await fs.ensureDir(this._getRealPath())

|

||||

const { type, device, options, env } = this._params

|

||||

return this._execa(

|

||||

'mount',

|

||||

['-t', type, device, this._getRealPath(), '-o', options],

|

||||

{

|

||||

env: {

|

||||

LANG: 'C',

|

||||

...env,

|

||||

},

|

||||

// in case of multiple `sync`s, ensure we properly close previous keeper

|

||||

{

|

||||

const keeper = this._keeper

|

||||

if (keeper !== undefined) {

|

||||

this._keeper = undefined

|

||||

ignoreErrors.call(fs.close(keeper))

|

||||

}

|

||||

).catch(error => {

|

||||

let stderr

|

||||

if (

|

||||

error == null ||

|

||||

typeof (stderr = error.stderr) !== 'string' ||

|

||||

!(stderr.includes('already mounted') || stderr.includes('busy'))

|

||||

) {

|

||||

}

|

||||

|

||||

const realPath = this._getRealPath()

|

||||

|

||||

await fs.ensureDir(realPath)

|

||||

|

||||

try {

|

||||

const { type, device, options, env } = this._params

|

||||

await this._execa(

|

||||

'mount',

|

||||

['-t', type, device, realPath, '-o', options],

|

||||

{

|

||||

env: {

|

||||

LANG: 'C',

|

||||

...env,

|

||||

},

|

||||

}

|

||||

)

|

||||

} catch (error) {

|

||||

try {

|

||||

// the failure may mean it's already mounted, use `findmnt` to check

|

||||

// that's the case

|

||||

await this._execa('findmnt', [realPath], {

|

||||

stdio: 'ignore',

|

||||

})

|

||||

} catch (_) {

|

||||

throw error

|

||||

}

|

||||

})

|

||||

}

|

||||

|

||||

// keep an open file on the mount to prevent it from being unmounted if used

|

||||

// by another handler/process

|

||||

const keeperPath = `${realPath}/.keeper_${Math.random()

|

||||

.toString(36)

|

||||

.slice(2)}`

|

||||

this._keeper = await fs.open(keeperPath, 'w')

|

||||

ignoreErrors.call(fs.unlink(keeperPath))

|

||||

}
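The new `_sync()` no longer inspects `stderr` for "already mounted"; it tries `mount` and, on failure, asks `findmnt` whether the path is mounted anyway. The control flow can be sketched with an injected command runner. `run`, `mountOrConfirm` and the two fake runners are hypothetical names; the diff uses `this._execa`, and `findmnt` is assumed to fail when nothing is mounted at the path.

```javascript
// `run(command, args)` is a hypothetical injected runner that returns a
// promise and rejects when the command fails.
async function mountOrConfirm(run, realPath, mountArgs) {
  try {
    await run('mount', mountArgs)
  } catch (error) {
    try {
      // the failure may mean the path is already mounted: check with findmnt
      await run('findmnt', [realPath])
    } catch (_) {
      // not mounted either: surface the original mount error
      throw error
    }
  }
}

// Fake runner: mount fails, but findmnt reports an existing mount.
const alreadyMounted = async command => {
  if (command === 'mount') throw new Error('mount failed')
}

// Fake runner: every command fails, so nothing is mounted.
const notMounted = async command => {
  throw new Error(command + ' failed')
}

mountOrConfirm(alreadyMounted, '/tmp/demo-mount', []).then(() =>
  console.log('treated as mounted')
)
mountOrConfirm(notMounted, '/tmp/demo-mount', []).catch(error =>
  console.log('rejected with:', error.message)
)
```

Rethrowing the original `mount` error, rather than the `findmnt` one, keeps the message that is most useful to the person diagnosing the remote.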

|

||||

}
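The keeper trick relies on POSIX semantics: a file that is unlinked while a descriptor is still open stays alive until that descriptor is closed, and an open descriptor is expected to make a plain `umount` of that filesystem fail as busy. The sketch below demonstrates the first half with Node's synchronous `fs` API in a scratch directory; the directory stands in for the mount point and is an assumption of this example.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Scratch directory standing in for the mount point.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'keeper-demo-'))
const keeperPath = path.join(
  dir,
  `.keeper_${Math.random().toString(36).slice(2)}`
)

// Open the file, then remove its directory entry right away.
const keeper = fs.openSync(keeperPath, 'w')
fs.unlinkSync(keeperPath)

// On POSIX systems the name is gone but the descriptor remains usable.
const stillOnDisk = fs.existsSync(keeperPath)
const bytesWritten = fs.writeSync(keeper, 'x')
console.log({ stillOnDisk, bytesWritten })

// Closing the descriptor releases the last reference to the file.
fs.closeSync(keeper)
fs.rmdirSync(dir)
```

Unlinking immediately means no stray `.keeper_*` file is left behind if the process dies before `_forget()` runs.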

|

||||

|

||||

@@ -5,6 +5,7 @@ import getStream from 'get-stream'

|

||||

|

||||

import asyncMap from '@xen-orchestra/async-map'

|

||||

import path from 'path'

|

||||

import synchronized from 'decorator-synchronized'

|

||||

import { fromCallback, fromEvent, ignoreErrors, timeout } from 'promise-toolbox'

|

||||

import { parse } from 'xo-remote-parser'

|

||||

import { randomBytes } from 'crypto'

|

||||

@@ -24,6 +25,10 @@ type RemoteInfo = { used?: number, size?: number }

|

||||

type File = FileDescriptor | string

|

||||

|

||||

const checksumFile = file => file + '.checksum'

|

||||

const computeRate = (hrtime: number[], size: number) => {

|

||||

const seconds = hrtime[0] + hrtime[1] / 1e9

|

||||

return size / seconds

|

||||

}

|

||||

|

||||

const DEFAULT_TIMEOUT = 6e5 // 10 min
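`computeRate` turns a `process.hrtime()` duration, a `[seconds, nanoseconds]` pair, into a bytes-per-second figure. A worked example with a fixed duration, with the Flow annotations dropped so that it runs as plain JavaScript; the 2.5 s duration is made up for the arithmetic, while `SIZE` matches the constant used by `test()` further down in this diff.

```javascript
// Convert a `process.hrtime()` duration ([seconds, nanoseconds]) and a byte
// count into bytes per second.
const computeRate = (hrtime, size) => {
  const seconds = hrtime[0] + hrtime[1] / 1e9
  return size / seconds
}

const SIZE = 1024 * 1024 * 10 // 10 MiB

// 2 s + 500,000,000 ns = 2.5 s, so 10 MiB / 2.5 s = 4 MiB/s
const rate = computeRate([2, 5e8], SIZE)
console.log(rate) // 4194304
```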

|

||||

|

||||

@@ -34,18 +39,18 @@ const ignoreEnoent = error => {

|

||||

}

|

||||

|

||||

class PrefixWrapper {

|

||||

constructor(remote, prefix) {

|

||||

constructor(handler, prefix) {

|

||||

this._prefix = prefix

|

||||

this._remote = remote

|

||||

this._handler = handler

|

||||

}

|

||||

|

||||

get type() {

|

||||

return this._remote.type

|

||||

return this._handler.type

|

||||

}

|

||||

|

||||

// necessary to remove the prefix from the path with `prependDir` option

|

||||

async list(dir, opts) {

|

||||

const entries = await this._remote.list(this._resolve(dir), opts)

|

||||

const entries = await this._handler.list(this._resolve(dir), opts)

|

||||

if (opts != null && opts.prependDir) {

|

||||

const n = this._prefix.length

|

||||

entries.forEach((entry, i, entries) => {

|

||||

@@ -56,7 +61,7 @@ class PrefixWrapper {

|

||||

}

|

||||

|

||||

rename(oldPath, newPath) {

|

||||

return this._remote.rename(this._resolve(oldPath), this._resolve(newPath))

|

||||

return this._handler.rename(this._resolve(oldPath), this._resolve(newPath))

|

||||

}

|

||||

|

||||

_resolve(path) {

|

||||

@@ -216,6 +221,7 @@ export default class RemoteHandlerAbstract {

|

||||

// FIXME: Some handlers are implemented based on system-wide mechanisms (such
// as mount), forgetting them might break other processes using the same
// remote.

|

||||

@synchronized()

|

||||

async forget(): Promise<void> {

|

||||

await this._forget()

|

||||

}
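`forget()` and `sync()` are now decorated with `@synchronized()`. Assuming the decorator queues overlapping calls to the decorated method, the effect can be illustrated with a hand-rolled serializer. `serialize`, `delay` and the `events` log are names invented for this sketch, which is not the implementation used by `decorator-synchronized`.

```javascript
// Hand-rolled serializer: each call waits for the previous one to settle.
const serialize = fn => {
  let last = Promise.resolve()
  return function(...args) {
    const run = () => fn.apply(this, args)
    const result = last.then(run, run)
    // keep the queue usable even if this call rejects
    last = result.catch(() => {})
    return result
  }
}

const delay = ms => new Promise(resolve => setTimeout(resolve, ms))

const events = []
const sync = serialize(async () => {
  events.push('sync:start')
  await delay(20)
  events.push('sync:end')
})

// Two overlapping calls run one after the other instead of interleaving.
const demo = Promise.all([sync(), sync()]).then(() => events)
demo.then(log => console.log(log.join(' ')))
```

Without the serializer the log would read `sync:start sync:start sync:end sync:end`, which is the kind of overlap that can leave two `mount` invocations racing.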

|

||||

@@ -354,23 +360,33 @@ export default class RemoteHandlerAbstract {

|

||||

// metadata

|

||||

//

|

||||

// This method MUST ALWAYS be called before using the handler.

|

||||

@synchronized()

|

||||

async sync(): Promise<void> {

|

||||

await this._sync()

|

||||

}

|

||||

|

||||

async test(): Promise<Object> {

|

||||

const SIZE = 1024 * 1024 * 10

|

||||

const testFileName = normalizePath(`${Date.now()}.test`)

|

||||

const data = await fromCallback(cb => randomBytes(1024 * 1024, cb))

|

||||

const data = await fromCallback(cb => randomBytes(SIZE, cb))

|

||||

let step = 'write'

|

||||

try {

|

||||

const writeStart = process.hrtime()

|

||||

await this._outputFile(testFileName, data, { flags: 'wx' })

|

||||

const writeDuration = process.hrtime(writeStart)

|

||||

|

||||

step = 'read'

|

||||

const readStart = process.hrtime()

|

||||

const read = await this._readFile(testFileName, { flags: 'r' })

|

||||

const readDuration = process.hrtime(readStart)

|

||||

|

||||

if (!data.equals(read)) {

|

||||

throw new Error('output and input did not match')

|

||||

}

|

||||

return {

|

||||

success: true,

|

||||

writeRate: computeRate(writeDuration, SIZE),

|

||||

readRate: computeRate(readDuration, SIZE),

|

||||

}

|

||||

} catch (error) {

|

||||

return {

|

||||

@@ -565,7 +581,7 @@ function createPrefixWrapperMethods() {

|

||||

if (arguments.length !== 0 && typeof (path = arguments[0]) === 'string') {

|

||||

arguments[0] = this._resolve(path)

|

||||

}

|

||||

return value.apply(this._remote, arguments)

|

||||

return value.apply(this._handler, arguments)

|

||||

}

|

||||

|

||||

defineProperty(pPw, name, descriptor)
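After the rename from `_remote` to `_handler`, `PrefixWrapper` reads as a thin proxy: it rewrites a leading string path and forwards the call to the wrapped handler. A self-contained sketch of that idea follows. `MemoryHandler` is a hypothetical in-memory handler, and `_resolve` is assumed to be plain concatenation because its real body is not shown in this diff.

```javascript
// In-memory handler used only for this demo (hypothetical).
class MemoryHandler {
  constructor() {
    this.files = new Map()
  }
  get type() {
    return 'memory'
  }
  async outputFile(path, data) {
    this.files.set(path, data)
  }
  async readFile(path) {
    return this.files.get(path)
  }
}

class PrefixWrapper {
  constructor(handler, prefix) {
    this._prefix = prefix
    this._handler = handler
  }
  get type() {
    return this._handler.type
  }
  // Assumption: plain concatenation; the real `_resolve` is not in the diff.
  _resolve(path) {
    return this._prefix + path
  }
}

// Forward every handler method, rewriting a leading string argument.
const proto = MemoryHandler.prototype
for (const name of Object.getOwnPropertyNames(proto)) {
  const descriptor = Object.getOwnPropertyDescriptor(proto, name)
  if (name === 'constructor' || typeof descriptor.value !== 'function') {
    continue
  }
  Object.defineProperty(PrefixWrapper.prototype, name, {
    configurable: true,
    writable: true,
    value(...args) {
      if (args.length !== 0 && typeof args[0] === 'string') {
        args[0] = this._resolve(args[0])
      }
      return descriptor.value.apply(this._handler, args)
    },
  })
}

const handler = new MemoryHandler()
const wrapped = new PrefixWrapper(handler, '/xo-vm-backups')
const demo = wrapped
  .outputFile('/vm.json', '{}')
  .then(() => wrapped.readFile('/vm.json'))
  .then(content => ({ content, storedAt: [...handler.files.keys()] }))
demo.then(result => console.log(result))
```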

|

||||

|

||||

@@ -16,6 +16,8 @@ class TestHandler extends AbstractHandler {

|

||||

}

|

||||

}

|

||||

|

||||

jest.useFakeTimers()

|

||||

|

||||

describe('closeFile()', () => {

|

||||

it(`throws in case of timeout`, async () => {

|

||||

const testHandler = new TestHandler({

|

||||

|

||||

@@ -290,9 +290,11 @@ handlers.forEach(url => {

|

||||

|

||||

describe('#test()', () => {

|

||||

it('tests the remote appears to be working', async () => {

|

||||

expect(await handler.test()).toEqual({

|

||||

success: true,

|

||||

})

|

||||

const answer = await handler.test()

|

||||

|

||||

expect(answer.success).toBe(true)

|

||||

expect(typeof answer.writeRate).toBe('number')

|

||||

expect(typeof answer.readRate).toBe('number')

|

||||

})

|

||||

})

|

||||

|

||||

|

||||

@@ -6,12 +6,11 @@ const DEFAULT_NFS_OPTIONS = 'vers=3'

|

||||

|

||||

export default class NfsHandler extends MountHandler {

|

||||

constructor(remote, opts) {

|

||||

const { host, port, path, options } = parse(remote.url)

|

||||

const { host, port, path } = parse(remote.url)

|

||||

super(remote, opts, {

|

||||

type: 'nfs',

|

||||

device: `${host}${port !== undefined ? ':' + port : ''}:${path}`,

|

||||

options:

|

||||

DEFAULT_NFS_OPTIONS + (options !== undefined ? `,${options}` : ''),

|

||||

options: DEFAULT_NFS_OPTIONS,

|

||||

})

|

||||

}
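With option merging moved into `MountHandler`, what remains specific to `NfsHandler` is the `device` string. The sketch below isolates the template literal from the hunk to show what it produces with and without a port; the host name and export path are made-up sample values.

```javascript
// Build the `device` string exactly as the template literal in the hunk does.
const nfsDevice = ({ host, port, path }) =>
  `${host}${port !== undefined ? ':' + port : ''}:${path}`

console.log(nfsDevice({ host: 'nas.example.org', path: '/exports/xo' }))
// nas.example.org:/exports/xo

console.log(
  nfsDevice({ host: 'nas.example.org', port: 2049, path: '/exports/xo' })
)
// nas.example.org:2049:/exports/xo
```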

|

||||

|

||||

|

||||

@@ -5,19 +5,13 @@ import normalizePath from './_normalizePath'

|

||||

|

||||

export default class SmbMountHandler extends MountHandler {

|

||||

constructor(remote, opts) {

|

||||

const {

|

||||

domain = 'WORKGROUP',

|

||||

host,

|

||||

options,

|

||||

password,

|

||||

path,

|

||||

username,

|

||||

} = parse(remote.url)

|

||||

const { domain = 'WORKGROUP', host, password, path, username } = parse(

|

||||

remote.url

|

||||

)

|

||||

super(remote, opts, {

|

||||

type: 'cifs',

|

||||

device: '//' + host + normalizePath(path),

|

||||

options:

|

||||

`domain=${domain}` + (options !== undefined ? `,${options}` : ''),

|

||||

options: `domain=${domain}`,

|

||||

env: {

|

||||

USER: username,

|

||||

PASSWD: password,

|

||||

|

||||

@@ -7,6 +7,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/log",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/log",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

@@ -30,7 +31,7 @@

|

||||

},

|

||||

"dependencies": {

|

||||

"lodash": "^4.17.4",

|

||||

"promise-toolbox": "^0.11.0"

|

||||

"promise-toolbox": "^0.12.1"

|

||||

},

|

||||

"devDependencies": {

|

||||

"@babel/cli": "^7.0.0",

|

||||

|

||||

@@ -7,6 +7,7 @@

|

||||

"homepage": "https://github.com/vatesfr/xen-orchestra/tree/master/@xen-orchestra/mixin",

|

||||

"bugs": "https://github.com/vatesfr/xen-orchestra/issues",

|

||||

"repository": {

|

||||

"directory": "@xen-orchestra/mixin",

|

||||

"type": "git",

|

||||

"url": "https://github.com/vatesfr/xen-orchestra.git"

|

||||

},

|

||||

|

||||

145

CHANGELOG.md

@@ -1,9 +1,112 @@

|

||||

# ChangeLog

## *next*
## Next (2019-03-19)

### Enhancements

- [SR/Disk] Disable actions on unmanaged VDIs [#3988](https://github.com/vatesfr/xen-orchestra/issues/3988) (PR [#4000](https://github.com/vatesfr/xen-orchestra/pull/4000))
- [Pool] Specify automatic networks on a Pool [#3916](https://github.com/vatesfr/xen-orchestra/issues/3916) (PR [#3958](https://github.com/vatesfr/xen-orchestra/pull/3958))
- [VM/advanced] Manage start delay for VM [#3909](https://github.com/vatesfr/xen-orchestra/issues/3909) (PR [#4002](https://github.com/vatesfr/xen-orchestra/pull/4002))
- [New/Vm] SR section: Display warning message when the selected SRs aren't in the same host [#3911](https://github.com/vatesfr/xen-orchestra/issues/3911) (PR [#3967](https://github.com/vatesfr/xen-orchestra/pull/3967))
- Enable compression for HTTP requests (and initial objects fetch)
- [VDI migration] Display same-pool SRs first in the selector [#3945](https://github.com/vatesfr/xen-orchestra/issues/3945) (PR [#3996](https://github.com/vatesfr/xen-orchestra/pull/3996))
- [Home] Save the current page in the URL [#3993](https://github.com/vatesfr/xen-orchestra/issues/3993) (PR [#3999](https://github.com/vatesfr/xen-orchestra/pull/3999))
- [VDI] Ensure suspend VDI is destroyed when destroying a VM [#4027](https://github.com/vatesfr/xen-orchestra/issues/4027) (PR [#4038](https://github.com/vatesfr/xen-orchestra/pull/4038))
- [VM/disk]: Warning when 2 VDIs are on 2 different hosts' local SRs [#3911](https://github.com/vatesfr/xen-orchestra/issues/3911) (PR [#3969](https://github.com/vatesfr/xen-orchestra/pull/3969))

### Bug fixes

- [New network] PIF was wrongly required, which prevented creating a private network (PR [#4010](https://github.com/vatesfr/xen-orchestra/pull/4010))
- [Google authentication] Migrate to new endpoint
- [Backup NG] Better handling of huge logs [#4025](https://github.com/vatesfr/xen-orchestra/issues/4025) (PR [#4026](https://github.com/vatesfr/xen-orchestra/pull/4026))
- [Home/VM] Bulk migration: fixed VM VDIs not migrated to the selected SR [#3986](https://github.com/vatesfr/xen-orchestra/issues/3986) (PR [#3987](https://github.com/vatesfr/xen-orchestra/pull/3987))
- [Stats] Fix cache usage with simultaneous requests [#4017](https://github.com/vatesfr/xen-orchestra/issues/4017) (PR [#4028](https://github.com/vatesfr/xen-orchestra/pull/4028))
- [Backup NG] Fix compression displayed for the wrong backup mode (PR [#4021](https://github.com/vatesfr/xen-orchestra/pull/4021))

## **5.32.2** (2019-02-28)

### Bug fixes

- Fix XAPI events monitoring on old version (XenServer 7.2)

## **5.32.1** (2019-02-28)

### Bug fixes

- Fix a very short timeout in the monitoring of XAPI events which may lead to unresponsive XenServer hosts

## **5.32.0** (2019-02-28)

### Enhancements

- [VM migration] Display same-pool hosts first in the selector [#3262](https://github.com/vatesfr/xen-orchestra/issues/3262) (PR [#3890](https://github.com/vatesfr/xen-orchestra/pull/3890))
- [Home/VM] Sort VM by start time [#3955](https://github.com/vatesfr/xen-orchestra/issues/3955) (PR [#3970](https://github.com/vatesfr/xen-orchestra/pull/3970))
- [Editable fields] Unfocusing (clicking outside) submits the change instead of canceling (PR [#3980](https://github.com/vatesfr/xen-orchestra/pull/3980))
- [Network] Dedicated page for network creation [#3895](https://github.com/vatesfr/xen-orchestra/issues/3895) (PR [#3906](https://github.com/vatesfr/xen-orchestra/pull/3906))
- [Logs] Add button to download the log [#3957](https://github.com/vatesfr/xen-orchestra/issues/3957) (PR [#3985](https://github.com/vatesfr/xen-orchestra/pull/3985))
- [Continuous Replication] Share full copy between schedules [#3973](https://github.com/vatesfr/xen-orchestra/issues/3973) (PR [#3995](https://github.com/vatesfr/xen-orchestra/pull/3995))
- [Backup] Ability to backup XO configuration and pool metadata [#808](https://github.com/vatesfr/xen-orchestra/issues/808) [#3501](https://github.com/vatesfr/xen-orchestra/issues/3501) (PR [#3912](https://github.com/vatesfr/xen-orchestra/pull/3912))

### Bug fixes

- [Host] Fix multipathing status for XenServer < 7.5 [#3956](https://github.com/vatesfr/xen-orchestra/issues/3956) (PR [#3961](https://github.com/vatesfr/xen-orchestra/pull/3961))
- [Home/VM] Show creation date of the VM only if it is available [#3953](https://github.com/vatesfr/xen-orchestra/issues/3953) (PR [#3959](https://github.com/vatesfr/xen-orchestra/pull/3959))
- [Notifications] Fix invalid notifications when not registered (PR [#3966](https://github.com/vatesfr/xen-orchestra/pull/3966))
- [Import] Fix import of some OVA files [#3962](https://github.com/vatesfr/xen-orchestra/issues/3962) (PR [#3974](https://github.com/vatesfr/xen-orchestra/pull/3974))
- [Servers] Fix *already connected error* after a server has been removed during connection [#3976](https://github.com/vatesfr/xen-orchestra/issues/3976) (PR [#3977](https://github.com/vatesfr/xen-orchestra/pull/3977))
- [Backup] Fix random _mount_ issues with NFS/SMB remotes [#3973](https://github.com/vatesfr/xen-orchestra/issues/3973) (PR [#4003](https://github.com/vatesfr/xen-orchestra/pull/4003))

### Released packages

- @xen-orchestra/fs v0.7.0
- xen-api v0.24.3
- xoa-updater v0.15.2
- xo-server v5.36.0
- xo-web v5.36.0

## **5.31.2** (2019-02-08)

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Home] Set description on bulk snapshot [#3925](https://github.com/vatesfr/xen-orchestra/issues/3925) (PR [#3933](https://github.com/vatesfr/xen-orchestra/pull/3933))

|

||||

- Work-around the XenServer issue when `VBD#VDI` is an empty string instead of an opaque reference (PR [#3950](https://github.com/vatesfr/xen-orchestra/pull/3950))

|

||||

- [VDI migration] Retry when XenServer fails with `TOO_MANY_STORAGE_MIGRATES` (PR [#3940](https://github.com/vatesfr/xen-orchestra/pull/3940))

|

||||

- [VM]

|

||||

- [General] The creation date of the VM is now visible [#3932](https://github.com/vatesfr/xen-orchestra/issues/3932) (PR [#3947](https://github.com/vatesfr/xen-orchestra/pull/3947))

|

||||

- [Disks] Display device name [#3902](https://github.com/vatesfr/xen-orchestra/issues/3902) (PR [#3946](https://github.com/vatesfr/xen-orchestra/pull/3946))

|

||||

- [VM Snapshotting]

|

||||

- Detect and destroy broken quiesced snapshot left by XenServer [#3936](https://github.com/vatesfr/xen-orchestra/issues/3936) (PR [#3937](https://github.com/vatesfr/xen-orchestra/pull/3937))

|

||||

- Retry twice after a 1 minute delay if quiesce failed [#3938](https://github.com/vatesfr/xen-orchestra/issues/3938) (PR [#3952](https://github.com/vatesfr/xen-orchestra/pull/3952))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [Import] Fix import of big OVA files

|

||||

- [Host] Show the host's memory usage instead of the sum of the VMs' memory usage (PR [#3924](https://github.com/vatesfr/xen-orchestra/pull/3924))

|

||||

- [SAML] Make `AssertionConsumerServiceURL` match the callback URL

|

||||

- [Backup NG] Correctly delete broken VHD chains [#3875](https://github.com/vatesfr/xen-orchestra/issues/3875) (PR [#3939](https://github.com/vatesfr/xen-orchestra/pull/3939))

|

||||

- [Remotes] Don't ignore `mount` options [#3935](https://github.com/vatesfr/xen-orchestra/issues/3935) (PR [#3931](https://github.com/vatesfr/xen-orchestra/pull/3931))

|

||||

|

||||

### Released packages

|

||||

|

||||

- xen-api v0.24.2

|

||||

- @xen-orchestra/fs v0.6.1

|

||||

- xo-server-auth-saml v0.5.3

|

||||

- xo-server v5.35.0

|

||||

- xo-web v5.35.0

|

||||

|

||||

## **5.31.0** (2019-01-31)

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Backup NG] Restore logs moved to restore tab [#3772](https://github.com/vatesfr/xen-orchestra/issues/3772) (PR [#3802](https://github.com/vatesfr/xen-orchestra/pull/3802))

|

||||

- [Remotes] New SMB implementation that provides better stability and performance [#2257](https://github.com/vatesfr/xen-orchestra/issues/2257) (PR [#3708](https://github.com/vatesfr/xen-orchestra/pull/3708))

|

||||

- [VM/advanced] ACL management from VM view [#3040](https://github.com/vatesfr/xen-orchestra/issues/3040) (PR [#3774](https://github.com/vatesfr/xen-orchestra/pull/3774))

|

||||

- [VM / snapshots] Ability to save the VM memory [#3795](https://github.com/vatesfr/xen-orchestra/issues/3795) (PR [#3812](https://github.com/vatesfr/xen-orchestra/pull/3812))

|

||||

- [Backup NG / Health] Show number of lone snapshots in tab label [#3500](https://github.com/vatesfr/xen-orchestra/issues/3500) (PR [#3824](https://github.com/vatesfr/xen-orchestra/pull/3824))

|

||||

- [Login] Add autofocus on username input on login page [#3835](https://github.com/vatesfr/xen-orchestra/issues/3835) (PR [#3836](https://github.com/vatesfr/xen-orchestra/pull/3836))

|

||||

- [Home/VM] Bulk snapshot: specify snapshots' names [#3778](https://github.com/vatesfr/xen-orchestra/issues/3778) (PR [#3787](https://github.com/vatesfr/xen-orchestra/pull/3787))

|

||||

- [Remotes] Show free space and disk usage on remote [#3055](https://github.com/vatesfr/xen-orchestra/issues/3055) (PR [#3767](https://github.com/vatesfr/xen-orchestra/pull/3767))

|

||||

- [New SR] Add tooltip for reattach action button [#3845](https://github.com/vatesfr/xen-orchestra/issues/3845) (PR [#3852](https://github.com/vatesfr/xen-orchestra/pull/3852))

|

||||

- [VM migration] Display hosts' free memory [#3264](https://github.com/vatesfr/xen-orchestra/issues/3264) (PR [#3832](https://github.com/vatesfr/xen-orchestra/pull/3832))

|

||||

- [Plugins] New field to filter displayed plugins (PR [#3871](https://github.com/vatesfr/xen-orchestra/pull/3871))

|

||||

- Ability to copy ID of "unknown item"s [#3833](https://github.com/vatesfr/xen-orchestra/issues/3833) (PR [#3856](https://github.com/vatesfr/xen-orchestra/pull/3856))

|

||||

@@ -24,6 +127,12 @@

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [Self] Display sorted Resource Sets [#3818](https://github.com/vatesfr/xen-orchestra/issues/3818) (PR [#3823](https://github.com/vatesfr/xen-orchestra/pull/3823))

|

||||

- [Servers] Correctly report connecting status (PR [#3838](https://github.com/vatesfr/xen-orchestra/pull/3838))

|

||||

- [Servers] Fix cannot reconnect to a server after connection has been lost [#3839](https://github.com/vatesfr/xen-orchestra/issues/3839) (PR [#3841](https://github.com/vatesfr/xen-orchestra/pull/3841))

|

||||

- [New VM] Fix `NO_HOSTS_AVAILABLE()` error when creating a VM on a local SR from template on another local SR [#3084](https://github.com/vatesfr/xen-orchestra/issues/3084) (PR [#3827](https://github.com/vatesfr/xen-orchestra/pull/3827))

|

||||

- [Backup NG] Fix typo in the form [#3854](https://github.com/vatesfr/xen-orchestra/issues/3854) (PR [#3855](https://github.com/vatesfr/xen-orchestra/pull/3855))

|

||||

- [New SR] No warning when creating a NFS SR on a path that is already used as NFS SR [#3844](https://github.com/vatesfr/xen-orchestra/issues/3844) (PR [#3851](https://github.com/vatesfr/xen-orchestra/pull/3851))

|

||||

- [New SR] No redirection if the SR creation failed or canceled [#3843](https://github.com/vatesfr/xen-orchestra/issues/3843) (PR [#3853](https://github.com/vatesfr/xen-orchestra/pull/3853))

|

||||

- [Home] Fix two tabs opened by middle click in Firefox [#3450](https://github.com/vatesfr/xen-orchestra/issues/3450) (PR [#3825](https://github.com/vatesfr/xen-orchestra/pull/3825))

|

||||

- [XOA] Enable downgrade for ending trial (PR [#3867](https://github.com/vatesfr/xen-orchestra/pull/3867))

|

||||

@@ -38,6 +147,8 @@

|

||||

|

||||

### Released packages

|

||||

|

||||

- vhd-cli v0.2.0

|

||||

- @xen-orchestra/fs v0.6.0

|

||||

- vhd-lib v0.5.1

|

||||

- xoa-updater v0.15.0

|

||||

- xen-api v0.24.1

|

||||

@@ -45,38 +156,6 @@

|

||||

- xo-server v5.34.0

|

||||

- xo-web v5.34.0

|

||||

|

||||

## *staging*

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Backup NG] Restore logs moved to restore tab [#3772](https://github.com/vatesfr/xen-orchestra/issues/3772) (PR [#3802](https://github.com/vatesfr/xen-orchestra/pull/3802))

|

||||

- [Remotes] New SMB implementation that provides better stability and performance [#2257](https://github.com/vatesfr/xen-orchestra/issues/2257) (PR [#3708](https://github.com/vatesfr/xen-orchestra/pull/3708))

|

||||

- [VM/advanced] ACL management from VM view [#3040](https://github.com/vatesfr/xen-orchestra/issues/3040) (PR [#3774](https://github.com/vatesfr/xen-orchestra/pull/3774))

|

||||

- [VM / snapshots] Ability to save the VM memory [#3795](https://github.com/vatesfr/xen-orchestra/issues/3795) (PR [#3812](https://github.com/vatesfr/xen-orchestra/pull/3812))

|

||||

- [Backup NG / Health] Show number of lone snapshots in tab label [#3500](https://github.com/vatesfr/xen-orchestra/issues/3500) (PR [#3824](https://github.com/vatesfr/xen-orchestra/pull/3824))

|

||||

- [Login] Add autofocus on username input on login page [#3835](https://github.com/vatesfr/xen-orchestra/issues/3835) (PR [#3836](https://github.com/vatesfr/xen-orchestra/pull/3836))

|

||||

- [Home/VM] Bulk snapshot: specify snapshots' names [#3778](https://github.com/vatesfr/xen-orchestra/issues/3778) (PR [#3787](https://github.com/vatesfr/xen-orchestra/pull/3787))

|

||||

- [Remotes] Show free space and disk usage on remote [#3055](https://github.com/vatesfr/xen-orchestra/issues/3055) (PR [#3767](https://github.com/vatesfr/xen-orchestra/pull/3767))

|

||||

- [New SR] Add tooltip for reattach action button [#3845](https://github.com/vatesfr/xen-orchestra/issues/3845) (PR [#3852](https://github.com/vatesfr/xen-orchestra/pull/3852))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [Self] Display sorted Resource Sets [#3818](https://github.com/vatesfr/xen-orchestra/issues/3818) (PR [#3823](https://github.com/vatesfr/xen-orchestra/pull/3823))

|

||||

- [Servers] Correctly report connecting status (PR [#3838](https://github.com/vatesfr/xen-orchestra/pull/3838))

|

||||

- [Servers] Fix cannot reconnect to a server after connection has been lost [#3839](https://github.com/vatesfr/xen-orchestra/issues/3839) (PR [#3841](https://github.com/vatesfr/xen-orchestra/pull/3841))

|

||||

- [New VM] Fix `NO_HOSTS_AVAILABLE()` error when creating a VM on a local SR from template on another local SR [#3084](https://github.com/vatesfr/xen-orchestra/issues/3084) (PR [#3827](https://github.com/vatesfr/xen-orchestra/pull/3827))

|

||||

- [Backup NG] Fix typo in the form [#3854](https://github.com/vatesfr/xen-orchestra/issues/3854) (PR [#3855](https://github.com/vatesfr/xen-orchestra/pull/3855))

|

||||

- [New SR] No warning when creating a NFS SR on a path that is already used as NFS SR [#3844](https://github.com/vatesfr/xen-orchestra/issues/3844) (PR [#3851](https://github.com/vatesfr/xen-orchestra/pull/3851))

|

||||

|

||||

### Released packages

|

||||

|

||||

- vhd-lib v0.5.0

|

||||

- vhd-cli v0.2.0

|

||||

- xen-api v0.24.0

|

||||

- @xen-orchestra/fs v0.6.0

|

||||

- xo-server v5.33.0

|

||||

- xo-web v5.33.0

|

||||

|

||||

## **5.30.0** (2018-12-20)

|

||||

|

||||

### Enhancements

|

||||

|

||||

37

CHANGELOG.unreleased.md

Normal file

@@ -0,0 +1,37 @@

|

||||

> This file contains all changes that have not been released yet.

|

||||

|

||||

### Enhancements

|

||||

|

||||

- [Remotes] Benchmarks (read and write rate speed) added when remote is tested [#3991](https://github.com/vatesfr/xen-orchestra/issues/3991) (PR [#4015](https://github.com/vatesfr/xen-orchestra/pull/4015))

|

||||

- [Cloud Config] Support both NoCloud and Config Drive 2 datasources for maximum compatibility (PR [#4053](https://github.com/vatesfr/xen-orchestra/pull/4053))

|

||||

- [Advanced] Configurable cookie validity (PR [#4059](https://github.com/vatesfr/xen-orchestra/pull/4059))

|

||||

- [Plugins] Display number of installed plugins [#4008](https://github.com/vatesfr/xen-orchestra/issues/4008) (PR [#4050](https://github.com/vatesfr/xen-orchestra/pull/4050))

|

||||

- [Continuous Replication] Opt-in mode to guess VHD size, should help with XenServer 7.1 CU2 and various `VDI_IO_ERROR` errors (PR [#3726](https://github.com/vatesfr/xen-orchestra/pull/3726))

|

||||

- [VM/Snapshots] Always delete broken quiesced snapshots [#4074](https://github.com/vatesfr/xen-orchestra/issues/4074) (PR [#4075](https://github.com/vatesfr/xen-orchestra/pull/4075))

|

||||

- [Settings/Servers] Display link to pool [#4041](https://github.com/vatesfr/xen-orchestra/issues/4041) (PR [#4045](https://github.com/vatesfr/xen-orchestra/pull/4045))

|

||||

- [Import] Change wording of drop zone (PR [#4020](https://github.com/vatesfr/xen-orchestra/pull/4020))

|

||||

- [Backup NG] Ability to set the interval of the full backups [#1783](https://github.com/vatesfr/xen-orchestra/issues/1783) (PR [#4083](https://github.com/vatesfr/xen-orchestra/pull/4083))

|

||||

- [Hosts] Display a warning icon if you have XenServer license restrictions [#4091](https://github.com/vatesfr/xen-orchestra/issues/4091) (PR [#4094](https://github.com/vatesfr/xen-orchestra/pull/4094))

|

||||

- [Restore] Ability to restore a metadata backup [#4004](https://github.com/vatesfr/xen-orchestra/issues/4004) (PR [#4023](https://github.com/vatesfr/xen-orchestra/pull/4023))

|

||||

|

||||

### Bug fixes

|

||||

|

||||

- [Home] Always sort the items by their names as a secondary sort criterion [#3983](https://github.com/vatesfr/xen-orchestra/issues/3983) (PR [#4047](https://github.com/vatesfr/xen-orchestra/pull/4047))

|

||||

- [Remotes] Fix `spawn mount EMFILE` error during backup

|

||||

- Properly redirect to sign in page instead of being stuck in a refresh loop

|

||||

- [Backup-ng] No more false positives when listing matching VMs on Home page [#4078](https://github.com/vatesfr/xen-orchestra/issues/4078) (PR [#4085](https://github.com/vatesfr/xen-orchestra/pull/4085))

|

||||

- [Plugins] Properly remove optional settings when unchecking _Fill information_ (PR [#4076](https://github.com/vatesfr/xen-orchestra/pull/4076))

|

||||

- [Patches] (PR [#4077](https://github.com/vatesfr/xen-orchestra/pull/4077))

|

||||

- Add a host to a pool: fixes the auto-patching of the host on XenServer < 7.2 [#3783](https://github.com/vatesfr/xen-orchestra/issues/3783)

|

||||

- Add a host to a pool: homogenizes both the host and **pool**'s patches [#2188](https://github.com/vatesfr/xen-orchestra/issues/2188)

|

||||

- Safely install a subset of patches on a pool [#3777](https://github.com/vatesfr/xen-orchestra/issues/3777)

|

||||

- XCP-ng: no longer requires running `yum install xcp-ng-updater` when it's already installed [#3934](https://github.com/vatesfr/xen-orchestra/issues/3934)

|

||||

|

||||

### Released packages

|

||||

|

||||

- xen-api v0.24.6

|

||||

- vhd-lib v0.6.0

|

||||

- @xen-orchestra/fs v0.8.0

|

||||

- xo-server-usage-report v0.7.2

|

||||

- xo-server v5.38.0

|

||||

- xo-web v5.38.0

|

||||

@@ -4,7 +4,7 @@

|

||||

|

||||

- [ ] PR references the relevant issue (e.g. `Fixes #007`)

|

||||

- [ ] if UI changes, a screenshot has been added to the PR

|

||||

- [ ] CHANGELOG:

|

||||

- [ ] `CHANGELOG.unreleased.md`:

|

||||

- enhancement/bug fix entry added

|

||||

- list of packages to release updated (`${name} v${new version}`)

|

||||

- [ ] documentation updated

|

||||

|

||||

@@ -33,6 +33,7 @@

|

||||

* [Disaster recovery](disaster_recovery.md)

|

||||

* [Smart Backup](smart_backup.md)

|

||||

* [File level Restore](file_level_restore.md)

|

||||

* [Metadata Backup](metadata_backup.md)

|

||||

* [Backup Concurrency](concurrency.md)

|

||||

* [Configure backup reports](backup_reports.md)

|

||||

* [Backup troubleshooting](backup_troubleshooting.md)

|

||||

@@ -51,6 +52,7 @@

|

||||

* [Job manager](scheduler.md)

|

||||

* [Alerts](alerts.md)

|

||||

* [Load balancing](load_balancing.md)

|

||||

* [Emergency Shutdown](emergency_shutdown.md)

|

||||

* [Auto scalability](auto_scalability.md)

|

||||

* [Forecaster](forecaster.md)

|

||||

* [Recipes](recipes.md)

|

||||

|

||||

BIN

docs/assets/cloud-init-1.png

Normal file

|

After Width: | Height: | Size: 5.8 KiB |

BIN

docs/assets/cloud-init-2.png

Normal file

|

After Width: | Height: | Size: 11 KiB |

BIN

docs/assets/cloud-init-3.png

Normal file

|

After Width: | Height: | Size: 8.4 KiB |

BIN

docs/assets/cloud-init-4.png

Normal file

|

After Width: | Height: | Size: 13 KiB |

BIN

docs/assets/cr-seed-1.png

Normal file

|

After Width: | Height: | Size: 12 KiB |

BIN

docs/assets/cr-seed-2.png

Normal file

|

After Width: | Height: | Size: 14 KiB |

BIN

docs/assets/cr-seed-3.png

Normal file

|

After Width: | Height: | Size: 15 KiB |

BIN

docs/assets/cr-seed-4.png

Normal file

|

After Width: | Height: | Size: 17 KiB |

BIN

docs/assets/e-shutdown-1.png

Normal file

|

After Width: | Height: | Size: 2.8 KiB |

BIN

docs/assets/e-shutdown-2.png

Normal file

|

After Width: | Height: | Size: 3.5 KiB |

BIN

docs/assets/e-shutdown-3.png

Normal file

|

After Width: | Height: | Size: 3.5 KiB |

@@ -12,7 +12,9 @@ Another good way to check if there is activity is the XOA VM stats view (on the

|

||||

|

||||

### VDI chain protection

|

||||

|

||||

This means your previous VM disks and snapshots should be "merged" (*coalesced* in the XenServer world) before we can take a new snapshot. This mechanism is handled by XenServer itself, not Xen Orchestra. But we can check your existing VDI chain and avoid creating more snapshots than your storage can merge. Otherwise, this will lead to catastrophic consequences. Xen Orchestra is the **only** XenServer/XCP backup product dealing with this.

|

||||

Backup jobs regularly delete snapshots. When a snapshot is deleted, either manually or via a backup job, XenServer needs to coalesce the VDI chain, that is, to merge the remaining VDIs and base copies in the chain. This generally means we cannot take too many new snapshots on that VM until XenServer has finished running a coalesce job on the VDI chain.

|

||||

|

||||

This mechanism and its scheduling are handled by XenServer itself, not Xen Orchestra. But we can check your existing VDI chain and avoid creating more snapshots than your storage can merge. If we don't, this will lead to catastrophic consequences. Xen Orchestra is the **only** XenServer/XCP backup product that takes this into account and offers protection.

|

||||

|

||||

Without this detection, you could have 2 potential issues:

|

||||

|

||||

@@ -21,9 +23,9 @@ Without this detection, you could have 2 potential issues:

|

||||

|

||||

The first issue is a chain that contains more than 30 elements (fixed XenServer limit), and the other one means it's full because the "coalesce" process couldn't keep up the pace and the storage filled up.

|

||||

|

||||

In the end, this message is a **protection mechanism against damaging your SR**. The backup job will fail, but XenServer itself should eventually automatically coalesce the snapshot chain, and the next time the backup job runs, it should complete.

|

||||

In the end, this message is a **protection mechanism preventing damage to your SR**. The backup job will fail, but XenServer itself should eventually automatically coalesce the snapshot chain, and the next time the backup job runs, it should complete.

|

||||

|

||||

Just remember this: **coalesce will happen every time a snapshot is removed**.

|

||||

Just remember this: **a coalesce should happen every time a snapshot is removed**.

|

||||

|

||||

> You can read more on this on our dedicated blog post regarding [XenServer coalesce detection](https://xen-orchestra.com/blog/xenserver-coalesce-detection-in-xen-orchestra/).

|

||||

|

||||

@@ -33,11 +35,13 @@ Just remember this: **coalesce will happen every time a snapshot is removed**.

|

||||

|

||||

First check `SMlog` on the XenServer host for messages relating to VDI corruption or coalesce job failure. For example, run `cat /var/log/SMlog | grep -i exception` or `cat /var/log/SMlog | grep -i error` on the XenServer host with the affected storage.

|

||||

|

||||

Coalesce jobs can also fail to run if the SR does not have enough free space. Check the problematic SR and make sure it has enough free space, generally 30% or more free is recommended depending on VM size.

|

||||

Coalesce jobs can also fail to run if the SR does not have enough free space. Check the problematic SR and make sure it has enough free space; 30% or more free is generally recommended, depending on VM size. You can check if this is the issue by searching `SMlog` with `grep -i coales /var/log/SMlog` (you may have to look at previous logs such as `SMlog.1`).
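
The log search described above can be wrapped in a small helper that also covers rotated logs. This is only a sketch: the function name `scan_smlog` and its two parameters (log directory, search pattern) are made up for illustration, and it assumes rotated logs follow the `SMlog.N` naming mentioned above, possibly gzip-compressed.

```shell
# Sketch: search SMlog and its rotated copies for a pattern (default: "coales").
# scan_smlog is a hypothetical helper name, not an existing XenServer tool.
scan_smlog() {
    log_dir="${1:-/var/log}"   # directory holding SMlog (assumed default)
    pattern="${2:-coales}"     # case-insensitive search pattern
    for f in "$log_dir"/SMlog "$log_dir"/SMlog.[0-9]*; do
        # Skip names that do not exist (e.g. an unmatched glob).
        [ -f "$f" ] || continue
        case "$f" in
            # Rotated logs may be compressed; decompress to stdout first.
            *.gz) gzip -dc "$f" | grep -i -- "$pattern" || true ;;
            *)    grep -i -- "$pattern" "$f" || true ;;
        esac
    done
    return 0
}
```

On the XenServer host, `scan_smlog` with no arguments searches `/var/log`, and `scan_smlog /var/log exception` reuses the same loop for the exception search.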

|

||||

|

||||

You can check if a coalesce job is currently active by running `ps axf | grep vhd` on the XenServer host and looking for a VHD process in the results (one of the resulting processes will be the grep command you just ran, ignore that one).
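
To avoid having to ignore the `grep` line by hand, the process list can be filtered a second time. The sketch below uses a hypothetical helper name, `filter_vhd_processes`, and reads the process list from standard input so it can be tried on any text.

```shell
# Sketch: keep lines mentioning "vhd" and drop the grep command itself.
# filter_vhd_processes is a hypothetical helper name.
filter_vhd_processes() {
    # The first grep selects VHD-related lines; the second removes the
    # line for the grep process; "|| true" keeps an empty result from
    # being treated as a failure.
    grep -i 'vhd' | grep -v 'grep' || true
}

# Intended use on the XenServer host (not executed here):
#   ps axf | filter_vhd_processes
```

An empty result suggests that no coalesce job is currently running.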

|

||||

|

||||

If you don't see any running coalesce jobs, and can't find any other reason that XenServer has not started one, you can attempt to make it start a coalesce job by rescanning the SR. This is harmless to try, but will not always result in a coalesce. Visit the problematic SR in the XOA UI, then click the "Rescan All Disks" button towards the top right: it looks like a refresh circle icon. This should begin the coalesce process - if you click the Advanced tab in the SR view, the "disks needing to be coalesced" list should become smaller and smaller.

|

||||

If you don't see any running coalesce jobs, and can't find any other reason that XenServer has not started one, you can attempt to make it start a coalesce job by rescanning the SR. This is harmless to try, but will not always result in a coalesce. Visit the problematic SR in the XOA UI, then click the "Rescan All Disks" button towards the top right: it looks like a refresh circle icon. This should begin the coalesce process - if you click the Advanced tab in the SR view, the "disks needing to be coalesced" list should become smaller and smaller.

|

||||

|

||||

As a last resort, migrating the VM (more specifically, its disks) to a new storage repository will also force a coalesce and solve this issue. That means migrating a VM to another host (with its own storage) and back will force the VDI chain for that VM to be coalesced, and get rid of the `VDI Chain Protection` message.

|

||||

|

||||

### Parse Error

|

||||

|

||||

|

||||

@@ -1,5 +1,7 @@

|

||||

# Backups

|

||||

|

||||

> Watch our [introduction video](https://www.youtube.com/watch?v=FfUqIwT8KzI) (45m) to Backup in Xen Orchestra!

|

||||

|

||||

This section is dedicated to all existing methods of rolling back or backing up your VMs in Xen Orchestra.

|

||||

|

||||

There are several ways to protect your VMs:

|

||||

@@ -8,6 +10,7 @@ There are several ways to protect your VMs:

|

||||

* [Rolling Snapshots](rolling_snapshots.md) [*Starter Edition*]

|

||||

* [Delta Backups](delta_backups.md) (best of both previous ones) [*Enterprise Edition*]

|

||||

* [Disaster Recovery](disaster_recovery.md) [*Enterprise Edition*]

|

||||

* [Metadata Backups](metadata_backup.md) [*Enterprise Edition*]

|

||||

* [Continuous Replication](continuous_replication.md) [*Premium Edition*]

|

||||

* [File Level Restore](file_level_restore.md) [*Premium Edition*]

|

||||

|

||||

@@ -39,7 +42,7 @@ Each backups' job execution is identified by a `runId`. You can find this `runId

|

||||

|

||||

All backup types rely on snapshots. But what about data consistency? By default, Xen Orchestra will try to take a **quiesced snapshot** every time a snapshot is done (and fall back to normal snapshots if it's not possible).

|

||||

|

||||

Snapshots of Windows VMs can be quiesced (especially MS SQL or Exchange services) after you have installed Xen Tools in your VMs. However, [there is an extra step to install the VSS provider on Windows](quiesce). A quiesced snapshot means the operating system will be notified and the cache will be flushed to disks. This way, your backups will always be consistent.

|

||||

Snapshots of Windows VMs can be quiesced (especially MS SQL or Exchange services) after you have installed Xen Tools in your VMs. However, [there is an extra step to install the VSS provider on Windows](https://xen-orchestra.com/blog/xenserver-quiesce-snapshots/). A quiesced snapshot means the operating system will be notified and the cache will be flushed to disks. This way, your backups will always be consistent.

|

||||

|

||||

To see if you have quiesced snapshots for a VM, just go into its snapshot tab, then the "info" icon means it is a quiesced snapshot:

|

||||

|

||||

|

||||

@@ -1,7 +1,5 @@

|

||||

# CloudInit

|

||||

|

||||

> CloudInit support is available in the 4.11 release and higher

|

||||

|

||||

Cloud-init is a program "that handles the early initialization of a cloud instance"[^n]. In other words, you can, on a "cloud-init"-ready template VM, pass a lot of data at first boot:

|

||||

|

||||

* setting the hostname

|

||||

@@ -18,25 +16,27 @@ So it means very easily customizing your VM when you create it from a compatible

|

||||

|

||||

You only need to use a template of a VM with CloudInit installed inside it. [Check this blog post to learn how to install CloudInit](https://xen-orchestra.com/blog/centos-cloud-template-for-xenserver/).

|

||||

|

||||

**Note:** In XOA 5.31, we changed the cloud-init config drive type from [OpenStack](https://cloudinit.readthedocs.io/en/latest/topics/datasources/configdrive.html) to the [NoCloud](https://cloudinit.readthedocs.io/en/latest/topics/datasources/nocloud.html) type. This will allow us to pass network configuration to VMs in the future. For 99% of users, including default cloud-init installs, this change will have no effect. However if you have previously modified your cloud-init installation in a VM template to only look for `openstack` drive types (for instance with the `datasource_list` setting in `/etc/cloud/cloud.cfg`) you need to modify it to also look for `nocloud`.
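
A quick way to see whether a template is affected is to inspect its `datasource_list` setting. The sketch below uses a hypothetical helper, `check_datasource_list`, and assumes the setting sits on a single line in the file you pass in. It does not handle a multi-line YAML list or overrides in other cloud-init config files.

```shell
# Sketch: report whether a cloud-init config restricts datasource_list and,
# if so, whether NoCloud is among the listed datasources.
# check_datasource_list is a hypothetical helper name.
check_datasource_list() {
    cfg="${1:-/etc/cloud/cloud.cfg}"
    # Match only uncommented, single-line datasource_list entries.
    line=$(grep -i '^[[:space:]]*datasource_list' "$cfg" 2>/dev/null || true)
    if [ -z "$line" ]; then
        echo "unrestricted"        # no explicit list: defaults apply
    elif printf '%s\n' "$line" | grep -qi 'nocloud'; then
        echo "nocloud-enabled"     # list already includes NoCloud
    else
        echo "nocloud-missing"     # list must be extended with NoCloud
    fi
}
```

Run inside the template VM, `check_datasource_list` prints `nocloud-missing` when the list needs to be extended, for instance to `datasource_list: [ NoCloud, ConfigDrive ]`.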

|

||||

|

||||

## Usage

|

||||

|

||||

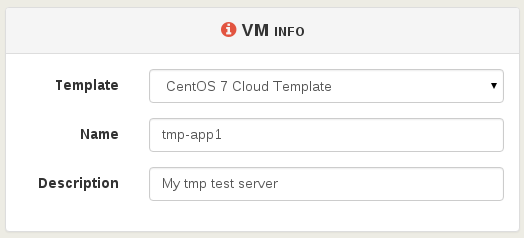

First, select your compatible template (CloudInit ready) and name it:

|

||||

|

||||

|

||||

|

||||

|

||||

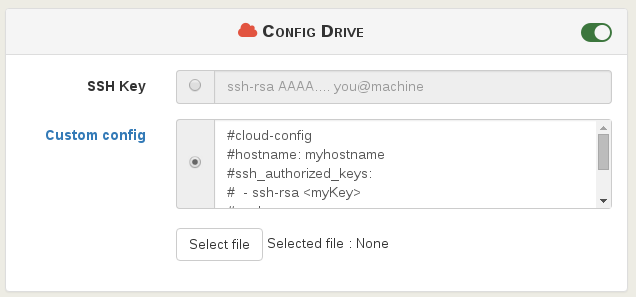

Then, activate the config drive and insert your SSH key. Or you can also use a custom CloudInit configuration:

|

||||

|

||||

|

||||

|

||||

|

||||

> CloudInit configuration examples are [available here](http://cloudinit.readthedocs.org/en/latest/topics/examples.html).

|

||||

|

||||

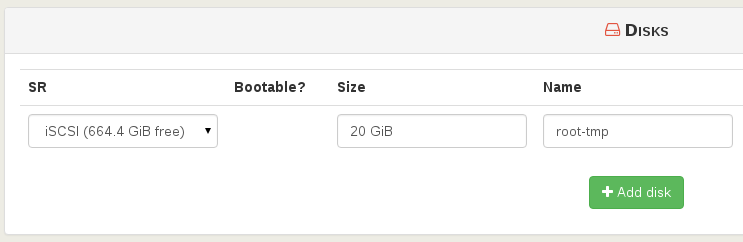

You can extend the disk size (**in this case, the template disk was 8 GiB originally**):

|

||||

You can extend the disk size (**in this case, the template disk was 8 GiB originally**). We'll extend it to 20 GiB:

|

||||

|

||||

|

||||

|

||||

|

||||

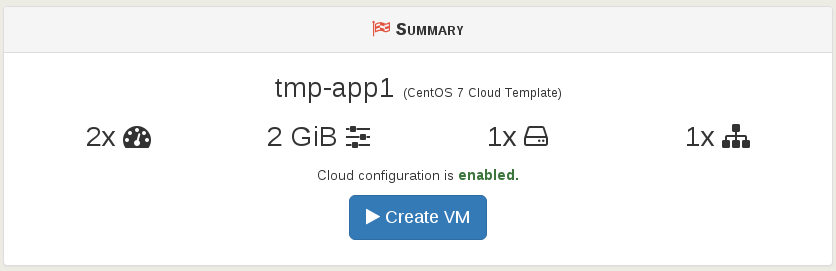

Finally, create the VM:

|

||||

|

||||

|

||||

|

||||

|

||||

Now start the VM and SSH to its IP:

|

||||

|

||||

|

||||

@@ -43,11 +43,19 @@ To protect the replication, we removed the possibility to boot your copied VM di

|

||||

|

||||

### Job creation

|

||||

|

||||

Create the Continuous Replication backup job, and leave it disabled for now. On the main Backup-NG page, note its identifiers, the main `backupJobId` and the ID of one of the schedules for the job, `backupScheduleId`.

|

||||

Create the Continuous Replication backup job, and leave it disabled for now. On the main Backup-NG page, copy the job's `backupJobId` by hovering to the left of the shortened ID and clicking the copy to clipboard button:

|

||||

|

||||

|

||||

|

||||

Copy it somewhere temporarily. Now we need to also copy the ID of the job schedule, `backupScheduleId`. Do this by hovering over the schedule name in the same panel as before, and clicking the copy to clipboard button. Keep it with the `backupJobId` you copied previously as we will need them all later:

|

||||

|

||||

|

||||

|

||||

### Seed creation

|

||||

|

||||

Manually create a snapshot on the VM to backup, and note its UUID as `snapshotUuid` from the snapshot panel for the VM.

|

||||

Manually create a snapshot on the VM being backed up, then copy this snapshot's UUID (`snapshotUuid`) from the snapshot panel of the VM:

|

||||

|

||||

|

||||

|

||||

> DO NOT ever delete or alter this snapshot, feel free to rename it to make that clear.

|

||||

|

||||

@@ -55,7 +63,9 @@ Manually create a snapshot on the VM to backup, and note its UUID as `snapshotUu

|

||||

|

||||

Export this snapshot to a file, then import it on the target SR.

|

||||

|

||||

Note the UUID of this newly created VM as `targetVmUuid`.

|

||||

We need to copy the UUID of this newly created VM as well, `targetVmUuid`:

|

||||

|

||||

|

||||

|

||||

> DO NOT start this VM or it will break the Continuous Replication job! You can rename this VM to more easily remember this.

|

||||

|

||||

@@ -66,7 +76,7 @@ The XOA backup system requires metadata to correctly associate the source snapsh

|

||||

First install the tool (all the following is done from the XOA VM CLI):

|

||||

|

||||

```

|

||||

npm i -g xo-cr-seed

|

||||

sudo npm i -g --unsafe-perm @xen-orchestra/cr-seed-cli

|

||||

```

|

||||

|

||||

Here is an example of how the utility expects the UUIDs and info passed to it:

|

||||

|

||||

27

docs/emergency_shutdown.md

Normal file

@@ -0,0 +1,27 @@

|

||||

# Emergency Shutdown

|

||||

|

||||